Troubleshooting MIG on HPE Ezmeral Runtime Enterprise

Troubleshooting tips for verifying MIG installation and configuration in Kubernetes deployments of HPE Ezmeral Runtime Enterprise.

These tips apply to NVIDIA Multi-Instance GPU (MIG) devices in Kubernetes deployments of HPE Ezmeral Runtime Enterprise.

Verifying Matching bdconfig and nvidia-smi Output

On the GPU host, verify that the information about GPU and MIG returned by bdconfig matches the GPU and MIG information returned by nvidia-smi.

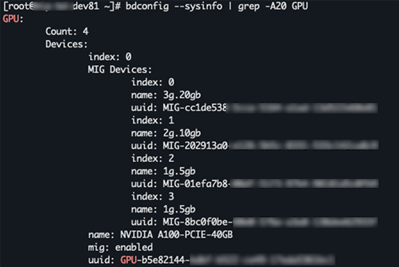

Example bdconfig --sysinfo command and output:

Example nvidia-smi -L command and output:

sudo nvidia-smi -L

GPU 0: NVIDIA A100-PCIE-40GB (UUID: GPU-b5e82144-xxxx-xxxx-xxxx-xxxxxxxxxxxx)

  MIG 3g.20gb Device 0: (UUID: MIG-cc1de538-xxxx-xxxx-xxxx-xxxxxxxxxxxx)

  MIG 2g.10gb Device 1: (UUID: MIG-202913a0-xxxx-xxxx-xxxx-xxxxxxxxxxxx)

  MIG 1g.5gb Device 2: (UUID: MIG-01efa7b8-xxxx-xxxx-xxxx-xxxxxxxxxxxx)

  MIG 1g.5gb Device 3: (UUID: MIG-8bc0f0be-xxxx-xxxx-xxxx-xxxxxxxxxxxx)
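To make the comparison less error-prone, the nvidia-smi -L output can be summarized with a few shell functions and the totals checked against the GPU and MIG information that bdconfig --sysinfo reports. The following is a minimal sketch; the function names are hypothetical, and the parsing assumes the nvidia-smi -L line format in the example above (one line starting with "GPU" per physical GPU and one line starting with "MIG" per MIG device).

```shell
# Hypothetical helpers for summarizing `nvidia-smi -L` output read from stdin.
# Assumes one line starting with "GPU" per physical GPU and one line starting
# with "MIG" (possibly indented) per MIG device.

# Print the number of physical GPUs.
count_gpus() {
  grep -c '^GPU '
}

# Print the number of MIG devices across all GPUs.
count_mig_devices() {
  grep -c '^[[:space:]]*MIG '
}

# Print "<count> <profile>" for each MIG profile, for example "2 1g.5gb".
mig_profile_summary() {
  awk '$1 == "MIG" { n[$2]++ } END { for (p in n) print n[p], p }' | sort -k2
}

# Usage on the GPU host:
#   sudo nvidia-smi -L | count_mig_devices
#   sudo nvidia-smi -L | mig_profile_summary
```

For the example output on this page, count_mig_devices prints 4, and mig_profile_summary reports two 1g.5gb devices and one device each of 2g.10gb and 3g.20gb.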

Verifying GPU Node Labels

On worker nodes that have MIG-enabled GPUs, verify the node labels:

- The hpe.com/mig.strategy label is set to either single or mixed. For example: "hpe.com/mig.strategy": "single" or "hpe.com/mig.strategy": "mixed"
- The nvidia.com/gpu.product label specifies a MIG-enabled GPU and MIG configuration. For example: "nvidia.com/gpu.product": "NVIDIA-A100-PCIE-40GB-MIG-1g.5gb"
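A minimal sketch for checking these labels from the Kubernetes master follows. It assumes the strategy label key is hpe.com/mig.strategy (Kubernetes label keys cannot contain commas) and that a MIG product label value contains "-MIG-", as in the example value above. The helper function names are hypothetical.

```shell
# Hypothetical helper: succeed only if a MIG strategy label value is one of
# the two values documented on this page ("single" or "mixed").
is_valid_mig_strategy() {
  case "$1" in
    single|mixed) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: succeed only if a GPU product label value names a MIG
# configuration, following the example format on this page
# (for example, "NVIDIA-A100-PCIE-40GB-MIG-1g.5gb").
is_mig_product_label() {
  case "$1" in
    *-MIG-*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage (assumes the label key hpe.com/mig.strategy):
#   kubectl get nodes -L hpe.com/mig.strategy -L nvidia.com/gpu.product
#   s=$(kubectl get node "$NODE" -o jsonpath='{.metadata.labels.hpe\.com/mig\.strategy}')
#   is_valid_mig_strategy "$s" && echo "MIG strategy: $s"
```

The -L option of kubectl get adds each requested label as an extra column, so one command shows the labels for every node at once.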

Verifying That the Required Pods Are Running

Verify that the nvidia-device-plugin, gpu-feature-discovery, nfd-worker, and nfd-master pods are running on all nodes that have MIG-enabled GPUs.

kubectl get nodes

kubectl -n kube-system get pods -o wide | grep nvidia

kubectl -n kube-system get pods -o wide | grep nfd

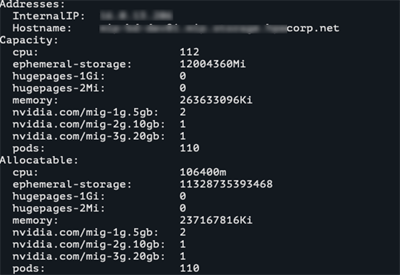
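Scanning the pod list by eye is easy to get wrong on larger clusters. The sketch below filters the same kubectl output and prints only the NVIDIA, GPU feature discovery, and NFD pods that are not fully ready. It assumes the default pod table layout, in which READY and STATUS are the second and third columns; the function name is hypothetical.

```shell
# Hypothetical helper: read `kubectl -n kube-system get pods -o wide` output
# on stdin and print NVIDIA, GPU feature discovery, and NFD pods that are not
# in the Running state or whose READY column shows fewer ready containers
# than total containers (for example, 0/1).
not_ready_gpu_pods() {
  awk '$1 != "NAME" && $1 ~ /nvidia|gpu-feature-discovery|nfd/ {
         split($2, r, "/")            # READY is "<ready>/<total>"
         if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
       }'
}

# Usage on the Kubernetes master (no output means every matching pod is ready):
#   kubectl -n kube-system get pods -o wide | not_ready_gpu_pods
```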

Verifying GPU Resources on Worker Nodes

Use the kubectl describe node command to verify that the MIG resources are allocatable on worker nodes.
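The resource names to look for depend on the MIG strategy. Assuming the NVIDIA device plugin's standard naming, the mixed strategy advertises one resource per MIG profile (for example, nvidia.com/mig-1g.5gb), while the single strategy advertises MIG devices as nvidia.com/gpu. The following sketch, with a hypothetical function name, extracts only the nvidia.com/* entries from the Allocatable section of kubectl describe node output, so they are not confused with the identically formatted Capacity section.

```shell
# Hypothetical helper: read `kubectl describe node <node>` output on stdin
# and print the nvidia.com/* resources listed under "Allocatable:".
allocatable_gpu_resources() {
  awk '
    /^Allocatable:/ { in_alloc = 1; next }  # start of the Allocatable section
    /^[^ \t]/       { in_alloc = 0 }        # any unindented line ends it
    in_alloc && $1 ~ /^nvidia\.com\// { print $1, $2 }
  '
}

# Usage on the Kubernetes master:
#   kubectl describe node "$NODE" | allocatable_gpu_resources
```

Comparing this list with the MIG devices that nvidia-smi -L reports on the same host is a quick consistency check.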

The following example shows output when the mixed strategy is configured:

Verifying the Number of NVIDIA DaemonSets

NVIDIA plugin pods are configured automatically on hosts that have GPU resources and are not present on hosts without GPUs. These pods enable GPU reservation in application YAML files and are deployed by four DaemonSets:

- nvidia-device-plugin-mixed
- nvidia-device-plugin-single
- gpu-feature-discovery-mixed
- gpu-feature-discovery-single

To list the NVIDIA device plugin and GPU feature discovery DaemonSets, execute the following commands on the Kubernetes master:

kubectl get -n kube-system ds -l app.kubernetes.io/name=nvidia-device-plugin

kubectl get -n kube-system ds -l app.kubernetes.io/name=gpu-feature-discovery

The following example shows the output when one GPU node is configured to use the mixed strategy:

kubectl get -n kube-system ds -l app.kubernetes.io/name=nvidia-device-plugin

NAME                          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE

nvidia-device-plugin-mixed    1         1         1       1            1           <none>          11d

nvidia-device-plugin-single   0         0         0       0            0           <none>          11d

kubectl get -n kube-system ds -l app.kubernetes.io/name=gpu-feature-discovery

NAME                           DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR                                      AGE

gpu-feature-discovery-mixed    1         1         1       1            1           feature.node.kubernetes.io/pci-10de.present=true   11d

gpu-feature-discovery-single   0         0         0       0            0           feature.node.kubernetes.io/pci-10de.present=true   11d
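In this output, DESIRED is the number of nodes a DaemonSet targets, so the example reflects one node using the mixed strategy and none using the single strategy. A DaemonSet whose READY count is lower than its DESIRED count has pods that are not yet healthy. A minimal sketch that flags such DaemonSets follows; the function name is hypothetical, and it assumes the column order in the example (NAME, DESIRED, CURRENT, READY, and so on).

```shell
# Hypothetical helper: read `kubectl get ds` output on stdin and print any
# DaemonSet whose READY count differs from its DESIRED count.
# Assumed columns: NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
unready_daemonsets() {
  awk '$1 != "NAME" && $2 != $4 { print $1, "desired=" $2, "ready=" $4 }'
}

# Usage on the Kubernetes master (no output means every DaemonSet is ready):
#   kubectl get -n kube-system ds -l app.kubernetes.io/name=nvidia-device-plugin | unready_daemonsets
#   kubectl get -n kube-system ds -l app.kubernetes.io/name=gpu-feature-discovery | unready_daemonsets
```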

Verifying MIG Configuration After Host Reboot

You can verify that the MIG configuration was restored after a reboot by executing the following command:

sudo service bds-nvidia-mig-config status

The output of the command is similar to the following:

Redirecting to /bin/systemctl status bds-nvidia-mig-config.service

bds-nvidia-mig-config.service - Oneshot service to re-create NVIDIA MIG devices

Loaded: loaded (/usr/lib/systemd/system/bds-nvidia-mig-config.service; enabled; vendor preset: disabled)

Active: active (exited) since Sat 2022-12-10 18:34:11 PST; 1 weeks 3 days ago

Main PID: 2164 (code=exited, status=0/SUCCESS)

Tasks: 0

Memory: 0B

CGroup: /system.slice/bds-nvidia-mig-config.service

Dec 10 18:33:01 mynode-88.mycorp.net systemd[1]: Starting Oneshot service to re-create NVIDIA MI...

Dec 10 18:34:11 mynode-88.mycorp.net python[2164]: MIG command 'nvidia-smi mig -i 0 -lgi' failed...

Dec 10 18:34:11 mynode-88.mycorp.net python[2164]: Failed getting current MIG configuration on G...

Dec 10 18:34:11 mynode-88.mycorp.net python[2164]: Got stored GPU MIG configuration. Trying to r...

Dec 10 18:34:11 mynode-88.mycorp.net python[2164]: Restoring MIG configuration on GPU 0: '[{u'start...

Dec 10 18:34:11 mynode-88.mycorp.net python[2164]: MIG configuration on GPU 0 restored successfully.

Dec 10 18:34:11 mynode-88.mycorp.net systemd[1]: Started Oneshot service to re-create NVIDIA MIG...

bds-nvidia-mig-config Service

The bds-nvidia-mig-config service is a systemd service that preserves MIG device configurations across system reboots.

You can check its status and examine its logs by executing commands such as the following:

systemctl status bds-nvidia-mig-config

journalctl -u bds-nvidia-mig-config
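The example status output contains "failed" messages before the final success message, so searching the log for errors alone can be misleading. A more direct check is to look for the "restored successfully" line from the current boot. The sketch below assumes the service logs the same success message as in the example status output; the function name is hypothetical.

```shell
# Hypothetical helper: read bds-nvidia-mig-config log lines on stdin and
# succeed only if they contain the restore-success message shown in the
# example status output ("MIG configuration on GPU 0 restored successfully.").
mig_restore_succeeded() {
  grep -q 'MIG configuration on GPU [0-9][0-9]* restored successfully'
}

# Usage on the GPU host (-b limits the journal to the current boot):
#   journalctl -u bds-nvidia-mig-config -b | mig_restore_succeeded && echo "MIG restored"
#   systemctl is-active bds-nvidia-mig-config   # prints "active" for "active (exited)"
```

This checks only for the message in the example; log messages that the service may emit in other situations are not covered.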