Life of a Message

To show how the HPE Ezmeral Data Fabric Streams concepts fit together, here is an example of the flow of one message from a producer to a consumer.

The Setup

Suppose that you are using HPE Ezmeral Data Fabric Streams as part of a system to monitor traffic in San Francisco. Your producers are sensors in streets, freeways, bridges, overpasses, and other infrastructure, as well as sensors reporting the weather in many different locations. Your consumers are various analytical and reporting tools.

In a volume in a data-fabric cluster, you create the stream /somepath/traffic_monitoring. In that stream, you create the topics traffic, infrastructure, and weather_conditions.

Of all of the sensors (producers) that your system uses to monitor traffic, let us choose one that is under the pavement of Market Street and follow a message that it generates and publishes in the traffic topic.

When you create a topic, you can create several partitions within it to help spread the load among the different nodes in your data-fabric cluster and to help improve the performance of your consumers. For simplicity, this example assumes that the traffic topic has only one partition.

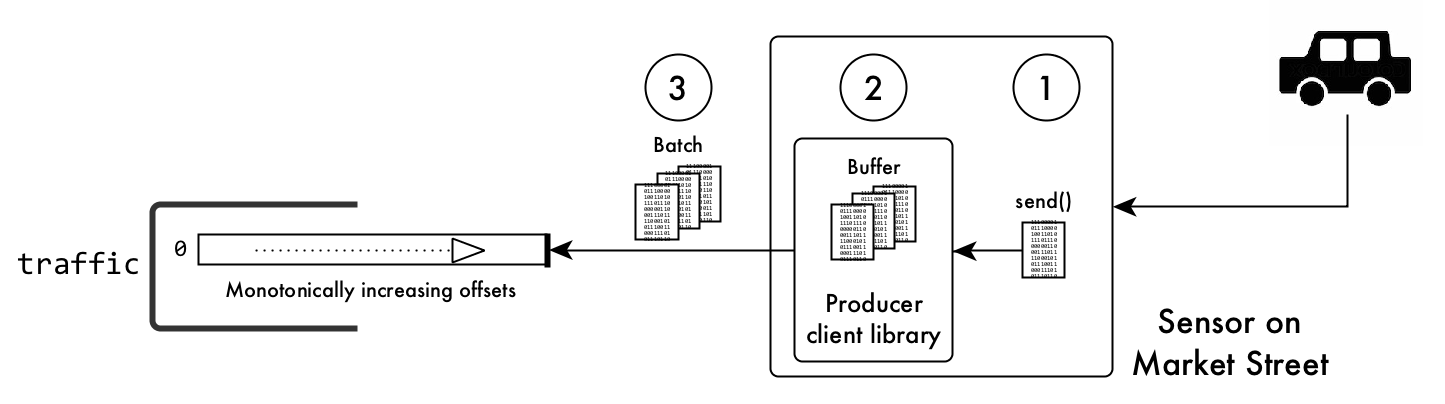

A Message Enters the System

- A car, one of hundreds on Market Street in morning rush-hour traffic, runs over the sensor. This action triggers the sensor to send a message to an HPE Ezmeral Data Fabric Streams producer client library.

  NOTE: This message might list geospatial coordinates, time, date, direction, weight, distance between front and rear wheels, and more. HPE Ezmeral Data Fabric Streams does not help you decide which data to collect.

- The client buffers the message.

- When the client has a large number of messages buffered (because other cars have subsequently triggered the sensor) or after an interval of time has expired, the client batches and sends the messages in the buffer. The message that we are following is published in the partition along with the rest of the messages in the batch. When the message is published, the HPE Ezmeral Data Fabric Streams server assigns it the offset 001030 (which is only an example offset; real offsets are more sophisticated). These messages, being the most recent to be published, are written to the head of the partition.
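The buffering behavior in the steps above can be sketched in a few lines. This is a toy model, not the HPE Ezmeral Data Fabric Streams client library: the class name `BatchingBuffer`, its parameters, and the thresholds are all hypothetical and chosen only for illustration. It flushes a batch when either a message count or a wait interval is reached.

```python
import time
from typing import Callable, List, Optional


class BatchingBuffer:
    """Toy stand-in for a producer client's buffer (hypothetical, not the real client)."""

    def __init__(self, send: Callable[[List[bytes]], None],
                 max_messages: int = 3, max_wait_seconds: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._send = send                  # called once per batch
        self._max_messages = max_messages  # size threshold (assumed value)
        self._max_wait = max_wait_seconds  # time threshold (assumed value)
        self._clock = clock
        self._pending: List[bytes] = []
        self._oldest: Optional[float] = None  # arrival time of the oldest buffered message

    def add(self, message: bytes) -> None:
        """Buffer one message and flush if either threshold is reached."""
        if not self._pending:
            self._oldest = self._clock()
        self._pending.append(message)
        self.flush_if_due()

    def flush_if_due(self) -> None:
        """Flush when the buffer is full or the oldest message has waited long enough."""
        if not self._pending:
            return
        full = len(self._pending) >= self._max_messages
        waited = self._clock() - self._oldest >= self._max_wait
        if full or waited:
            self.flush()

    def flush(self) -> None:
        """Send everything buffered as one batch, preserving arrival order."""
        if self._pending:
            batch, self._pending = self._pending, []
            self._oldest = None
            self._send(batch)
```

In this sketch the time threshold is checked only when `add` or `flush_if_due` is called; a real client would typically use a background timer instead.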

For a moment, suppose that this example used more than one partition. In that case, the sensor could influence how the HPE Ezmeral Data Fabric Streams server determines which messages go to which partition. In the example that we are following, the sensor could include a key with each message. The HPE Ezmeral Data Fabric Streams server would hash the key to determine the partition in which to place the messages received from the sensor. How partitions are selected when a topic has more than one is explained later in this documentation.
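As a rough illustration of key-based partition selection, the sketch below hashes a key and reduces it modulo the number of partitions. The function name `choose_partition` is hypothetical, and CRC-32 is only a convenient stable hash for the example; this documentation does not say which hash function the server actually uses.

```python
import zlib


def choose_partition(key: bytes, partition_count: int) -> int:
    """Map a message key to a partition index with a stable hash (illustrative only)."""
    if partition_count < 1:
        raise ValueError("partition_count must be at least 1")
    # The same key always yields the same index, so all messages
    # from one sensor land in the same partition.
    return zlib.crc32(key) % partition_count


# Hypothetical key: an identifier for the Market Street sensor.
partition = choose_partition(b"sensor-market-street", 4)
```

Because the mapping depends only on the key and the partition count, messages that share a key stay in publication order relative to each other within their partition.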

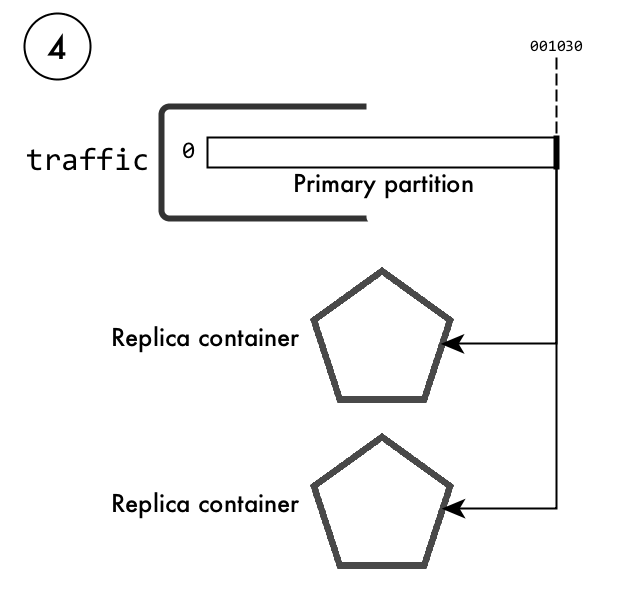

- Each partition and all of its messages are replicated. The server owning the primary partition for the traffic topic assigns the offset 001030 to the message that we are following, and replicates the message to replica containers (replication rules are controlled at the volume level) within the data-fabric cluster.

Figure 2. Replication of the partition in the topic traffic

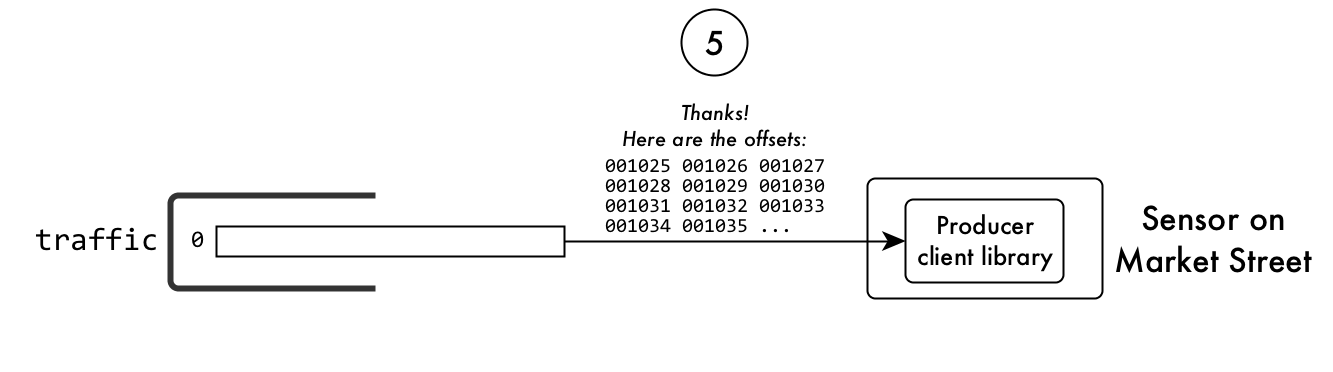

- The server acknowledges receiving the batch of messages and sends the offsets that it assigned to them.

Figure 3. The server acknowledges receiving the messages
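The offset assignment and acknowledgment described above can be modeled with a small in-memory log. This is a simplified sketch: `PartitionLog` and `append_batch` are hypothetical names, offsets are plain consecutive integers (real offsets are more sophisticated), and replication is not modeled.

```python
from typing import List


class PartitionLog:
    """Toy in-memory partition (hypothetical); not the real server."""

    def __init__(self, first_offset: int = 0) -> None:
        self._first_offset = first_offset
        self._messages: List[bytes] = []

    def append_batch(self, batch: List[bytes]) -> List[int]:
        """Append a batch at the head and return the assigned offsets as the acknowledgment."""
        start = self._first_offset + len(self._messages)
        self._messages.extend(batch)
        return list(range(start, start + len(batch)))


# The message we are following is the third one in its batch.
log = PartitionLog(first_offset=1028)
acknowledged = log.append_batch([b"car-1", b"car-2", b"car-3"])
```

The producer client can match each returned offset to the message at the same position in the batch it sent.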

The Message is Read from the System

A consumer application is subscribed to the traffic topic. Many more consumers could subscribe to it, too.

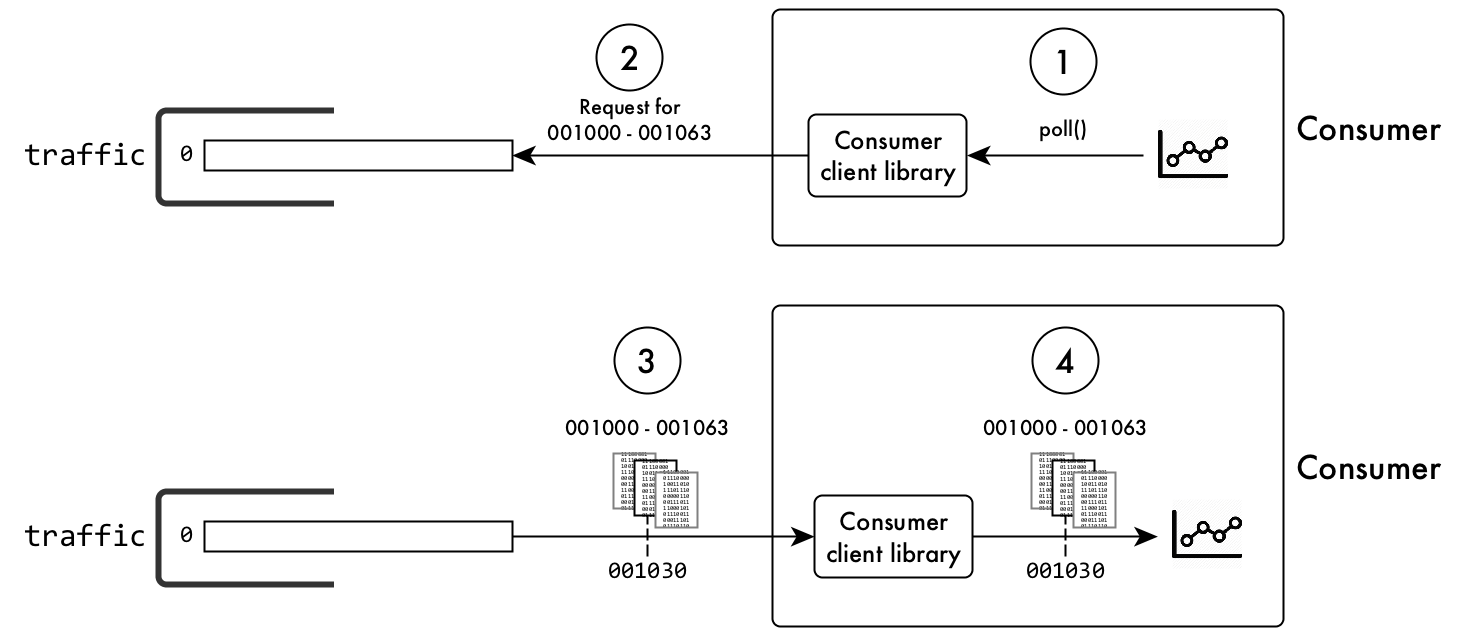

- The application issues a request to the consumer client library to poll the topic for messages that the application has not yet read.

- The client requests messages that are more recent than the consumer has yet read.

- The primary partition returns multiple messages to the client. The originals of the messages remain on the partition and are available to other consumers.

- The client passes the messages to the application, which extracts the data from them and processes it.

- If more unread messages remain in the partition, the process repeats, starting with another poll request from the application.
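The read path above can be sketched as a poll loop in which each consumer tracks the next offset that it has not yet read. All names here (`Partition`, `Consumer`, `read_all`, `max_poll`) are hypothetical and the model is deliberately minimal. It shows that reading does not remove messages, so other consumers can read the same data independently.

```python
from typing import List, Tuple


class Partition:
    """Toy partition; a message's list index serves as its offset."""

    def __init__(self, messages: List[bytes]) -> None:
        self._messages = list(messages)

    def fetch(self, from_offset: int, max_messages: int) -> List[Tuple[int, bytes]]:
        """Return up to max_messages (offset, message) pairs without removing them."""
        end = min(from_offset + max_messages, len(self._messages))
        return [(i, self._messages[i]) for i in range(from_offset, end)]


class Consumer:
    """Toy consumer client that remembers its own read position."""

    def __init__(self, partition: Partition, max_poll: int = 2) -> None:
        self._partition = partition
        self._max_poll = max_poll
        self._next_offset = 0  # first offset this consumer has not read yet

    def poll(self) -> List[Tuple[int, bytes]]:
        """Request messages more recent than any this consumer has read."""
        records = self._partition.fetch(self._next_offset, self._max_poll)
        if records:
            self._next_offset = records[-1][0] + 1
        return records


def read_all(consumer: Consumer) -> List[bytes]:
    """Application loop: keep polling until no unread messages remain."""
    seen: List[bytes] = []
    while True:
        records = consumer.poll()
        if not records:
            break
        seen.extend(message for _, message in records)
    return seen
```

A real application would usually keep polling indefinitely rather than stop at the first empty result, because new messages continue to arrive.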

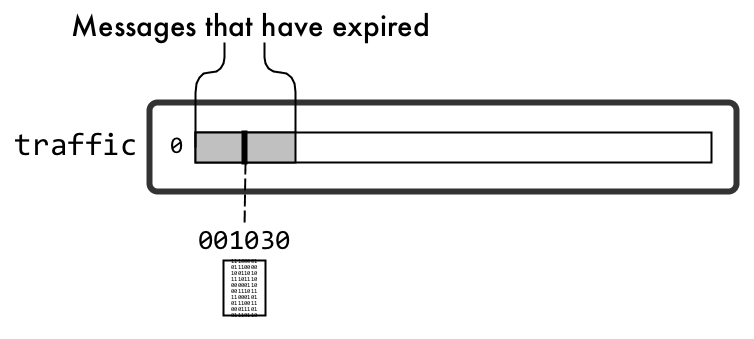

The Original Message is Deleted

Back in the cluster in San Francisco, messages are being continuously published to the partition in the traffic topic. Message 001030 is now much further back in the partition. More recent messages have filled the partition ahead of it.

When you created the stream, you set the time-to-live for messages to be six months. Message 001030 and the messages around it have been in the partition for that period and have expired. An automatic process eventually reclaims the disk space that message 001030 and the other expired messages are using.
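The expiry rule can be expressed as a simple age check. The sketch below is only a model of the idea: `drop_expired` is a hypothetical helper, six months is approximated as 182 days, and the treatment of a message exactly at the time-to-live boundary is an assumption. In the real system the reclaiming is done by an automatic process, not by your application.

```python
from typing import List, Tuple

SIX_MONTHS_SECONDS = 182 * 24 * 60 * 60  # rough stand-in for "six months"

# Each entry is (offset, publish_time_in_seconds, payload).
Entry = Tuple[int, float, bytes]


def drop_expired(messages: List[Entry], now: float,
                 ttl_seconds: float = SIX_MONTHS_SECONDS) -> List[Entry]:
    """Keep only the entries whose age is still below the time-to-live."""
    return [entry for entry in messages if now - entry[1] < ttl_seconds]
```

For example, an entry published at time 0 is dropped once `now` reaches `SIX_MONTHS_SECONDS`, while an entry published later remains until its own age reaches the limit.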