Static and Dynamic Volume Provisioning Using Container Storage Interface (CSI) Storage Plugin

Explains static and dynamic volume provisioning using the CSI plugin.

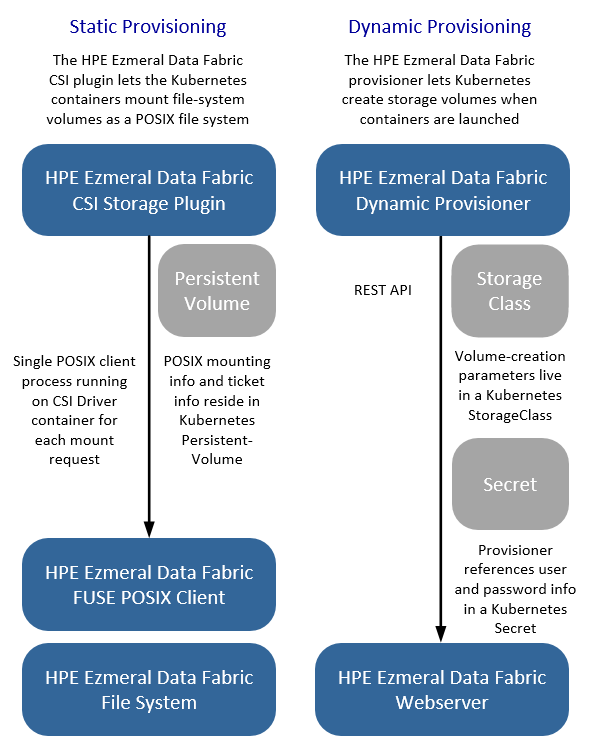

Kubernetes makes a distinction between static and dynamic provisioning of storage.

In static provisioning, a Data Fabric administrator first creates Data Fabric volumes (mount points) and ensures that they are mounted. A Kubernetes administrator then exposes those Data Fabric mount points in Kubernetes through PersistentVolumes. In a typical static-provisioning scenario, a Pod author asks a Kubernetes administrator to create a PersistentVolume that points to the CSI driver and references an existing Data Fabric mount point containing a dataset the Pod author needs. The CSI driver then mounts and unmounts that Data Fabric mount point for the requesting Pod. In addition, CSI supports creating a PersistentVolume directly by creating a PersistentVolumeClaim.
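As a sketch of the static case, the following Python builds a PersistentVolume that points to the CSI driver and an existing Data Fabric mount point, plus a PersistentVolumeClaim that binds to it by name. The driver name `com.mapr.csi-kdf`, the `volumeAttributes` keys (`cluster`, `cldbHosts`, `volumePath`, `securityType`), and all cluster, host, path, namespace, and Secret names are assumptions for illustration; verify them against the CSI driver's own documentation. The surrounding fields (`spec.csi.driver`, `volumeHandle`, `nodePublishSecretRef`, `volumeName`) are standard Kubernetes API fields.

```python
import json

# Assumption: driver name of the FUSE-based Data Fabric CSI driver; verify for your install.
CSI_DRIVER = "com.mapr.csi-kdf"


def static_persistent_volume(name, cluster, cldb_hosts, volume_path, ticket_secret, namespace):
    """Build a PersistentVolume that references an existing Data Fabric mount point."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": "5Gi"},
            "accessModes": ["ReadWriteOnce"],
            # Retain: deleting the claim must not touch the pre-existing Data Fabric volume.
            "persistentVolumeReclaimPolicy": "Retain",
            # Empty class: this PV is only for statically bound claims.
            "storageClassName": "",
            "csi": {
                "driver": CSI_DRIVER,
                "volumeHandle": f"{name}-handle",  # must be unique per driver
                # Secret holding the Data Fabric ticket used by the POSIX client.
                "nodePublishSecretRef": {"name": ticket_secret, "namespace": namespace},
                # Driver-specific attributes; these key names are hypothetical.
                "volumeAttributes": {
                    "cluster": cluster,
                    "cldbHosts": cldb_hosts,
                    "volumePath": volume_path,
                    "securityType": "secure",
                },
            },
        },
    }


def static_claim(name, namespace, pv_name):
    """Build a PersistentVolumeClaim that binds to one specific PersistentVolume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": "",  # disable dynamic provisioning for this claim
            "volumeName": pv_name,  # bind to the pre-created PV by name
            "resources": {"requests": {"storage": "5Gi"}},
        },
    }


if __name__ == "__main__":
    # All values below are placeholders.
    pv = static_persistent_volume(
        "dataset-pv", "clusterA", "10.10.10.210:7222",
        "/datasets/sales", "datafabric-ticket-secret", "apps",
    )
    pvc = static_claim("dataset-pvc", "apps", pv["metadata"]["name"])
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be piped to `kubectl apply -f -`. Setting `storageClassName` to an empty string on both objects keeps a default StorageClass from dynamically provisioning a different volume for the claim.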

In dynamic provisioning, a Kubernetes administrator creates a set of StorageClasses that point to the CSI provisioner for Data Fabric. Each StorageClass has a predefined set of storage characteristics, such as the CLDB hosts, REST server hosts, provisioner Secret name and namespace, Data Fabric volume name prefix, Data Fabric volume mount path, and volume advisory quota size. The Pod author searches the predefined StorageClasses for the one that best matches the author's requirements. When the Pod references this StorageClass through a PersistentVolumeClaim, Kubernetes calls the CSI provisioner for Data Fabric named in the StorageClass, which creates the Data Fabric volume and allocates storage for the requesting Pod dynamically.
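The StorageClass characteristics listed above can be sketched as a Python-built manifest, together with a PersistentVolumeClaim that selects the class through `storageClassName`. The provisioner name `com.mapr.csi-kdf` and the parameter keys (`cldbHosts`, `restServers`, `csiProvisionerSecretName`, `csiProvisionerSecretNamespace`, `namePrefix`, `mountPrefix`, `advisoryquota`) are assumptions modeled on this description, not a confirmed parameter list; host addresses and names are placeholders.

```python
import json

# Assumption: provisioner name registered by the Data Fabric CSI driver.
PROVISIONER = "com.mapr.csi-kdf"


def storage_class(name, cldb_hosts, rest_servers, secret_name, secret_namespace,
                  name_prefix, mount_prefix, advisory_quota):
    """Build a StorageClass carrying the Data Fabric characteristics (hypothetical keys)."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
        "reclaimPolicy": "Delete",
        # Kubernetes requires every parameter value to be a string.
        "parameters": {
            "cldbHosts": cldb_hosts,
            "restServers": rest_servers,
            "csiProvisionerSecretName": secret_name,
            "csiProvisionerSecretNamespace": secret_namespace,
            "namePrefix": name_prefix,
            "mountPrefix": mount_prefix,
            "advisoryquota": advisory_quota,
        },
    }


def dynamic_claim(name, namespace, storage_class_name, size):
    """Build a PersistentVolumeClaim that triggers dynamic provisioning."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    sc = storage_class(
        name="datafabric-secure-sc",
        cldb_hosts="10.10.10.210:7222",
        rest_servers="10.10.10.210:8443",
        secret_name="datafabric-provisioner-secret",
        secret_namespace="datafabric-csi",
        name_prefix="csi-pv",
        mount_prefix="/csi",
        advisory_quota="100M",
    )
    pvc = dynamic_claim("scratch-pvc", "apps", sc["metadata"]["name"], "5Gi")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sc, pvc]}, indent=2))
```

The advisory quota is passed as the string `"100M"` rather than a number because the StorageClass `parameters` field is a string-to-string map in the Kubernetes API.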

To use the Data Fabric file system from a Kubernetes cluster, you can create a PersistentVolume in Kubernetes.

The diagram above shows the two ways in which a PersistentVolume can be provisioned for the POSIX client. With the Loopback NFS plugin, the Loopback NFS server performs the functions of the POSIX client shown in the diagram.

To accomplish static provisioning, the CSI Driver for Data Fabric for Kubernetes is deployed to all nodes in the Kubernetes cluster through a Kubernetes DaemonSet. The CSI driver mounts the Data Fabric file system by using either the Basic POSIX client (the default) or the optional Platinum POSIX client. The information that the POSIX client uses to connect to Data Fabric is contained in a Kubernetes Volume or PersistentVolume specification. That specification references a Kubernetes Secret containing a Data Fabric ticket, which the POSIX client uses to pass secure data to the file system.
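A Kubernetes Secret stores its values base64-encoded under `data`. The sketch below wraps a Data Fabric ticket in such a Secret so that a Volume or PersistentVolume specification can reference it (for a CSI PersistentVolume, through `nodePublishSecretRef`). The key name `CONTAINER_TICKET`, the Secret name, the namespace, and the placeholder ticket text are assumptions; in practice the ticket contents come from a Data Fabric ticket file.

```python
import base64
import json


def ticket_secret(name, namespace, ticket_text, key="CONTAINER_TICKET"):
    """Build an Opaque Secret holding a Data Fabric ticket (key name is hypothetical)."""
    # Secret "data" values must be base64-encoded strings.
    encoded = base64.b64encode(ticket_text.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {key: encoded},
    }


if __name__ == "__main__":
    # Placeholder only; real ticket contents come from a Data Fabric ticket file.
    ticket = "clusterA <ticket-contents>"
    print(json.dumps(ticket_secret("datafabric-ticket-secret", "apps", ticket), indent=2))
```

Encoding the ticket client-side under `data` keeps the manifest byte-for-byte identical to what the API server stores; the alternative `stringData` field lets the API server do the encoding instead.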

To accomplish dynamic provisioning, the CSI provisioner is deployed as a StatefulSet in the Kubernetes cluster.

A Kubernetes administrator must configure at least one StorageClass with the Data Fabric parameters (for example, mirroring, snapshots, quotas, and other parameters) to use when creating Data Fabric volumes. The StorageClass passes Data Fabric administrative credentials to the provisioner through a Kubernetes Secret. Security for the provisioner is handled through Kubernetes role-based access control (RBAC).
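The credentials Secret and RBAC setup described above can be sketched as follows. The Secret keys `MAPR_CLUSTER_USER` and `MAPR_CLUSTER_PASSWORD` are assumptions, and the ClusterRole rules are modeled on what a generic CSI external provisioner typically needs, not on the manifests shipped with the Data Fabric provisioner; the service account, namespace, and object names are hypothetical.

```python
import json


def provisioner_secret(name, namespace, user, password):
    """Secret carrying Data Fabric admin credentials for the provisioner (hypothetical keys)."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # stringData lets the API server base64-encode the values on write.
        "stringData": {"MAPR_CLUSTER_USER": user, "MAPR_CLUSTER_PASSWORD": password},
    }


def provisioner_cluster_role(name):
    """Minimal rules a CSI external provisioner typically needs to create and delete PVs."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": name},
        "rules": [
            {"apiGroups": [""], "resources": ["persistentvolumes"],
             "verbs": ["get", "list", "watch", "create", "delete"]},
            {"apiGroups": [""], "resources": ["persistentvolumeclaims"],
             "verbs": ["get", "list", "watch", "update"]},
            {"apiGroups": ["storage.k8s.io"], "resources": ["storageclasses"],
             "verbs": ["get", "list", "watch"]},
            {"apiGroups": [""], "resources": ["events"],
             "verbs": ["list", "watch", "create", "update", "patch"]},
            # Lets the provisioner read the credentials Secret named in the StorageClass.
            {"apiGroups": [""], "resources": ["secrets"], "verbs": ["get"]},
        ],
    }


def cluster_role_binding(name, role_name, service_account, namespace):
    """Grant the ClusterRole to the provisioner's service account."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},
        "subjects": [{"kind": "ServiceAccount", "name": service_account, "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "ClusterRole", "name": role_name},
    }


if __name__ == "__main__":
    items = [
        provisioner_secret("datafabric-provisioner-secret", "datafabric-csi", "admin-user", "change-me"),
        provisioner_cluster_role("datafabric-csi-provisioner"),
        cluster_role_binding("datafabric-csi-provisioner", "datafabric-csi-provisioner",
                             "csi-provisioner-sa", "datafabric-csi"),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Keeping the administrative credentials in their own Secret, readable only by the provisioner's service account, means Pod authors can reference the StorageClass without ever seeing the Data Fabric admin password.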