Architecture and CDC

This section provides an overview of how CDC works.

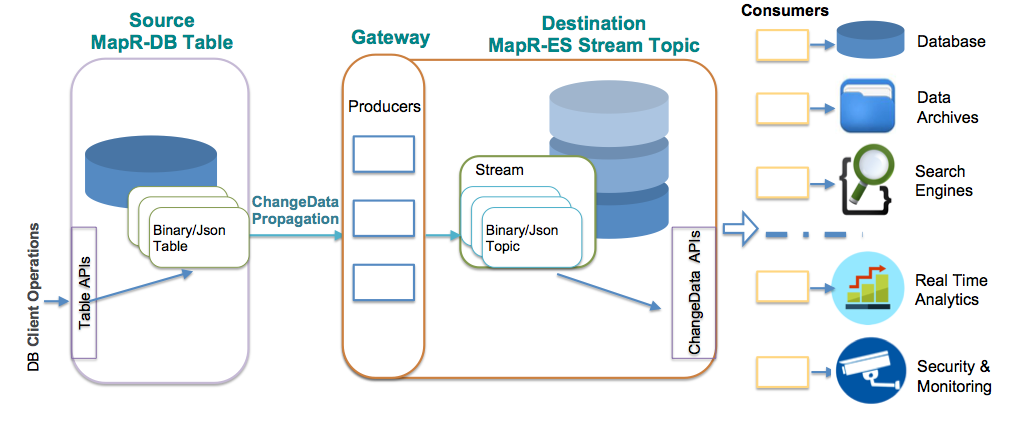

CDC uses log-based capture of changed data records. It propagates the changes from the source table through an internal Data Fabric gateway using replication remote procedure calls (RPCs), and produces them to one or more topics of an HPE Ezmeral Data Fabric Streams destination stream. Once the data reaches a topic, external applications can consume the changed data records. A consumer application registers the CDC Deserializer as its record value deserializer and pulls the topic data by using the Kafka API. The data changes can then be read from each ChangeDataRecord through the OJAI ChangeData APIs. Consumers can be databases, data archives, search engines, or applications that perform real-time analytics, security, or monitoring.
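A consumer configures its Kafka consumer so that the record value deserializer is the CDC Deserializer. The minimal sketch below only builds the consumer properties with `java.util.Properties`. It assumes the deserializer class is `com.mapr.db.cdc.ChangeDataRecordDeserializer` and uses a hypothetical group ID and topic path, so verify the class name against the client libraries for your release. In a real application you would pass these properties to a `KafkaConsumer`, subscribe to the topic, and read each `ChangeDataRecord` through the OJAI ChangeData APIs.

```java
import java.util.Properties;

// Hypothetical helper that builds consumer properties for reading CDC records
// from an HPE Ezmeral Data Fabric Streams topic through the Kafka API.
class CdcConsumerConfig {

    // Assumed fully qualified name of the CDC Deserializer; verify for your release.
    static final String CDC_DESERIALIZER = "com.mapr.db.cdc.ChangeDataRecordDeserializer";

    // Standard Kafka byte-array deserializer, used here for the record key.
    static final String KEY_DESERIALIZER =
            "org.apache.kafka.common.serialization.ByteArrayDeserializer";

    static Properties build(String groupId) {
        Properties props = new Properties();
        props.setProperty("group.id", groupId);
        props.setProperty("key.deserializer", KEY_DESERIALIZER);
        // Register the CDC Deserializer as the record value deserializer.
        props.setProperty("value.deserializer", CDC_DESERIALIZER);
        // Start from the oldest available change records when no offset is committed.
        props.setProperty("auto.offset.reset", "earliest");
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("cdc-consumer-group");
        // Topics are addressed as <stream path>:<topic name>; this path is hypothetical.
        String topic = "/mapr/my.cluster.com/streams/cdcStream:cdcTopic";
        System.out.println("Subscribe to " + topic
                + " using " + props.getProperty("value.deserializer"));
    }
}
```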

How are the Change Data Records Propagated?

The propagation is accomplished by setting up a change log that establishes a relationship between the source table and the destination stream. The change log can be set up by using the Control System, maprcli, or REST. Each change log can be paused, resumed, and removed. See Administering Change Data Capture and the maprcli table changelog command for more information.
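The change log lifecycle maps onto `maprcli table changelog` subcommands. This sketch assembles argument lists for adding, pausing, resuming, and removing a change log, using only the `-path` (source table) and `-changelog` (`<stream>:<topic>`) options. The class name and the table and stream paths are hypothetical; confirm the exact options against the maprcli table changelog reference before running the commands.

```java
import java.util.List;

// Hypothetical helper that assembles maprcli argument lists for managing a change log.
// Only -path and -changelog are used; check the maprcli reference for other options.
class ChangelogCommands {

    private static final List<String> ACTIONS = List.of("add", "pause", "resume", "remove");

    // Builds: maprcli table changelog <action> -path <table> -changelog <stream>:<topic>
    static List<String> command(String action, String tablePath, String streamPath, String topic) {
        if (!ACTIONS.contains(action)) {
            throw new IllegalArgumentException("unsupported action: " + action);
        }
        return List.of("maprcli", "table", "changelog", action,
                "-path", tablePath,
                "-changelog", streamPath + ":" + topic);
    }

    public static void main(String[] args) {
        String table = "/mapr/my.cluster.com/tables/orders";      // hypothetical table path
        String stream = "/mapr/my.cluster.com/streams/cdcStream"; // hypothetical stream path
        for (String action : ACTIONS) {
            System.out.println(String.join(" ", command(action, table, stream, "orders")));
        }
    }
}
```

Building each command as a list rather than a single string lets you pass it directly to `ProcessBuilder` without shell-quoting issues.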

As data is changed on the source table (through create, update, or delete operations), each changed data record is propagated (replicated) to an internal Data Fabric gateway. Data is produced to the stream topic in the same order in which the changed data records are replicated to the gateway. The data flow is one way: from an HPE Ezmeral Data Fabric Database source table to one or more HPE Ezmeral Data Fabric Streams destination stream topics.
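The ordering guarantee can be pictured as a first-in, first-out hand-off. The simplified model below, whose class and method names are hypothetical, queues changed data records at a stand-in for the gateway in replication order and appends them to an append-only list that represents the topic, so the topic order always matches the replication order. It illustrates the guarantee only and is not how the gateway is implemented.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical model of the one-way path: source table -> gateway -> destination topic.
class CdcOrderingModel {

    // A changed data record: the operation and the row key it affects.
    record Change(String op, String rowKey) {}

    // Records arrive at the gateway in replication order (FIFO).
    private final Deque<Change> gatewayQueue = new ArrayDeque<>();
    // The destination topic is modeled as an append-only log.
    private final List<Change> topic = new ArrayList<>();

    // Source table side: replicate one changed data record to the gateway.
    void replicate(Change change) {
        gatewayQueue.addLast(change);
    }

    // Gateway side: produce records to the topic in the order they were received.
    void drainToTopic() {
        while (!gatewayQueue.isEmpty()) {
            topic.add(gatewayQueue.pollFirst());
        }
    }

    List<Change> topic() {
        return List.copyOf(topic);
    }

    public static void main(String[] args) {
        CdcOrderingModel model = new CdcOrderingModel();
        model.replicate(new Change("insert", "r1"));
        model.replicate(new Change("update", "r1"));
        model.replicate(new Change("delete", "r1"));
        model.drainToTopic();
        model.topic().forEach(c -> System.out.println(c.op() + " " + c.rowKey()));
    }
}
```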

What is the Impact of using Columns/Column Families?

If a change log propagates only specific column families or columns from a binary source table and a row is deleted, the destination stream topic shows deletion events only for those column families or columns. If a change log propagates a specific column and its entire column family is deleted, the destination stream topic shows a deletion event only for that column.

For example, consider a binary source table with the column families fam0, fam1, and fam2. When the change log propagates the entire table:

- If you delete fam0, fam1, and fam2, the change data events are "delete fam0", "delete fam1", and "delete fam2".

- If you delete the row, the change data event is "delete row".

When the change log propagates only the column fam1:col1 and the column family fam2:

- If you delete fam0, fam1, and fam2, the change data events are "delete fam1:col1" and "delete fam2".

- If you delete the row, the change data events are "delete fam1:col1" and "delete fam2".
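The deletion rules can be expressed as a small function. This hypothetical model assumes a change log is described by the list of column families (`fam2`) or `family:column` qualifiers (`fam1:col1`) it propagates, with an empty list meaning the entire table. It reproduces the events for the fam0, fam1, and fam2 examples: a change log for the whole table, and one that propagates only fam1:col1 and fam2.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of which deletion events reach the destination topic.
// A change log is described by the scopes it propagates; an empty list means the entire table.
class ColumnDeletionModel {

    // Extracts the column family from a scope such as "fam1:col1" or "fam2".
    static String familyOf(String scope) {
        int colon = scope.indexOf(':');
        return colon < 0 ? scope : scope.substring(0, colon);
    }

    // Events produced when an entire row is deleted.
    static List<String> onRowDelete(List<String> propagated) {
        if (propagated.isEmpty()) {
            return List.of("delete row");
        }
        // Only the propagated column families and columns are reported.
        return propagated.stream().map(scope -> "delete " + scope).toList();
    }

    // Events produced when whole column families are deleted.
    static List<String> onFamilyDelete(List<String> propagated, List<String> deletedFamilies) {
        List<String> events = new ArrayList<>();
        if (propagated.isEmpty()) {
            for (String family : deletedFamilies) {
                events.add("delete " + family);
            }
            return events;
        }
        for (String scope : propagated) {
            // A propagated family or column is reported when its family is deleted.
            if (deletedFamilies.contains(familyOf(scope))) {
                events.add("delete " + scope);
            }
        }
        return events;
    }

    public static void main(String[] args) {
        List<String> families = List.of("fam0", "fam1", "fam2");
        List<String> subset = List.of("fam1:col1", "fam2");
        System.out.println(onFamilyDelete(List.of(), families)); // entire table
        System.out.println(onRowDelete(List.of()));              // entire table
        System.out.println(onFamilyDelete(subset, families));    // fam1:col1 and fam2 only
        System.out.println(onRowDelete(subset));                 // fam1:col1 and fam2 only
    }
}
```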

Where is the Destination Stream Set Up?

The destination HPE Ezmeral Data Fabric Streams stream can be either on the same cluster as the HPE Ezmeral Data Fabric Database source table or on a remote Data Fabric cluster. Where and how you set up destination streams depends on why you are using CDC.

If you are propagating changed data from a source table on a source cluster to a destination stream topic on a remote destination cluster, you must set up a gateway. To set up a gateway, install it on the destination cluster and specify the gateway node(s) on the source cluster. See Administering Data Fabric Gateways and Configuring Gateways for Table and Stream Replication.
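Whether you must set up a gateway depends on where the destination stream lives relative to the source table. The sketch below assumes a destination stream on another cluster is addressed by a global-namespace path of the form `/mapr/<cluster-name>/...` and treats any other path as local to the source cluster. `GatewayCheck` and its methods are hypothetical and only encode the rule stated above.

```java
// Hypothetical check for whether a CDC setup requires gateway configuration.
// Assumes a stream on another cluster is addressed as /mapr/<cluster-name>/...
class GatewayCheck {

    private static final String GLOBAL_PREFIX = "/mapr/";

    // Returns the cluster named in a /mapr/<cluster>/... path, or localCluster for other paths.
    static String clusterOf(String streamPath, String localCluster) {
        if (!streamPath.startsWith(GLOBAL_PREFIX)) {
            return localCluster;
        }
        int start = GLOBAL_PREFIX.length();
        int end = streamPath.indexOf('/', start);
        return end < 0 ? streamPath.substring(start) : streamPath.substring(start, end);
    }

    // Gateway setup is required when the destination stream is on a remote cluster.
    static boolean needsGatewaySetup(String sourceCluster, String destinationStreamPath) {
        return !clusterOf(destinationStreamPath, sourceCluster).equals(sourceCluster);
    }

    public static void main(String[] args) {
        String source = "src.cluster.com"; // hypothetical cluster names and paths
        System.out.println(needsGatewaySetup(source, "/mapr/src.cluster.com/streams/cdc"));
        System.out.println(needsGatewaySetup(source, "/mapr/dst.cluster.com/streams/cdc"));
    }
}
```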

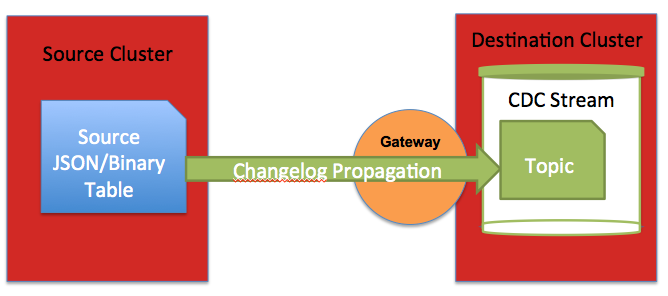

The diagram above shows a simple CDC data model: one source table propagating to one destination topic on one stream. Because the destination stream topic in this scenario is on a remote destination cluster, you must set up and configure a gateway.