Authentication Mechanism for Releases 6.1.x and 6.2.0

Describes limitations of the authentication mechanism used in Releases 6.1.x and 6.2.0.

Overview of the MapR-SASL Authentication for Releases 6.1.x and 6.2.0

Hadoop and the ecosystem components supported by the HPE Ezmeral Data Fabric use tickets and MapR-SASL to authenticate to the Data Fabric core platform. ZooKeeper and most ecosystem components, such as Drill, Hive, Oozie, and Spark, use MapR-SASL to enable client and server communication.

Description of the Authentication Mechanism

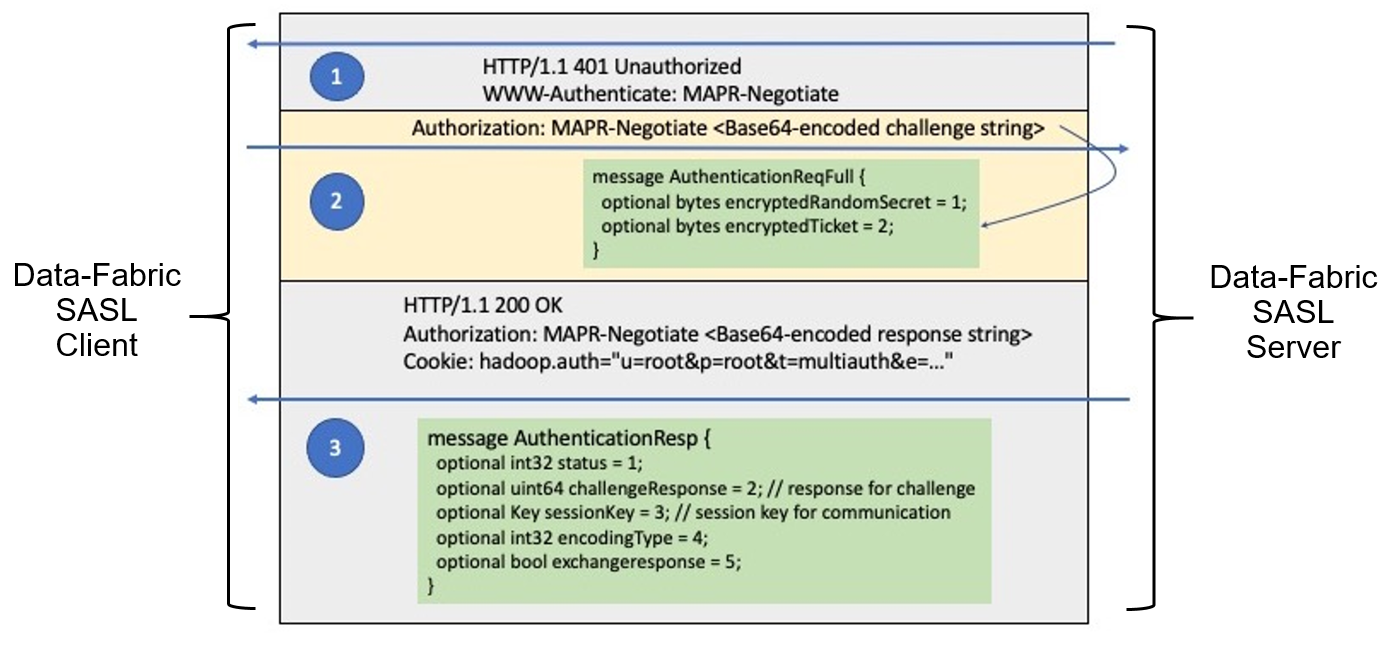

The MapR-SASL protocol, illustrated here, follows the RFC 7235 standard for HTTP/1.1 authentication:

Step 1

When a client sends a server a request for a resource, either without a token or with an invalid (for example, expired) token, the server returns an HTTP/1.1 401 Unauthorized response to the client. The WWW-Authenticate header is set to MAPR-Negotiate, indicating that MapR-SASL is supported.

If other authentication mechanisms are supported, multiple WWW-Authenticate headers are returned, one for each mechanism.
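The negotiation in Step 1 can be sketched as follows. This is a minimal illustration, not the product's implementation: the function names are hypothetical, tokens are modeled as plain strings in a set, and the parser assumes one challenge per WWW-Authenticate header, whereas RFC 7235 also allows several comma-separated challenges in a single header.

```python
from typing import Iterable, List, Optional, Set, Tuple

Header = Tuple[str, str]


def handle_request(token: Optional[str], valid_tokens: Set[str],
                   mechanisms: Iterable[str] = ("MAPR-Negotiate",)):
    """Hypothetical server side of Step 1: return (status, headers)."""
    if token is not None and token in valid_tokens:
        return 200, []
    # Missing or invalid (for example, expired) token: 401 Unauthorized,
    # with one WWW-Authenticate header per supported mechanism.
    return 401, [("WWW-Authenticate", m) for m in mechanisms]


def offered_schemes(headers: Iterable[Header]) -> List[str]:
    # Header names are case-insensitive in HTTP. Simplification: take the
    # first token of each WWW-Authenticate value as its auth-scheme.
    return [value.split()[0] for name, value in headers
            if name.lower() == "www-authenticate" and value.strip()]


def supports_mapr_sasl(headers: Iterable[Header]) -> bool:
    # Auth-scheme names are compared case-insensitively (RFC 7235).
    return any(s.lower() == "mapr-negotiate" for s in offered_schemes(headers))
```

A client that receives a 401 would call supports_mapr_sasl on the response headers and, if it returns True, hand off to the MapR-SASL authentication handler described in Step 2.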

Step 2

The SASL client invokes the MapR-SASL authentication handler, which in turn constructs an AuthenticationReqFull request message containing the encrypted client ticket and a random secret encrypted with the user key. Because the SASL client has no mechanism to specify which ticket to use or which cluster the authentication request should be sent to, the MapR-SASL authentication handler finds the default cluster in ${MAPR_HOME}/conf/mapr-clusters.conf. The authentication handler then finds the ticket for the default cluster in one of the designated ticket locations, which defaults to /tmp/maprticket_<uid>. It then serializes the AuthenticationReqFull request message, encodes it in Base64 format, and sends it to the CLDB of the default cluster over HTTPS in the Authorization header.
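The client-side lookups in Step 2 can be sketched like this. It is a simplified sketch under stated assumptions: it treats the first non-blank line of mapr-clusters.conf as the default cluster and its first field as the cluster name, it models only the default ticket path (not the other designated locations), and the "MAPR-Negotiate <base64>" layout of the Authorization value is an assumption based on RFC 7235 credentials syntax. The serialized AuthenticationReqFull bytes are passed in opaquely because their encoding is not described here; all function names are hypothetical.

```python
import base64
import os
from typing import Optional


def clusters_conf_path(mapr_home: str) -> str:
    # ${MAPR_HOME}/conf/mapr-clusters.conf, as described in Step 2.
    return os.path.join(mapr_home, "conf", "mapr-clusters.conf")


def default_cluster(conf_text: str) -> str:
    """Return the default cluster name from mapr-clusters.conf contents.

    Assumption: the first non-blank, non-comment line is the default
    cluster, and its first whitespace-separated field is the cluster name.
    """
    for raw in conf_text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#"):
            return line.split()[0]
    raise ValueError("mapr-clusters.conf has no cluster entries")


def default_ticket_path(uid: Optional[int] = None) -> str:
    # Default ticket location: /tmp/maprticket_<uid>. Other designated
    # ticket locations are not modeled in this sketch.
    if uid is None:
        uid = os.getuid()  # available on POSIX systems only
    return f"/tmp/maprticket_{uid}"


def authorization_value(serialized_request: bytes) -> str:
    # Base64-encode the serialized AuthenticationReqFull message.
    # Assumption: "<scheme> <base64>" layout, following RFC 7235
    # credentials syntax; the actual wire format is not specified above.
    encoded = base64.b64encode(serialized_request).decode("ascii")
    return f"MAPR-Negotiate {encoded}"
```

On a configured node, the default cluster could be read with `default_cluster(open(clusters_conf_path(os.environ["MAPR_HOME"])).read())`. Note that nothing in these inputs lets the caller name a different cluster, which is the root of the limitations listed below.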

Step 3

Upon receipt of the AuthenticationReqFull request message, the CLDB decrypts the client ticket using the server key and validates the ticket to ensure that it is not on the deny list and has not expired. The CLDB then extracts the user key from the ticket to decrypt the random secret. It adds 1 to the challenge (the random secret) and returns the response to the MapR-SASL authentication handler over HTTPS in the Authorization header.

Upon successful authentication, the MapR-SASL authentication handler returns the token to the client as a cookie, because this is a Hadoop client. Other types of clients can receive the token in different formats.
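The challenge-response in Steps 2 and 3 can be modeled end to end as shown here. This is a toy model only: toy_cipher is a SHA-256 XOR keystream that is neither secure nor the cipher the platform uses, the ticket is serialized as JSON, the deny list is modeled as a set of user names, and the reply to the client is encrypted with the user key, which is an assumption (the text above says only that 1 is added to the challenge). All names are hypothetical.

```python
import hashlib
import json
import os


def toy_cipher(key: bytes, data: bytes) -> bytes:
    """TOY ONLY: XOR with a SHA-256 keystream. Symmetric, so the same call
    encrypts and decrypts. Not secure and not the platform's cipher."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(b ^ k for b, k in zip(data, stream))


def make_ticket(server_key: bytes, user: str, user_key: bytes, expiry: float) -> bytes:
    # The ticket carries the user key and is encrypted with the server key.
    body = json.dumps({"user": user, "user_key": user_key.hex(), "expiry": expiry})
    return toy_cipher(server_key, body.encode())


def client_request(ticket: bytes, user_key: bytes):
    # Step 2: send the encrypted ticket plus a random secret encrypted
    # with the user key. The client keeps the plain secret for checking.
    secret = int.from_bytes(os.urandom(8), "big")
    return secret, {"ticket": ticket, "secret": toy_cipher(user_key, secret.to_bytes(8, "big"))}


def cldb_handle(req: dict, server_key: bytes, deny_list: set, now: float) -> bytes:
    # Step 3: decrypt the ticket with the server key. With the wrong key the
    # result is garbage, which shows up here as a parse failure.
    try:
        ticket = json.loads(toy_cipher(server_key, req["ticket"]))
    except ValueError:  # includes JSONDecodeError and UnicodeDecodeError
        raise PermissionError("ticket cannot be decrypted") from None
    if not isinstance(ticket, dict) or "user_key" not in ticket:
        raise PermissionError("ticket cannot be decrypted")
    if ticket["user"] in deny_list or ticket["expiry"] <= now:
        raise PermissionError("ticket is on the deny list or expired")
    user_key = bytes.fromhex(ticket["user_key"])
    secret = int.from_bytes(toy_cipher(user_key, req["secret"]), "big")
    # Add 1 to the challenge (wrapping at 64 bits) and encrypt the reply.
    reply = (secret + 1) % 2**64
    return toy_cipher(user_key, reply.to_bytes(8, "big"))


def client_verify(secret: int, reply: bytes, user_key: bytes) -> bool:
    # Only a CLDB holding the right server key could recover the user key,
    # so a correct secret + 1 shows the ticket was accepted.
    return int.from_bytes(toy_cipher(user_key, reply), "big") == (secret + 1) % 2**64
```

The design point the model captures is that the CLDB never receives the user key directly: it learns it only by decrypting the ticket with its own server key, and proves it did so by returning the incremented secret.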

Limitations of the MapR-SASL Implementation Used in Releases 6.1.x and 6.2.0

- Keys used to decrypt tickets in the core platform are cluster-specific. However, the upper layers have no concept of clusters and no way to specify which cluster a request should be sent to. Therefore, this MapR-SASL implementation works only for the default cluster. Applications can use the client REST API to specify the destination cluster and work around the default-cluster limitation, but other problems remain.

- Even if the MapR-SASL implementation were made cluster-aware, single sign-on would still not work, because MapR-SASL is not aware of trust relationships. It requires a ticket for the destination cluster even when a user-level trust relationship has been established between the source and destination clusters. As a result, an application must not only know which cluster it is trying to contact but also acquire a ticket for every cluster that it needs to contact.

- If the CLDB node of a non-default cluster receives the request, authentication fails because the user ticket cannot be decrypted. Moreover, the CLDB node cannot tell why the decryption failed or which cluster's keys should have been used.

- Tickets can be encrypted using various keys, such as the CLDB key or the server key. However, nothing in the AuthenticationReqFull request message indicates which key to use to decrypt the request, so the CLDB always assumes that tickets are encrypted using the server key. This limits the ability of MapR-SASL to handle other kinds of tickets, such as OIDC tickets, in future enhancements.

- Data Fabric ecosystem components often do not know which cluster a particular request will be forwarded to, because this is determined by the lower layers. As a result, the components typically use the ticket for the local cluster. That ticket is encrypted with a key that is available only to the local cluster, not to remote clusters, so MapR-SASL authentication fails when the request reaches a remote cluster.

- ODBC and JDBC secure connections work only for the local cluster.

- The implementation of various Data Fabric commands relies on capabilities that MapR-SASL does not support. For example, if the maprcli service list command is issued against a remote cluster, maprcli forwards the request to the ZooKeeper node on the remote cluster, presenting the local cluster's ticket as its authentication credentials. The remote ZooKeeper node fails the request because it cannot decrypt the ticket, which is encrypted with a key that is not available to the remote cluster. Although this particular issue can be fixed easily by selecting the ticket for the remote cluster, doing so is not a long-term solution. It is equivalent to requiring a person to acquire a separate passport for every foreign country they want to visit.

These issues pointed to the need for an architecture in which remote clusters can read local tickets with proper authorization. This is akin to the real-world scenario in which a person needs to acquire a passport only from their home country and can then use that same passport to travel to foreign countries that have a pre-established relationship with the home country.

For solutions to these issues, see Authentication Enhancements for Release 7.0.0.