Best Practices for Running a Highly Available Cluster

Lists high availability cluster replication types, and the best practices for running such a cluster.

Data Fabric runs a wide variety of concurrent applications in a highly available fashion. Node failures do not have cluster-wide impact, and activities on other nodes in the cluster can continue normally. In parallel, Data Fabric components detect failures and automatically recover from them. During the recovery process, clients may experience latency, the duration of which depends on the nature of the failure.

Node Shutdown Instances

The cause of a service failure can be one of the following:

| Planned shutdown | A planned/controlled failure. In this case, Data Fabric is informed that a file server will be stopped. Data Fabric services use this information to improve recovery behavior. |

| Unexpected shutdown | Data Fabric services (file system, NFS server etc.,) are stopped. However, the host operating system continues to run and failure detection is fast. |

| Hard unplanned shutdown | A power off, network down, or some other kind of unplanned stop. A node is stopped in a way that it is no longer reachable. Packets sent to this node do not get an error response and failure is detected through network layer’s timeout mechanism. This results in longer failure detection times. |

In all of these instances, the recovery process typically involves detecting that a node is unreachable, and contacting another available node for the same piece of information (either for reads, writes, or administrative operations).

How file system and Associated Services Work

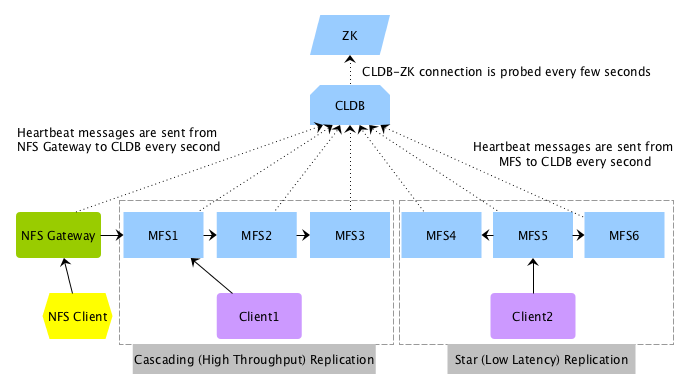

Let’s review how file system and associated services typically work, using the following illustration.

High-throughput or Cascading Replication Type: As shown in the illustration, the client, Client1, writes to a Data Fabric filesystem, MFS1, which in turn talks to MFS2, which in turn talks to MFS3 for cascading (high throughput) replication. The replication is inline and synchronous, which means MFS1 replies to the client only after it receives a response from MFS2. MFS2, in turn, only responds to MFS1 after MFS3 has replied to it. Client1 can read from any MFS, but write only to MFS1.

Low-latency or Star Replication Type: As shown in the illustration, the client, Client2, writes to MFS5. This illustration shows an example of star (low latency) replication where MFS5 replicates to both MFS4 and MFS6 in parallel. Again, the replication is inline and synchronous, which means that MFS5 responds to Client2 only after it has received responses from both MFS4 and MFS6.

Recommended Settings for Running a Cluster with Low Latency and Fast Failover Characteristics

A well designed cluster provides automatic failover for continuity throughout the stack. For an example of a large, high-availability Enterprise Edition cluster, see Example Cluster Designs. On a large cluster designed for high availability, services should be assigned according to the service layout guidelines. For more information, see Service Layout Guidelines for Large Clusters. In general, services, specifically CLDB and ZooKeeper, should be installed on separate nodes to prevent the failure of multiple services at the same time and to enable the cluster to recover quickly.