Registering HPE Ezmeral Data Fabric on Kubernetes as Tenant Storage

This procedure describes how to register HPE Ezmeral Data Fabric on Kubernetes as tenant storage.

Prerequisites

- The HPE Ezmeral Runtime Enterprise (ERE) deployment must not already have tenant storage configured. In the ERE Web UI, make sure that Tenant Storage is set to None on the Settings screen.

- Make sure that the HPE Ezmeral Data Fabric on Kubernetes cluster does not have pre-existing Data Fabric volumes named in the tenant-<id> format. For more information, see Administering Volumes. You can also run the following command inside the admincli-0 pod:

maprcli volume list -columns volumename | grep tenant

If any such Data Fabric volume exists, you can conclude that the Data Fabric cluster is already registered as tenant storage. Contact Hewlett Packard Enterprise Support for technical assistance.

- An HPE Ezmeral Data Fabric on Kubernetes cluster must have been created. See the HPE Ezmeral Data Fabric Documentation for more details on HPE Ezmeral Data Fabric on Kubernetes clusters.

- Before you register HPE Ezmeral Data Fabric on Kubernetes, you must have created the Data Fabric cluster by completing the procedure Creating a New Data Fabric Cluster up to Step 5: Summary.

- This procedure must be performed by the user who installed HPE Ezmeral Runtime Enterprise.

- This procedure may require 10 minutes or more per EPIC or Kubernetes host (Controller, Shadow Controller, Arbiter, Master, Worker, and so on).

- This procedure must be performed on the primary Controller host.

If Platform HA is enabled, check the Controllers page in the ERE Web UI to confirm which Controller is the primary.
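The volume check in the prerequisites above can be scripted so that it gives a clear pass/fail answer. The documented grep tenant also matches unrelated names that merely contain "tenant", so this minimal POSIX sh sketch uses a stricter tenant-<number> pattern. It is not an HPE tool: the function names find_tenant_volumes and check_no_tenant_volumes, the strict pattern, and the <df_namespace> placeholder are assumptions. It also assumes that maprcli prints one volume name per line in the first column after a header row.

```shell
#!/bin/sh
# Hypothetical pre-registration check (not an HPE tool).
# Input on stdin: output of `maprcli volume list -columns volumename`.

# Print volume names that match tenant-<number>; always returns 0.
find_tenant_volumes() {
  # Keep only the first column (drops trailing whitespace); the
  # "volumename" header row never matches the pattern.
  awk '{ print $1 }' | grep -E '^tenant-[0-9]+$' || true
}

# Return 0 when no tenant-<number> volume exists, 1 otherwise.
check_no_tenant_volumes() {
  _found=$(find_tenant_volumes)
  if [ -n "$_found" ]; then
    echo "Existing tenant volumes found; contact HPE Support before registering:" >&2
    printf '%s\n' "$_found" >&2
    return 1
  fi
  echo "No tenant-<id> volumes found."
  return 0
}

# Example (assumption: <df_namespace> is the namespace of the admincli-0 pod):
#   kubectl exec -n <df_namespace> admincli-0 -- \
#     maprcli volume list -columns volumename | check_no_tenant_volumes
```

A non-zero exit status from check_no_tenant_volumes corresponds to the case in which you should stop and contact Hewlett Packard Enterprise Support.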

CAUTION: You will not be able to delete this HPE Ezmeral Data Fabric on Kubernetes cluster after registration is complete.

About this task

HPE Ezmeral Runtime Enterprise can connect to multiple Data Fabric storage deployments; however, only one Data Fabric deployment can be registered as tenant storage.

- If you have an HPE Ezmeral Data Fabric on Kubernetes cluster outside HPE Ezmeral Runtime Enterprise and want to configure it as tenant storage, continue with this procedure.

- If you have already selected another Data Fabric instance for tenant/persistent storage, do not proceed with this procedure. Contact Hewlett Packard Enterprise Support if you want to use a different Data Fabric instance as tenant storage.

This procedure may require 10 minutes or more per EPIC or Kubernetes host (Controller, Shadow Controller, Arbiter, Master, Worker, and so on), because the registration procedure configures and deploys Data Fabric client software on each host.

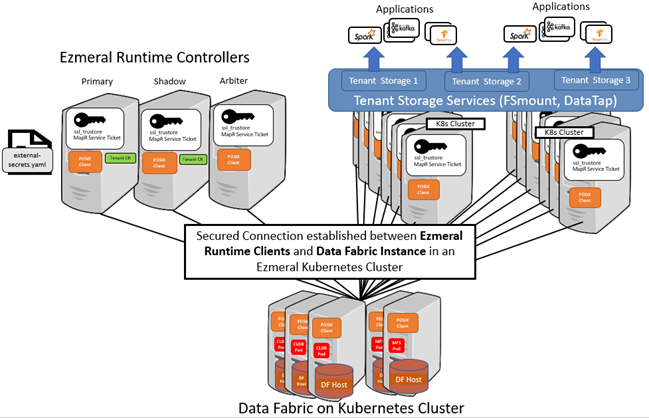

After Data Fabric registration is completed, the configuration will look as follows:

Procedure

-

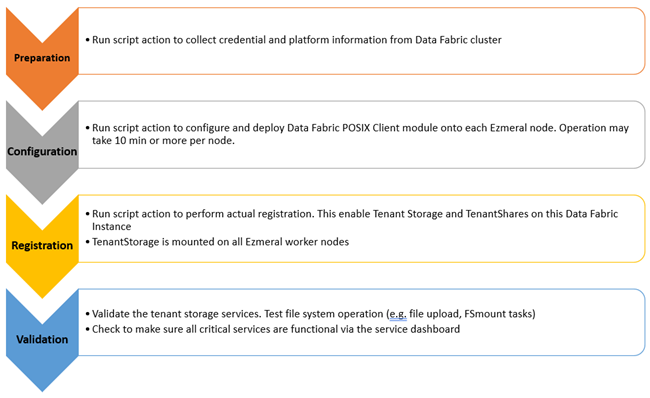

Preparation:

The

dftenant-manifestis needed for cluster registration (next section).- Proceed to Registration.

- Configuration:

Deploy a Data Fabric client on all hosts by running the following command on the primary Controller host:

LOG_FILE_PATH=/tmp/<log_file> MASTER_NODE_IP="<Kubernetes_Master_Node_IP_Address>" /opt/bluedata/bundles/hpe-cp-*/startscript.sh --action configure_dftenants

The ext_configure_dftenants action deploys HPE Ezmeral Data Fabric client modules, such as the POSIX Client, on HPE Ezmeral Runtime Enterprise hosts.
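If you run the documented actions more than once (for example, on a test deployment), a small wrapper can catch a mistyped action name or master node IP before startscript.sh starts. The following is a minimal POSIX sh sketch, not an HPE-provided tool: the function names run_dftenant_action and is_ipv4, the DRY_RUN switch, and the IPv4-only address check are assumptions. Only the LOG_FILE_PATH and MASTER_NODE_IP variables, the script path, and the configure_dftenants and register_dftenants action names come from this procedure.

```shell
#!/bin/sh
# Hypothetical wrapper (not part of HPE Ezmeral Runtime Enterprise).
STARTSCRIPT_GLOB='/opt/bluedata/bundles/hpe-cp-*/startscript.sh'

# Return 0 if $1 is a dotted-quad IPv4 address with every octet <= 255.
is_ipv4() {
  printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$' || return 1
  _saved_ifs=$IFS
  IFS=.
  set -- $1                 # split the address into its four octets
  IFS=$_saved_ifs
  for _octet in "$@"; do
    [ "$_octet" -le 255 ] || return 1
  done
  return 0
}

# run_dftenant_action ACTION LOG_FILE MASTER_IP
# ACTION must be configure_dftenants or register_dftenants.
# With DRY_RUN set, print the command line instead of running it.
run_dftenant_action() {
  _action=$1 _log_file=$2 _master_ip=$3
  case $_action in
    configure_dftenants|register_dftenants) ;;
    *) echo "unsupported action: $_action" >&2; return 2 ;;
  esac
  if ! is_ipv4 "$_master_ip"; then
    echo "invalid MASTER_NODE_IP: $_master_ip" >&2
    return 2
  fi
  if [ -n "${DRY_RUN:-}" ]; then
    printf 'LOG_FILE_PATH=/tmp/%s MASTER_NODE_IP="%s" %s --action %s\n' \
      "$_log_file" "$_master_ip" "$STARTSCRIPT_GLOB" "$_action"
    return 0
  fi
  # Expand the hpe-cp-* glob and insist on exactly one executable match.
  set -- $STARTSCRIPT_GLOB
  if [ "$#" -ne 1 ] || [ ! -x "$1" ]; then
    echo "expected exactly one startscript.sh, found: $*" >&2
    return 1
  fi
  LOG_FILE_PATH="/tmp/$_log_file" MASTER_NODE_IP="$_master_ip" "$1" --action "$_action"
}

# Example on the primary Controller host (10.0.0.5 is a placeholder IP):
#   DRY_RUN=1 run_dftenant_action configure_dftenants dftenants.log 10.0.0.5
```

The dry run prints the same command line as documented above, so you can compare the two before running it for real. The wrapper refuses to run when the hpe-cp-* glob matches no bundle or several bundles, rather than guessing which one to use.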

- Registration:

To complete the registration procedure, initiate the ext_register_dftenants action by using the following command:

LOG_FILE_PATH=/tmp/<log_file> MASTER_NODE_IP="<Kubernetes_Master_Node_IP_Address>" /opt/bluedata/bundles/hpe-cp-*/startscript.sh --action register_dftenants

When prompted, enter the Site Administrator username and password. HPE Ezmeral Runtime Enterprise uses these credentials for REST API access to its management module.

The results of the register_dftenants action are as follows:

- register_dftenants creates a volume on the HPE Ezmeral Data Fabric on Kubernetes cluster for each existing HPE Ezmeral Runtime Enterprise tenant. For each tenant created in the future, a tenant volume is created automatically on the same cluster. Each volume is named tenant-<ID>, where <ID> is the number of the tenant.

- The register_dftenants action reconfigures Tenant Storage to use the HPE Ezmeral Data Fabric on Kubernetes cluster for all future tenants. In addition:

  - TenantStorage and TenantShare are created on the Data Fabric cluster for all existing tenants.

  - For AI/ML tenants, the project repository is changed to use a Data Fabric volume. However, data in the existing project repository is not migrated.

  - Both TenantShare and TenantStorage are available to all tenants.

- The register_dftenants action also reconfigures the following services:

  - Nagios, to track Data Fabric client and mount services on the appropriate HPE Ezmeral Runtime Enterprise hosts.

  - WebHDFS, to enable browser-based file system operations such as upload and mkdir.
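To confirm that register_dftenants created a tenant-<ID> volume for every existing tenant, you could compare your tenant IDs against the volume list. This is a minimal POSIX sh sketch under stated assumptions: the function name missing_tenant_volumes is hypothetical, the tenant IDs are passed as arguments (how you collect them is not covered here), and the input is the output of maprcli volume list -columns volumename with volume names in the first column.

```shell
#!/bin/sh
# Hypothetical post-registration check (not an HPE tool).
# stdin: output of `maprcli volume list -columns volumename`
# args:  tenant IDs that should each have a tenant-<ID> volume
# Prints every ID that has no matching volume; always returns 0.
missing_tenant_volumes() {
  _volumes=$(awk '{ print $1 }')   # volume names, one per line
  for _id in "$@"; do
    # -x: whole-line match, -F: treat the name as a fixed string
    if ! printf '%s\n' "$_volumes" | grep -qxF "tenant-$_id"; then
      echo "$_id"
    fi
  done
  return 0
}

# Example (tenant IDs 1, 2, and 5 are placeholders):
#   maprcli volume list -columns volumename | missing_tenant_volumes 1 2 5
```

Empty output means that every listed tenant has its volume.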

The file systems on the per-tenant volumes on the Data Fabric cluster are mounted by the Data Fabric client on each node under:

/opt/bluedata/mapr/mnt/<cluster_name>/<ext_mapr_mount_dir>/<tenant-id>/

where:

- <cluster_name> is the name of the HPE Ezmeral Data Fabric on Kubernetes cluster.
- <ext_mapr_mount_dir> is specified in the ext-dftenant-manifest. See Step 1.c.
- <tenant-id> is the unique identifier for the relevant tenant.
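The mount path template above can be expanded and checked on an individual host with a short helper. This POSIX sh sketch is hypothetical (tenant_mount_path and check_tenant_mount are not HPE tools), and it treats the three placeholders as opaque strings. Note that the -d test only confirms that the directory exists; it does not prove that the Data Fabric POSIX client currently has the volume mounted.

```shell
#!/bin/sh
# Hypothetical helpers (not HPE tools).

# tenant_mount_path CLUSTER_NAME MOUNT_DIR TENANT_ID
# Expands the documented per-tenant mount path template.
tenant_mount_path() {
  if [ "$#" -ne 3 ] || [ -z "$1" ] || [ -z "$2" ] || [ -z "$3" ]; then
    echo "usage: tenant_mount_path CLUSTER_NAME MOUNT_DIR TENANT_ID" >&2
    return 2
  fi
  printf '/opt/bluedata/mapr/mnt/%s/%s/%s/\n' "$1" "$2" "$3"
}

# Report whether the tenant directory is visible on this host.
# Returns 0 if it exists, 1 if it is missing, 2 on bad arguments.
check_tenant_mount() {
  _path=$(tenant_mount_path "$@") || return 2
  if [ -d "$_path" ]; then
    echo "present: $_path"
  else
    echo "missing: $_path" >&2
    return 1
  fi
}

# Example: check_tenant_mount <cluster_name> <ext_mapr_mount_dir> <tenant-id>
```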

Kubernetes clusters created in HPE Ezmeral Runtime Enterprise after registration will have persistent volumes located in:

/opt/bluedata/mapr/mnt/<datafabric_cluster_name>/<ext_mapr_mount_dir>/

The registered HPE Ezmeral Data Fabric on Kubernetes cluster backs the Storage Classes of Kubernetes Compute clusters that are created in HPE Ezmeral Runtime Enterprise after registration. The registration procedure does not modify the Storage Classes of Compute clusters that existed before the registration.

- Validation:

To confirm that registration succeeded, check the following:

  - Check the output and/or logs of the ext_configure_dftenants and ext_register_dftenants actions.

  - In the HPE Ezmeral Runtime Enterprise Web UI, view the Tenant Storage tab on the System Settings page, and check that the displayed information is accurate for the HPE Ezmeral Data Fabric on Kubernetes cluster.

-

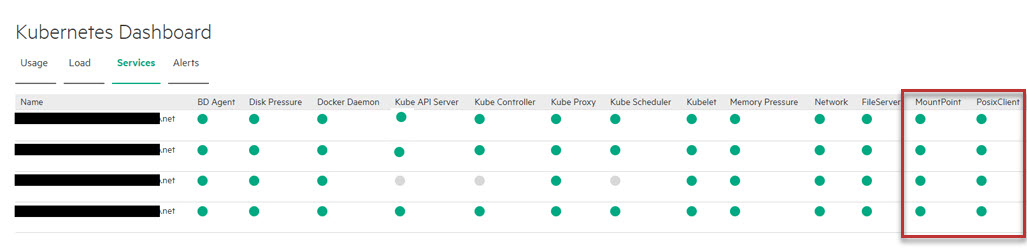

On the HPE Ezmeral Runtime Enterprise, view the

Kubernetes and EPIC

Dashboards, and check that the POSIX Client and Mount Path services on

all hosts are in normal state.

  - In the HPE Ezmeral Runtime Enterprise Web UI, verify that you can browse Tenant Storage for an existing tenant. Optionally, upload a file to a directory under Tenant Storage and then read the uploaded file. See Uploading and Downloading Files for more details.

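Checking the action logs, the first validation item above, can be partly automated by scanning the file named in LOG_FILE_PATH. This POSIX sh sketch is hypothetical: the function name scan_dftenant_log is an assumption, and so is the idea that failure lines contain "error" or "fail" in some case, because the real log format is not documented in this procedure. Treat matches as leads to read in context, not as a verdict.

```shell
#!/bin/sh
# Hypothetical log triage helper (not part of HPE Ezmeral Runtime Enterprise).
# Assumption: failure lines contain "error" or "fail" (case-insensitive).

# scan_dftenant_log LOG_FILE
# Prints matching lines with line numbers and returns 1 if any are found,
# 0 if none are found, and 2 if the file cannot be read.
scan_dftenant_log() {
  if [ "$#" -ne 1 ] || [ ! -r "$1" ]; then
    echo "usage: scan_dftenant_log READABLE_LOG_FILE" >&2
    return 2
  fi
  if grep -inE 'error|fail' "$1"; then
    return 1
  fi
  echo "no error/fail lines in $1"
  return 0
}

# Example: scan_dftenant_log /tmp/<log_file>
```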

- Proceed to Step 7: Fine-Tuning the Cluster of the procedure Creating a New Data Fabric Cluster.