Connecting to Spark Operator from a KubeDirector Notebook Application

This topic describes how to submit Spark applications using the EZMLLib library on a KubeDirector notebook application.

The EZMLLib library includes the following API, which sets the configurations of your Spark applications:

from ezmllib.spark import submit, delete, logs

You can submit, delete, and check the logs of Spark applications using this API.
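A notebook cell can check whether the library is importable before using it. The following is a minimal sketch, assuming EZMLLib is installed only in the notebook environment; ezmllib_spark_available is a hypothetical helper name, and the module path ezmllib.spark follows the library name used in this topic.

```python
import importlib.util


def ezmllib_spark_available() -> bool:
    """Return True if the ezmllib.spark module can be found in this kernel.

    Hypothetical helper, not part of EZMLLib.
    """
    try:
        return importlib.util.find_spec("ezmllib.spark") is not None
    except ModuleNotFoundError:
        # Raised when the parent package (ezmllib) itself is not installed.
        return False


if ezmllib_spark_available():
    from ezmllib.spark import submit, delete, logs
else:
    print("ezmllib.spark not found; run this cell inside a KubeDirector notebook.")
```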

Submit Spark Applications

You can submit the Spark applications using two different

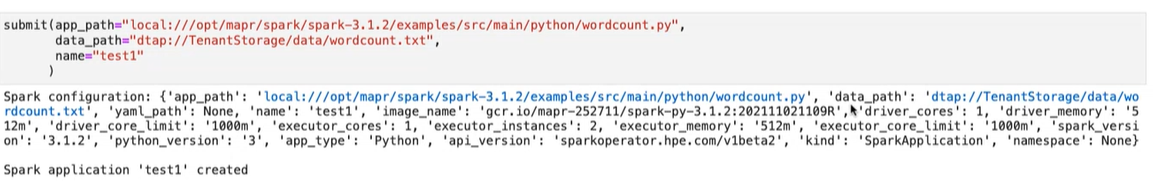

submit command:- Using Python files

- Run the following

command:

submit(app_path="<path-to-python-application-file>", data_path="<path-to-data-source-for-the-application>", name="<application-name>" ) - Using YAML files

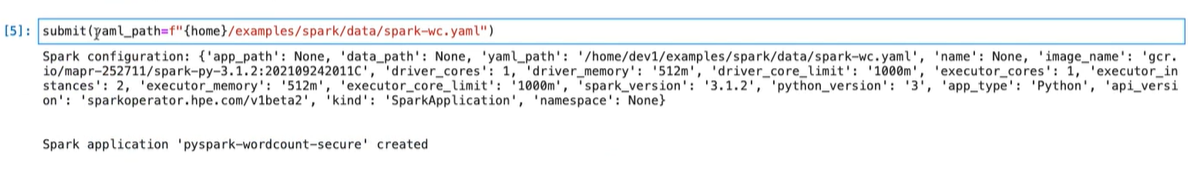

- Run the following

command:

submit(yaml_path=f"<path-to-yaml-file>")
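The two forms of submit take different keyword arguments, so a cell that accepts either kind of file can build the arguments first and then make a single call. The sketch below is an illustration only: build_submit_kwargs is a hypothetical helper, not part of EZMLLib, and choosing the form by file extension is an assumption. Only the keyword names app_path, data_path, name, and yaml_path come from the commands in this topic.

```python
from pathlib import Path


def build_submit_kwargs(path, data_path=None, name=None):
    """Build keyword arguments for submit() from a file path.

    Hypothetical helper (not part of EZMLLib). Assumes .yaml/.yml files use
    the yaml_path form and .py files use the app_path form.
    """
    suffix = Path(path).suffix.lower()
    if suffix in (".yaml", ".yml"):
        return {"yaml_path": str(path)}
    if suffix == ".py":
        kwargs = {"app_path": str(path)}
        # Pass optional values through only when the caller supplied them.
        if data_path is not None:
            kwargs["data_path"] = str(data_path)
        if name is not None:
            kwargs["name"] = name
        return kwargs
    raise ValueError(f"unsupported file type: {path!r}")


# Example file names are placeholders, not real paths.
kwargs = build_submit_kwargs("app.py", data_path="data.csv", name="demo")
print(kwargs)

# Inside a KubeDirector notebook, where ezmllib is available:
# from ezmllib.spark import submit
# submit(**kwargs)
```

Building the dictionary separately keeps the call to submit in one place and makes the chosen form easy to inspect before anything is sent to the cluster.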

Check Logs of the Spark Applications

After you submit the Spark applications, you can check both the event logs and the regular logs using logs.

- Check event logs
  - Run logs("<application-name>", events=True).
- Check regular logs
  - Run logs("<application-name>").
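When debugging, it can be convenient to request both kinds of logs in one step. The sketch below is illustrative: show_all_logs is a hypothetical helper, and it takes the logs function as a parameter so that the example can run outside a notebook with a stand-in. It relies only on the two call forms described in this section. Whether logs prints its output or returns it is not stated here, so the helper simply passes back whatever each call returns.

```python
def show_all_logs(app_name, logs_fn):
    """Request event logs, then regular logs, for one Spark application.

    Hypothetical helper. logs_fn is expected to behave like
    ezmllib.spark.logs, called as logs_fn(name, events=True) and logs_fn(name).
    """
    event_result = logs_fn(app_name, events=True)  # event logs first
    regular_result = logs_fn(app_name)             # then regular logs
    return event_result, regular_result


# Stand-in used only so this example runs without ezmllib.
def fake_logs(name, events=False):
    kind = "events" if events else "regular"
    return f"{kind} logs for {name}"


print(show_all_logs("demo-app", fake_logs))

# Inside a KubeDirector notebook:
# from ezmllib.spark import logs
# show_all_logs("<application-name>", logs)
```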

Delete Spark Applications

You can delete the Spark applications using

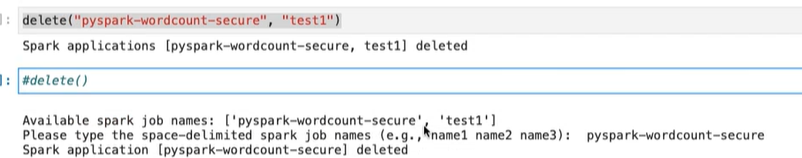

delete.- Delete multiple applications

- Run

delete("<application_1>", "<application_2>"). - List available applications and delete applications

- Run

delete()and enter the space-delimited Spark applications name.
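The interactive form of delete expects a space-delimited list of names. In a non-interactive cell, the same kind of list can be split in Python and passed as positional arguments, which matches the multiple-application form of delete. This is a sketch under assumptions: delete_by_names is a hypothetical helper, and delete_fn stands in for ezmllib.spark.delete so that the example runs without the library.

```python
def delete_by_names(names, delete_fn):
    """Split a space-delimited string of application names and delete them.

    Hypothetical helper. delete_fn is expected to accept application names
    as positional arguments, like delete("<application_1>", "<application_2>").
    """
    app_names = names.split()  # splits on any run of whitespace
    if not app_names:
        # Do not call delete_fn() with no arguments: as described in this
        # section, that form lists applications and prompts for names.
        return []
    delete_fn(*app_names)
    return app_names


# Stand-in that records what would be deleted; used only for illustration.
deleted = []
delete_by_names("app-one  app-two", lambda *apps: deleted.extend(apps))
print(deleted)

# Inside a KubeDirector notebook:
# from ezmllib.spark import delete
# delete_by_names("<application_1> <application_2>", delete)
```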

For example:

To learn more about using EZMLLib, see Notebook ezmllib Functions.