HDFS DataTap Cross-Realm Kerberos Authentication

Cross-realm Kerberos authentication allows the users of one Kerberos realm to access services that reside in a different Kerberos realm. To do this, both realms must share a key for the same principal, and both copies of the key must have the same key version number.

For example, to allow a user in REALM_A to access services in REALM_B, both realms must share a key for a principal named krbtgt/REALM_B@REALM_A. This key is unidirectional; for a user in REALM_B to access REALM_A, both realms must share a key for krbtgt/REALM_A@REALM_B.
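The naming convention can be captured in a few lines of Python. This is a minimal sketch for illustration only. The helper name cross_realm_principal is hypothetical and is not part of any Kerberos or DataTap tooling. It shows that the realm being accessed goes in the instance part of the krbtgt principal and the users' home realm goes after the @ sign, so each direction of trust needs its own principal.

```python
def cross_realm_principal(client_realm: str, service_realm: str) -> str:
    """Return the krbtgt principal that lets users of client_realm use services in service_realm."""
    if client_realm == service_realm:
        raise ValueError("a cross-realm principal needs two different realms")
    # The realm being accessed is the instance; the users' home realm follows the @.
    return f"krbtgt/{service_realm}@{client_realm}"


# Each direction of trust needs its own shared key.
print(cross_realm_principal("REALM_A", "REALM_B"))  # krbtgt/REALM_B@REALM_A
print(cross_realm_principal("REALM_B", "REALM_A"))  # krbtgt/REALM_A@REALM_B
```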

Most of the responsibilities of the remote KDC server can be offloaded to a local KDC that Kerberizes the compute clusters within a tenant, while the DataTap uses a KDC server specific to the enterprise datalake. The users of the cluster come from the existing enterprise central KDC. Assuming that the enterprise has a network DNS name of ENTERPRISE.COM, the three Kerberos realms could be named as follows:

- KDC Realm CORP.ENTERPRISE.COM: This central KDC realm manages the users who run jobs in the Hadoop compute clusters, for example user@CORP.ENTERPRISE.COM.

- KDC Realm CP.ENTERPRISE.COM: This local KDC realm Kerberizes the Hadoop compute clusters.

- KDC Realm DATALAKE.ENTERPRISE.COM: This KDC realm Kerberizes the remote HDFS file system accessed via a DataTap, for example dtap://remotedata.

In this example, the user user@CORP.ENTERPRISE.COM can run jobs in the compute cluster that belongs to the CP.ENTERPRISE.COM realm, and those jobs can access data residing in dtap://remotedata in the DATALAKE.ENTERPRISE.COM realm. This scenario requires one-way Kerberos trust relationships in which both DATALAKE.ENTERPRISE.COM and CP.ENTERPRISE.COM trust CORP.ENTERPRISE.COM. It also requires a one-way trust relationship in which DATALAKE.ENTERPRISE.COM trusts CP.ENTERPRISE.COM. More specifically:

- CP.ENTERPRISE.COM trusts CORP.ENTERPRISE.COM: The user user@CORP.ENTERPRISE.COM needs to be able to access services within the compute cluster in order to perform tasks such as submitting jobs to the compute cluster's YARN Resource Manager and writing the job history to the local HDFS.

- DATALAKE.ENTERPRISE.COM trusts CORP.ENTERPRISE.COM: The user user@CORP.ENTERPRISE.COM needs to be able to access dtap://remotedata/ from the compute cluster.

- DATALAKE.ENTERPRISE.COM trusts CP.ENTERPRISE.COM: When the user user@CORP.ENTERPRISE.COM accesses dtap://remotedata/ from the compute cluster to run jobs, the YARN/rm user of the compute cluster (CP.ENTERPRISE.COM) also needs to be able to access dtap://remotedata/ to get partition information and to renew the HDFS delegation token.
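The three trust relationships can be modeled as (trusting realm, trusted realm) pairs. The following Python sketch assumes only the direct trusts listed above. It does not model transitive trust paths or a krb5.conf [capaths] section, and the names TRUSTS, realm_of, and can_access are hypothetical. It makes the one-way nature of each trust explicit: rm@CP.ENTERPRISE.COM can reach DATALAKE.ENTERPRISE.COM, but nothing lets it reach CORP.ENTERPRISE.COM.

```python
# Each pair is (trusting_realm, trusted_realm): principals of the trusted
# realm may use services in the trusting realm.
TRUSTS = {
    ("CP.ENTERPRISE.COM", "CORP.ENTERPRISE.COM"),
    ("DATALAKE.ENTERPRISE.COM", "CORP.ENTERPRISE.COM"),
    ("DATALAKE.ENTERPRISE.COM", "CP.ENTERPRISE.COM"),
}


def realm_of(principal: str) -> str:
    """Return the realm part of a principal such as user@CORP.ENTERPRISE.COM."""
    return principal.rsplit("@", 1)[1]


def can_access(principal: str, service_realm: str, trusts=TRUSTS) -> bool:
    """True if the principal's realm is the service realm or is directly trusted by it.

    Only direct trusts are checked; transitive paths are deliberately ignored.
    """
    client_realm = realm_of(principal)
    return client_realm == service_realm or (service_realm, client_realm) in trusts


for who, where in [
    ("user@CORP.ENTERPRISE.COM", "CP.ENTERPRISE.COM"),
    ("user@CORP.ENTERPRISE.COM", "DATALAKE.ENTERPRISE.COM"),
    ("rm@CP.ENTERPRISE.COM", "DATALAKE.ENTERPRISE.COM"),
    ("rm@CP.ENTERPRISE.COM", "CORP.ENTERPRISE.COM"),
]:
    print(f"{who} -> {where}: {can_access(who, where)}")
```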

This article describes the following:

- Access: See Accessing a Passthrough DataTap with Cross-Realm Authentication.

- Authentication: See One-Way Cross-Realm Authentication.

- Using Ambari to configure cross-realm authentication: See Using Ambari to Configure /etc/krb5.conf.

- Troubleshooting: See Debugging.

Accessing a Passthrough DataTap with Cross-Realm Authentication

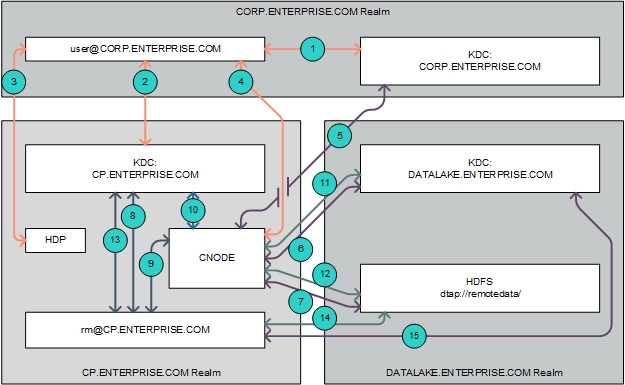

This diagram displays the high-level authentication flow for accessing a passthrough DataTap with cross-realm authentication.

In this example, the user user@CORP.ENTERPRISE.COM submits a job to the cluster that is Kerberized by the KDC realm CP.ENTERPRISE.COM and accesses data stored on a remote HDFS file system Kerberized by the KDC realm DATALAKE.ENTERPRISE.COM, using the following flow (numbers correspond to the callouts in the preceding diagram):

- The user user@CORP.ENTERPRISE.COM wants to send a job to a service in KDC realm CP.ENTERPRISE.COM. This realm is different from the one that the user belongs to, which would normally not be allowed. However, because realm CP.ENTERPRISE.COM trusts realm CORP.ENTERPRISE.COM, the user user@CORP.ENTERPRISE.COM can request a temporary service ticket (a cross-realm TGT for krbtgt/CP.ENTERPRISE.COM@CORP.ENTERPRISE.COM) from its home realm, CORP.ENTERPRISE.COM, that will be valid when submitted to the TGS (Ticket Granting Service) of the foreign realm, CP.ENTERPRISE.COM.

- The user user@CORP.ENTERPRISE.COM submits the temporary service ticket issued by the CORP.ENTERPRISE.COM realm to the Ticket Granting Service (TGS) of the CP.ENTERPRISE.COM realm, which issues a service ticket for the YARN service.

- The user user@CORP.ENTERPRISE.COM then submits this service ticket to the YARN service in the HDP compute cluster in order to run the job.

- When the job that user@CORP.ENTERPRISE.COM submitted needs to get data from the remote HDFS, the DataTap forwards the user's TGT to the deployment CNODE service. The CNODE service finds that the realm of the TGT for user@CORP.ENTERPRISE.COM is CORP.ENTERPRISE.COM, which is not the same as KDC realm DATALAKE.ENTERPRISE.COM, the realm used by the HDFS file system configured for the DataTap.

- The CNODE service obtains a temporary service ticket from the CORP.ENTERPRISE.COM KDC server.

- Because realm DATALAKE.ENTERPRISE.COM trusts realm CORP.ENTERPRISE.COM, the CNODE service then obtains a service ticket from realm DATALAKE.ENTERPRISE.COM, based on the temporary service ticket that was issued by the CORP.ENTERPRISE.COM server.

- The CNODE service uses the DATALAKE.ENTERPRISE.COM service ticket to authenticate with the NameNode service of the remote HDFS file system and access the data as user user@CORP.ENTERPRISE.COM.

- While running the job submitted by user@CORP.ENTERPRISE.COM, the YARN Resource Manager service needs to access the remote HDFS file system in order to get partition information. The Resource Manager service runs with principal rm@CP.ENTERPRISE.COM. To access the remote HDFS, it must obtain a Ticket-Granting Ticket (TGT) from the local CP.ENTERPRISE.COM KDC server.

- The user rm@CP.ENTERPRISE.COM then accesses the DataTap. The DataTap forwards the user's TGT to the deployment CNODE service. The CNODE service finds that the realm of the TGT for rm@CP.ENTERPRISE.COM is not the same as KDC realm DATALAKE.ENTERPRISE.COM, which is the one used by the HDFS service configured for the DataTap.

- The CNODE service obtains a temporary service ticket from the CP.ENTERPRISE.COM KDC server.

- Because realm DATALAKE.ENTERPRISE.COM trusts realm CP.ENTERPRISE.COM, the CNODE service then obtains a service ticket from realm DATALAKE.ENTERPRISE.COM (the KDC protecting access to the remote HDFS file system), based on the temporary service ticket that was issued by the CP.ENTERPRISE.COM server.

- The CNODE service uses the service ticket to authenticate with the NameNode service of the remote HDFS file system and access the data as user rm@CP.ENTERPRISE.COM.

- When the Resource Manager needs to renew an HDFS delegation token, it does not use the CNODE service. Instead, the rm@CP.ENTERPRISE.COM user requests a temporary service ticket for the DATALAKE.ENTERPRISE.COM realm from the local (CP.ENTERPRISE.COM) KDC server. Because realm DATALAKE.ENTERPRISE.COM trusts realm CP.ENTERPRISE.COM, the CP.ENTERPRISE.COM KDC server is able to issue the temporary service ticket.

- The rm@CP.ENTERPRISE.COM user submits the temporary service ticket to the KDC server of the DATALAKE.ENTERPRISE.COM realm and gets a service ticket for the NameNode service of the remote HDFS file system.

- The rm@CP.ENTERPRISE.COM user can then use the service ticket to renew the HDFS delegation token with the NameNode service of the remote HDFS.
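The delegation-token renewal at the end of this flow follows the standard cross-realm pattern. The client first holds a TGT from its home KDC. The same home KDC then issues a cross-realm TGT, the "temporary service ticket". Finally, the foreign realm's KDC issues the service ticket. The Python sketch below lists those requests in order. It does not model the CNODE forwarding steps. The function names and the NameNode principal nn/hdfs.datalake.enterprise.com@DATALAKE.ENTERPRISE.COM are hypothetical placeholders chosen for illustration.

```python
from typing import List, Tuple


def realm_of(principal: str) -> str:
    """Return the realm part of a principal (the text after the last @)."""
    return principal.rsplit("@", 1)[1]


def ticket_path(client: str, service: str) -> List[Tuple[str, str]]:
    """List the (issuing KDC realm, ticket) pairs a client needs to reach a service."""
    client_realm = realm_of(client)
    service_realm = realm_of(service)
    # The client's own TGT, obtained from its home KDC (for example with kinit or a keytab).
    path = [(client_realm, f"krbtgt/{client_realm}@{client_realm}")]
    if service_realm != client_realm:
        # The home KDC issues the cross-realm TGT (the "temporary service ticket").
        path.append((client_realm, f"krbtgt/{service_realm}@{client_realm}"))
    # The service realm's KDC (its TGS) issues the ticket for the service itself.
    path.append((service_realm, service))
    return path


# Hypothetical NameNode service principal in the data lake realm.
NN = "nn/hdfs.datalake.enterprise.com@DATALAKE.ENTERPRISE.COM"
for kdc, ticket in ticket_path("rm@CP.ENTERPRISE.COM", NN):
    print(f"{kdc:25} -> {ticket}")
```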

One-Way Cross-Realm Authentication

Allowing the user user@CORP.ENTERPRISE.COM to run jobs on the Hadoop compute cluster requires configuring one-way cross-realm authentication between the realms CORP.ENTERPRISE.COM and CP.ENTERPRISE.COM. Further, allowing the user user@CORP.ENTERPRISE.COM to use the DataTap dtap://remotedata within the Hadoop compute cluster requires configuring one-way cross-realm authentication between the realms CORP.ENTERPRISE.COM and DATALAKE.ENTERPRISE.COM, and between the realms CP.ENTERPRISE.COM and DATALAKE.ENTERPRISE.COM. In other words, the realm DATALAKE.ENTERPRISE.COM trusts the realms CP.ENTERPRISE.COM and CORP.ENTERPRISE.COM, and the realm CP.ENTERPRISE.COM trusts the realm CORP.ENTERPRISE.COM.

To enable these one-way cross-realm trust relationships, you will need to configure the following:

- KDC: See Step 1: KDC Configuration.

- Host: See Step 2: Host Configuration.

- DataTap: See Step 3: Remote DataTap Configuration.

- Cluster: See Step 4: Hadoop Compute Cluster Configuration.

See Using Ambari to Configure /etc/krb5.conf for information on using the Ambari interface to configure cross-realm authentication.

Step 1: KDC Configuration

Configure the KDCs as follows:

- On the KDC server for realm DATALAKE.ENTERPRISE.COM, add the following two principals:

      krbtgt/DATALAKE.ENTERPRISE.COM@CORP.ENTERPRISE.COM
      krbtgt/DATALAKE.ENTERPRISE.COM@CP.ENTERPRISE.COM

- On the KDC server for realm CP.ENTERPRISE.COM, add the following two principals:

      krbtgt/DATALAKE.ENTERPRISE.COM@CP.ENTERPRISE.COM
      krbtgt/CP.ENTERPRISE.COM@CORP.ENTERPRISE.COM

- On the KDC server for realm CORP.ENTERPRISE.COM, add the following two principals:

      krbtgt/DATALAKE.ENTERPRISE.COM@CORP.ENTERPRISE.COM
      krbtgt/CP.ENTERPRISE.COM@CORP.ENTERPRISE.COM

Each of these principals is created on two KDCs. As described at the start of this article, both copies of a principal must have the same key and the same key version number.
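Because each cross-realm principal appears on two KDCs, the list above can be derived from the trust relationships. This Python sketch is illustrative only, and the helper principals_per_kdc is hypothetical. It prints MIT Kerberos kadmin.local commands, assuming MIT KDCs and root access on each KDC host. Because addprinc runs without -randkey, it prompts for a password. Enter the same password for a given principal on both KDCs so that the keys match, and use getprinc to confirm that both sides report the same key version number.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (trusting_realm, trusted_realm) pairs for this example deployment.
TRUSTS: List[Tuple[str, str]] = [
    ("CP.ENTERPRISE.COM", "CORP.ENTERPRISE.COM"),
    ("DATALAKE.ENTERPRISE.COM", "CORP.ENTERPRISE.COM"),
    ("DATALAKE.ENTERPRISE.COM", "CP.ENTERPRISE.COM"),
]


def principals_per_kdc(trusts: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Map each realm's KDC to the krbtgt/TRUSTING@TRUSTED principals it must hold."""
    per_kdc: Dict[str, List[str]] = defaultdict(list)
    for trusting, trusted in trusts:
        principal = f"krbtgt/{trusting}@{trusted}"
        # The same principal (with the same key) is needed on both KDCs.
        per_kdc[trusting].append(principal)
        per_kdc[trusted].append(principal)
    return {realm: sorted(names) for realm, names in per_kdc.items()}


for realm, names in principals_per_kdc(TRUSTS).items():
    print(f"# On the KDC for {realm}:")
    for name in names:
        # addprinc without -randkey prompts for the password interactively.
        print(f'kadmin.local -q "addprinc {name}"')
```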

Step 2: Host Configuration

On the host(s) where the CNODE service is running, modify the [realms] and [domain_realm] sections of the /etc/bluedata/krb5.conf file to add the CORP.ENTERPRISE.COM, CP.ENTERPRISE.COM, and DATALAKE.ENTERPRISE.COM realms. For example:

    [logging]
      default = FILE:/var/log/krb5/krb5libs.log
      kdc = FILE:/var/log/krb5kdc.log
      admin_server = FILE:/var/log/kadmind.log

    [libdefaults]
      default_realm = CP.ENTERPRISE.COM
      dns_lookup_realm = false
      dns_lookup_kdc = false
      ticket_lifetime = 24h
      renew_lifetime = 7d
      forwardable = true

    [realms]
      CP.ENTERPRISE.COM = {
        kdc = kerberos.cp.enterprise.com
      }
      CORP.ENTERPRISE.COM = {
        kdc = kerberos.corp.enterprise.com
      }
      DATALAKE.ENTERPRISE.COM = {
        kdc = kerberos.datalake.enterprise.com
      }

    [domain_realm]
      .cp.enterprise.com = CP.ENTERPRISE.COM
      .datalake.enterprise.com = DATALAKE.ENTERPRISE.COM
      .corp.enterprise.com = CORP.ENTERPRISE.COM
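The [domain_realm] section tells the Kerberos library which realm a host belongs to. The following Python sketch approximates the MIT Kerberos lookup order, in which the most specific entry wins. The full host name is tried first, then each shorter domain suffix with and without a leading dot. It illustrates that assumption and is not the library's code. The name host_to_realm is hypothetical. The host hdfs.datalake.enterprise.com is the NameNode host used in the debugging section of this article.

```python
from typing import Dict, Optional

# The [domain_realm] entries from the krb5.conf example.
DOMAIN_REALM: Dict[str, str] = {
    ".cp.enterprise.com": "CP.ENTERPRISE.COM",
    ".datalake.enterprise.com": "DATALAKE.ENTERPRISE.COM",
    ".corp.enterprise.com": "CORP.ENTERPRISE.COM",
}


def host_to_realm(host: str, mapping: Dict[str, str] = DOMAIN_REALM) -> Optional[str]:
    """Approximate a [domain_realm] lookup; the most specific matching entry wins."""
    name = host.lower().rstrip(".")
    labels = name.split(".")
    # Try a.b.c first, then .b.c, b.c, .c, c (most specific to least specific).
    candidates = [name]
    for i in range(1, len(labels)):
        suffix = ".".join(labels[i:])
        candidates += ["." + suffix, suffix]
    for candidate in candidates:
        if candidate in mapping:
            return mapping[candidate]
    return None  # no entry matched; real Kerberos libraries then apply their own fallbacks


for host in ("hdfs.datalake.enterprise.com", "kerberos.cp.enterprise.com", "www.example.org"):
    print(host, "->", host_to_realm(host))
```

In this sketch an entry with a leading dot does not match the bare domain itself, so host_to_realm("datalake.enterprise.com") returns None.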

Step 3: Remote DataTap Configuration

On the remote HDFS NameNode service pointed to by the DataTap dtap://remotedata/, append the following rules to the hadoop.security.auth_to_local property of the remote Hadoop cluster:

    RULE:[1:$1@$0](ambari-qa-hdp@epic.ENTERPRISE.COM)s/.*/ambari-qa/
    RULE:[1:$1@$0](hbase-hdp@epic.ENTERPRISE.COM)s/.*/hbase/
    RULE:[1:$1@$0](hdfs-hdp@epic.ENTERPRISE.COM)s/.*/hdfs/
    RULE:[1:$1@$0](.*@CP.ENTERPRISE.COM)s/@.*//
    RULE:[2:$1@$0](dn@CP.ENTERPRISE.COM)s/.*/hdfs/
    RULE:[2:$1@$0](hbase@CP.ENTERPRISE.COM)s/.*/hbase/
    RULE:[2:$1@$0](hive@CP.ENTERPRISE.COM)s/.*/hive/
    RULE:[2:$1@$0](jhs@CP.ENTERPRISE.COM)s/.*/mapred/
    RULE:[2:$1@$0](nm@CP.ENTERPRISE.COM)s/.*/yarn/
    RULE:[2:$1@$0](nn@CP.ENTERPRISE.COM)s/.*/hdfs/
    RULE:[2:$1@$0](rm@CP.ENTERPRISE.COM)s/.*/yarn/
    RULE:[2:$1@$0](yarn@CP.ENTERPRISE.COM)s/.*/yarn/
    RULE:[1:$1@$0](.*@CORP.ENTERPRISE.COM)s/@.*//
    DEFAULT

Step 4: Hadoop Compute Cluster Configuration

To configure the Hadoop compute cluster:

- Modify the /etc/krb5.conf file on each of the virtual nodes, as follows:

      [realms]
        CP.ENTERPRISE.COM = {
          kdc = kerberos.cp.enterprise.com
        }
        CORP.ENTERPRISE.COM = {
          kdc = kerberos.corp.enterprise.com
        }
        DATALAKE.ENTERPRISE.COM = {
          kdc = kerberos.datalake.enterprise.com
        }

      [domain_realm]
        .cp.enterprise.com = CP.ENTERPRISE.COM
        .datalake.enterprise.com = DATALAKE.ENTERPRISE.COM
        .corp.enterprise.com = CORP.ENTERPRISE.COM

- Users in the realm CORP.ENTERPRISE.COM also need access to the HDFS file system in the Hadoop compute cluster. Enable this by adding the following rule to the hadoop.security.auth_to_local property:

      RULE:[1:$1@$0](.*@CORP.ENTERPRISE.COM)s/@.*//

- Restart the Hadoop services once you have finished making these changes. Do not restart the Kerberos service, because Ambari will overwrite the modified /etc/krb5.conf file with the original version when it finds a mismatch.
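The auth_to_local rules in Steps 3 and 4 are evaluated in order, and the first rule that applies determines the local user name. The following Python sketch approximates Hadoop's rule semantics as commonly documented:

- RULE:[n:format] applies only to principals with n components.
- In the format, $0 is the realm and $1, $2 are the components.
- The formatted string must fully match the filter in parentheses.
- s/pattern/replacement/ is then applied to the formatted string, to every occurrence when the g flag is present.
- DEFAULT returns the first component when the realm is the default realm.

The sketch also rejects results that still contain @ or /, which is the behavior it assumes for Hadoop's default rule mechanism. It is not Hadoop's implementation and skips the /L lowercase flag and other edge cases. The function names, the principal rm/rm-host.cp.enterprise.com@CP.ENTERPRISE.COM, and the choice of DATALAKE.ENTERPRISE.COM as the remote cluster's default realm are hypothetical.

```python
import re
from typing import List, Optional

RULE_RE = re.compile(
    r"RULE:\[(\d+):([^\]]*)\]"          # [number of components:format]
    r"(?:\(([^)]*)\))?"                 # optional (filter regex)
    r"(?:s/([^/]*)/([^/]*)/(g)?)?$"     # optional s/pattern/replacement/[g]
)


def apply_rule(rule: str, principal: str, default_realm: str) -> Optional[str]:
    """Return the name produced by one rule, or None if the rule does not apply."""
    name, realm = principal.rsplit("@", 1)
    components = name.split("/")
    if rule == "DEFAULT":
        return components[0] if realm == default_realm else None
    m = RULE_RE.match(rule)
    if not m:
        raise ValueError(f"cannot parse rule: {rule}")
    count, fmt, filt, pattern, repl, global_flag = m.groups()
    if int(count) != len(components):
        return None
    values = [realm] + components  # $0 is the realm, $1.. are the components
    base = re.sub(r"\$(\d)", lambda ref: values[int(ref.group(1))], fmt)
    if filt is not None and not re.fullmatch(filt, base):
        return None
    if pattern is not None:
        base = re.sub(pattern, repl, base, count=0 if global_flag else 1)
    return base


def auth_to_local(rules: List[str], principal: str, default_realm: str) -> str:
    """Apply rules in order; the first rule that applies decides the local user."""
    for rule in rules:
        result = apply_rule(rule, principal, default_realm)
        if result is not None:
            if "@" in result or "/" in result:
                raise ValueError(f"non-simple name {result!r} from rule {rule}")
            return result
    raise LookupError(f"no auth_to_local rule matches {principal}")


RULES = [
    "RULE:[1:$1@$0](.*@CP.ENTERPRISE.COM)s/@.*//",
    "RULE:[2:$1@$0](nn@CP.ENTERPRISE.COM)s/.*/hdfs/",
    "RULE:[2:$1@$0](rm@CP.ENTERPRISE.COM)s/.*/yarn/",
    "RULE:[1:$1@$0](.*@CORP.ENTERPRISE.COM)s/@.*//",
    "DEFAULT",
]
for principal in ("user@CORP.ENTERPRISE.COM", "rm/rm-host.cp.enterprise.com@CP.ENTERPRISE.COM"):
    print(principal, "->", auth_to_local(RULES, principal, "DATALAKE.ENTERPRISE.COM"))
```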

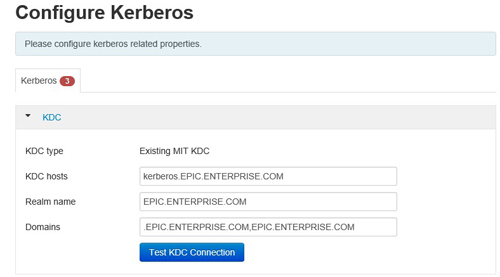

Using Ambari to Configure /etc/krb5.conf

You may modify the /etc/krb5.conf file using the Ambari interface by selecting Admin > Kerberos > Configs. The advantage of using Ambari to modify the /etc/krb5.conf file is that you can freely restart all services.

Here, the Domains field is used to map server host names to the name of the Kerberos realm:

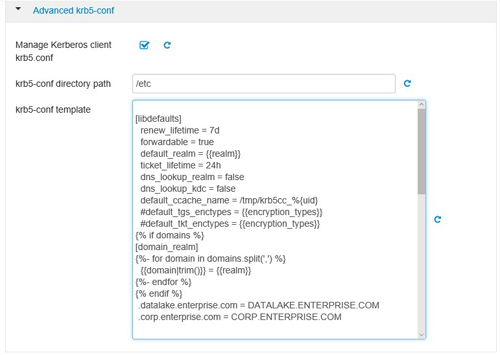

The krb5-conf template field allows you to append additional server host names to the realm name mapping:

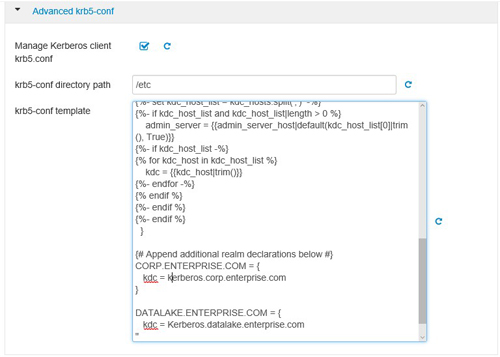

You may also append additional realm/KDC declarations in the krb5-conf template field.

Debugging

If a failure occurs while trying to access the remote HDFS storage resource using the DataTap, try accessing the NameNode of the remote HDFS storage resource directly.

You may view DataTap configuration using the Edit DataTap screen, as described in Editing an Existing DataTap.

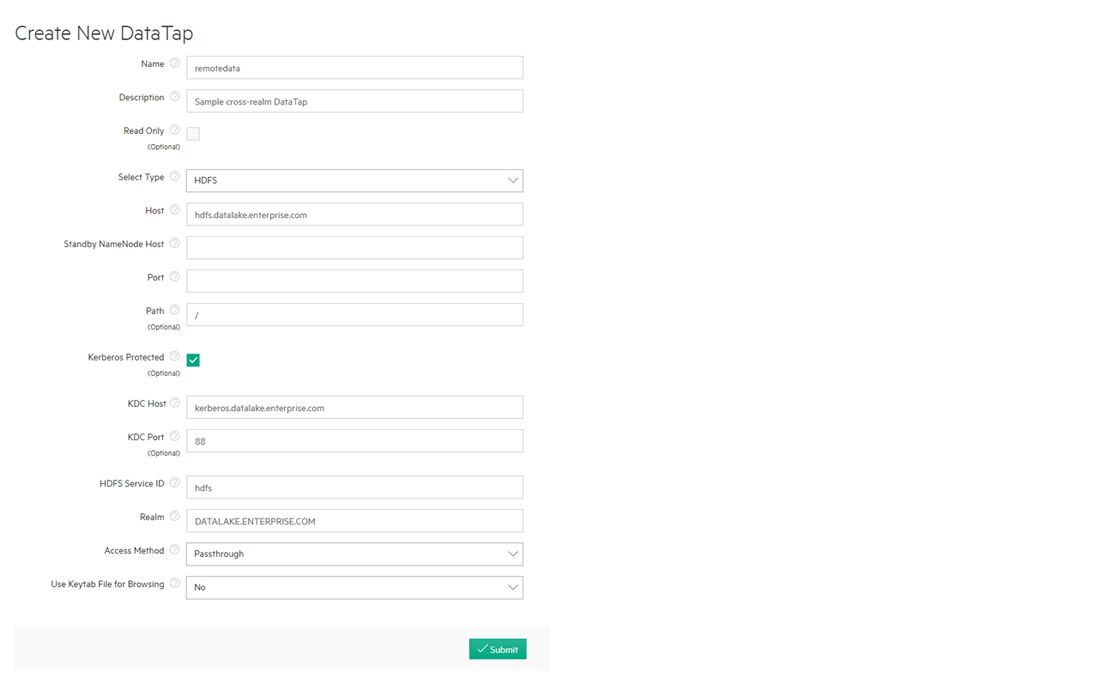

This image shows a sample central dtap://remotedata/ configuration.

To test the configuration, log in to any node in the Hadoop compute cluster and execute the kinit command to create a KDC session. The user you are logged in as must be able to authenticate against either the CORP.ENTERPRISE.COM or the CP.ENTERPRISE.COM KDC realm.

Once kinit completes successfully, you should be able to access the NameNode of the remote HDFS storage resource directly, without involving the deployment CNODE service, by executing the command hdfs dfs -ls hdfs://hdfs.datalake.enterprise.com/.

- If this command completes successfully, then test accessing the NameNode of the remote HDFS file system via the deployment CNODE service and DataTap by executing the command hdfs dfs -ls dtap://remotedata/.

- If either of these commands fails, then there is an error in the KDC/HDP/HDFS configuration that must be resolved.

The following commands enable HDFS client debugging. Execute them before running the hdfs dfs -ls command in order to log additional output:

    export HADOOP_ROOT_LOGGER=DEBUG,console
    export HADOOP_OPTS="-Dsun.security.krb5.debug=true -Djavax.net.debug=ssl"
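The two-stage check described in this section can be scripted so that the direct NameNode test always runs before the DataTap test. The Python sketch assumes that kinit has already succeeded and that the hdfs client is on the PATH. The names run_checks, CHECKS, and DEBUG_ENV are hypothetical. Passing debug=True adds the two debugging environment variables to the child processes.

```python
import os
import subprocess
from typing import Callable, List, Optional

# The debugging variables from the export commands in this section.
DEBUG_ENV = {
    "HADOOP_ROOT_LOGGER": "DEBUG,console",
    "HADOOP_OPTS": "-Dsun.security.krb5.debug=true -Djavax.net.debug=ssl",
}

# Direct NameNode access first, then access through the DataTap (CNODE service).
CHECKS = [
    ["hdfs", "dfs", "-ls", "hdfs://hdfs.datalake.enterprise.com/"],
    ["hdfs", "dfs", "-ls", "dtap://remotedata/"],
]


def run_checks(run: Callable = subprocess.run, debug: bool = False) -> Optional[List[str]]:
    """Run the checks in order; return the first failing command, or None if all pass."""
    env = dict(os.environ)
    if debug:
        env.update(DEBUG_ENV)
    for cmd in CHECKS:
        result = run(cmd, env=env)
        if result.returncode != 0:
            return cmd  # later checks are skipped, as in the manual procedure
    return None
```

On a compute cluster node, run_checks(debug=True) returns None when both listings succeed, or the first command that failed. The run parameter exists only so that the function can be exercised without a cluster.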