GPU and MIG Support

This topic provides information about support for NVIDIA GPU and MIG devices on HPE Ezmeral Runtime Enterprise.

GPU Support

HPE Ezmeral Runtime Enterprise supports making NVIDIA Data Center CUDA GPU devices available to containers or virtual nodes for use in CUDA applications.

- For information about the GPU devices supported by HPE Ezmeral Runtime Enterprise, see Support Matrixes.

- NVIDIA driver version 470.57.02 or later is required on hosts that have GPUs, regardless of whether those GPUs support Multi-Instance GPU (MIG).

- For information about the available device versions, see CUDA GPUs.
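Before you add a host, you can check the driver requirement with a short Python sketch. It queries the installed driver with nvidia-smi and compares the result numerically against 470.57.02. The helper names are hypothetical. The sketch assumes that nvidia-smi is installed and on the PATH, and it is not an HPE Ezmeral Runtime Enterprise tool.

```python
import subprocess

# Minimum driver version stated in this topic for hosts with GPUs (MIG or not).
MIN_DRIVER = "470.57.02"


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted driver version such as '470.57.02' into (470, 57, 2)."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_meets_minimum(installed: str, minimum: str = MIN_DRIVER) -> bool:
    """Compare numerically, so '470.103.01' correctly counts as newer than '470.57.02'."""
    return parse_version(installed) >= parse_version(minimum)


def installed_driver_versions() -> list[str]:
    """Hypothetical helper: ask nvidia-smi (assumed on PATH) for the driver version, one line per GPU."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return [line.strip() for line in out.splitlines() if line.strip()]
```

On a GPU host, call installed_driver_versions() and pass each result to driver_meets_minimum(). The sketch compares version tuples instead of strings because a plain string comparison would rank "470.103.01" below "470.57.02".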

HPE Ezmeral Runtime Enterprise supports GPUs on Kubernetes nodes. The underlying hosts must be running an operating system and version that is supported on the corresponding version of HPE Ezmeral Runtime Enterprise. See OS Support.

Support for MIG-enabled devices is subject to additional requirements and restrictions. See MIG Support.

HPE Ezmeral Runtime Enterprise 5.3.5 and later releases deploy updated versions of the NVIDIA runtime and other required NVIDIA packages. These releases also use a different node label to identify hosts that have GPU devices. If you upgrade from a release earlier than 5.3.5, you must remove all hosts that have GPUs (whether or not they are MIG-enabled) from HPE Ezmeral Runtime Enterprise before the upgrade. After the upgrade is complete, add those hosts back.

For more information about using GPU resources in Kubernetes pods in HPE Ezmeral Runtime Enterprise, see Using GPUs in Kubernetes Pods.

MIG Support

The Multi-Instance GPU (MIG) feature from NVIDIA virtualizes the GPU such that applications can use a fraction of a GPU to optimize resource usage and to provide workload isolation.

HPE Ezmeral Runtime Enterprise supports MIG as follows:

- NVIDIA A100 MIG instances are supported on Kubernetes Worker hosts running the RHEL 7.x and SLES versions listed in OS Support.

- MIG support requires NVIDIA driver version 470.57.02 or later.

- On hosts that have multiple A100 GPU devices, each GPU device can have a different MIG configuration.

  For example, on a system that has four A100 GPUs, the MIG configurations might be as follows:

  - GPU 0: MIG disabled

  - GPU 1: 7 MIG 1g.5gb devices

  - GPU 2: 3 MIG 2g.10gb devices

  - GPU 3: 1 MIG 4g.20gb, 1 MIG 2g.10gb, 1 MIG 1g.5gb

- The NVIDIA GPU operator is not supported.

- GPU metrics are not available for A100 MIG instances. NVIDIA recommends using DCGM-Exporter.

- Information about hosts listed on the Host(s) Info tab of the Kubernetes Cluster Details screen includes the number of GPU devices. The More Info link displays information about the MIG instances.

- The NVIDIA device plugin version 0.9.0, which is deployed by the

nvidia-pluginKubernetes add-on, does not support multiple compute instances (CI) for the same GPU instance (GI). Therefore, do not configure MIG with profiles that start with Xc, such as 1c.3g.20gb or 2c.3g.20gb. - As stated in the NVIDIA Multi-Instance GPU User Guide, "MIG supports running CUDA applications by specifying

the CUDA device on which the application should be run. With CUDA 11, only

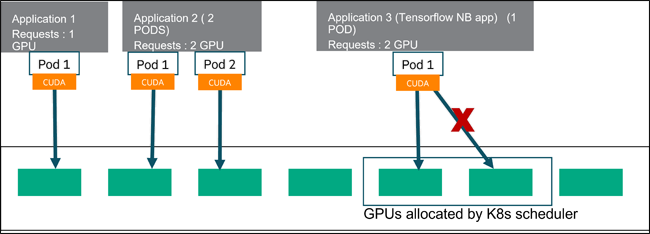

enumeration of a single MIG instance is supported.” This restriction means that

applications that use CUDA can use only the first MIG device applied to a

pod.

  For example, suppose an A100 GPU is configured with 7 MIG devices. Pod1 is assigned to MIG device 0, and Pod2 is assigned to MIG device 1. A TensorFlow notebook application pod that requests two GPUs is then created. The following occurs:

  - The new pod is assigned MIG devices 2 and 3.

  - When you run the nvidia-smi -L command from inside the pod, it returns two devices, indexed as 0 and 1. For example:

        kubectl exec -n tenant1 tf-gpu-nb-controller-mvz7p-0 -- nvidia-smi -L
        GPU 0: A100-SXM4-40GB (UUID: GPU-5d5ba0d6-d33d-2b2c-524d-9e3d8d2b8a77)
          MIG 1g.5gb Device 0: (UUID: MIG-c6d4f1ef-42e4-5de3-91c7-45d71c87eb3f)
          MIG 1g.5gb Device 1: (UUID: MIG-cba663e8-9bed-5b25-b243-5985ef7c9beb)

  - However, the TensorFlow tf.config.list_physical_devices('GPU') call returns only one device:

        [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

- GPU quotas on tenant namespaces are applied through the nvidia.com/gpu specifier. This specifier covers physical GPUs, and it covers MIG instances only when the single strategy is used. For example, a quota of three 1g.5gb devices is not supported.
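You can run a rough pre-flight check on a planned MIG layout with the following Python sketch. It encodes two points from this topic: Xc compute-instance profiles must not be used, and each GPU can have its own layout, as in the four-GPU example. The sketch assumes an A100 40 GB device with 7 compute slices and 40 GB of memory. It checks only that the total compute slices and memory fit. It does not model NVIDIA's placement rules, so nvidia-smi might still reject a layout that passes this check. The function name is hypothetical.

```python
import re

# Assumed capacity of one A100-SXM4-40GB: 7 compute slices and 40 GB of memory.
A100_40GB_COMPUTE_SLICES = 7
A100_40GB_MEMORY_GB = 40

# GPU instance profiles look like "1g.5gb"; compute instance profiles such as
# "1c.3g.20gb" carry an extra "<n>c." prefix, which nvidia-plugin 0.9.0 cannot use.
GI_PROFILE = re.compile(r"^(\d+)g\.(\d+)gb$")
CI_PROFILE = re.compile(r"^\d+c\.\d+g\.\d+gb$")


def check_gpu_layout(profiles: list[str]) -> list[str]:
    """Return problems found in one GPU's MIG layout; an empty result means none found.

    An empty layout means MIG is disabled on that GPU. Only totals are checked,
    not NVIDIA's placement constraints.
    """
    problems = []
    compute = memory = 0
    for profile in profiles:
        if CI_PROFILE.match(profile):
            problems.append(f"{profile}: compute instance profiles are not supported")
            continue
        match = GI_PROFILE.match(profile)
        if match is None:
            problems.append(f"{profile}: unrecognized profile name")
            continue
        compute += int(match.group(1))
        memory += int(match.group(2))
    if compute > A100_40GB_COMPUTE_SLICES:
        problems.append(f"uses {compute} compute slices, more than {A100_40GB_COMPUTE_SLICES}")
    if memory > A100_40GB_MEMORY_GB:
        problems.append(f"uses {memory} GB, more than {A100_40GB_MEMORY_GB} GB")
    return problems


# The four-GPU example layout from this topic.
layouts = {
    0: [],
    1: ["1g.5gb"] * 7,
    2: ["2g.10gb"] * 3,
    3: ["4g.20gb", "2g.10gb", "1g.5gb"],
}

for gpu, profiles in layouts.items():
    issues = check_gpu_layout(profiles)
    print(f"GPU {gpu}: {'OK' if not issues else '; '.join(issues)}")
```

All four example layouts pass. A layout that includes 1c.3g.20gb is flagged, and so is a layout whose profiles add up to more than 7 compute slices.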

For more information about the MIG feature, including application considerations, see the following from NVIDIA:

Host GPU Driver Compatibility

The host OS NVIDIA driver must be compatible with the CUDA library version required by the application.

For example:

- TensorFlow publishes tested build configurations for GPUs. These include version compatibility information for Python, CUDA, and other components.

- The KubeDirector Notebook application that is included with HPE Ezmeral Runtime Enterprise 5.4.x releases is installed with CUDA version 11.4.3. The Python Training and Python Inference applications also use CUDA 11.x.

The driver and CUDA package bundles from NVIDIA might not support every GPU listed on the CUDA GPUs page. You might need to download and install the driver and a compatible CUDA toolkit for your specific GPU model separately.

For information about requirements for MIG support, see MIG Support.

CUDA Toolkit

For RHEL and SLES hosts, you can download and install an OS-specific CUDA toolkit. The toolkit can be useful for building applications, but it might not be needed on the host itself.

The NVIDIA driver version determines the supported CUDA toolkit versions, as described in CUDA Toolkit and Compatible Driver Versions.
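The driver version determines which toolkits are supported, so you can express the lookup as a small Python function. This is a hypothetical sketch. The caller must supply a table of minimum driver versions copied from NVIDIA's CUDA Toolkit and Compatible Driver Versions page. The sketch includes no real table values.

```python
# Hypothetical sketch: list the CUDA toolkit versions that an installed driver can run.
# The min_driver_for_toolkit table must come from NVIDIA's
# "CUDA Toolkit and Compatible Driver Versions" page; no real values are included here.


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version such as '470.57.02' or '11.4' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def supported_toolkits(driver: str, min_driver_for_toolkit: dict[str, str]) -> list[str]:
    """Return toolkit versions whose minimum driver is <= the installed driver, newest first."""
    installed = parse_version(driver)
    ok = [
        toolkit
        for toolkit, needed in min_driver_for_toolkit.items()
        if parse_version(needed) <= installed
    ]
    return sorted(ok, key=parse_version, reverse=True)
```

For example, if you pass "470.57.02" and a table copied from NVIDIA, the function returns the toolkit versions that the table lists as compatible with that driver, newest first.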

You can choose the NVIDIA driver version when you configure on-premises resources. When you add Amazon EC2 hosts with GPUs (such as in a hybrid deployment), the NVIDIA driver is installed as part of the AMI for the EC2 instance that supports each node.

Deploying GPUs in HPE Ezmeral Runtime Enterprise

For information about deploying GPU and MIG in HPE Ezmeral Runtime Enterprise, see the following:

GPU and MIG Resources in Kubernetes Applications

For information about using GPU resources in Kubernetes applications and pods, see Using GPUs in Kubernetes Pods.
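A generic Kubernetes sketch can illustrate the GPU requests and quotas described in this topic. The following Python script builds two objects and prints them as a JSON List that kubectl apply accepts:

- A ResourceQuota that limits the nvidia.com/gpu extended resource in a namespace.
- A pod that requests one device through that resource.

The namespace tenant1 comes from the example in this topic. The pod name, quota name, and the image example/cuda-app:latest are hypothetical placeholders. HPE Ezmeral Runtime Enterprise might manage tenant quotas itself, so treat this sketch as an illustration of the Kubernetes objects involved, not as the supported procedure.

```python
import json

# Namespace from this topic's example; all other names below are hypothetical placeholders.
NAMESPACE = "tenant1"

# Quota on the extended resource. Kubernetes quotas extended resources with the
# "requests." prefix. It counts devices, so it cannot target a MIG profile such as 1g.5gb.
quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "gpu-quota", "namespace": NAMESPACE},
    "spec": {"hard": {"requests.nvidia.com/gpu": "3"}},
}

# Pod that asks the device plugin for one nvidia.com/gpu device
# (a physical GPU, or a MIG instance under the single strategy).
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "cuda-job", "namespace": NAMESPACE},
    "spec": {
        "restartPolicy": "Never",
        "containers": [
            {
                "name": "cuda",
                "image": "example/cuda-app:latest",  # hypothetical image
                "command": ["nvidia-smi", "-L"],
                "resources": {"limits": {"nvidia.com/gpu": 1}},
            }
        ],
    },
}

# Wrap both objects in a List so a single `kubectl apply -f -` can create them.
manifest = {"apiVersion": "v1", "kind": "List", "items": [quota, pod]}
print(json.dumps(manifest, indent=2))
```

To apply the objects, pipe the output to kubectl, for example `python gpu_manifests.py | kubectl apply -f -` (the script name is hypothetical). If the pod starts, its nvidia-smi -L output lists the device that was assigned to it.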