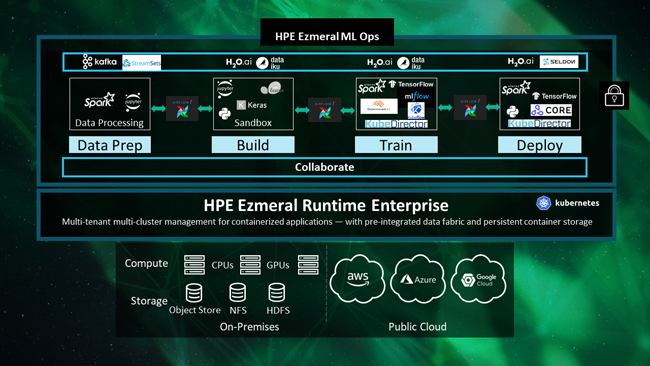

HPE Ezmeral ML Ops

The topics in this section describe machine learning operations (ML Ops, also written MLOps) using HPE Ezmeral ML Ops in HPE Ezmeral Runtime Enterprise. HPE Ezmeral ML Ops is not available with HPE Ezmeral Runtime Enterprise Essentials.

About HPE Ezmeral ML Ops

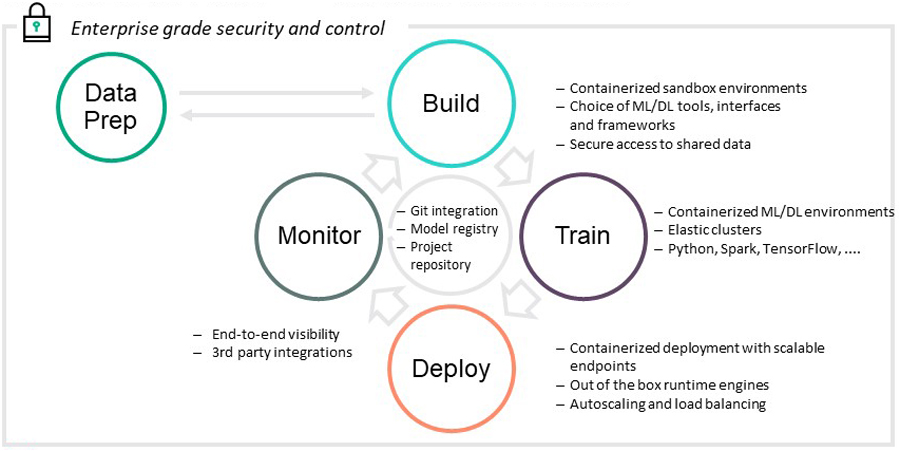

HPE Ezmeral ML Ops brings Kubernetes pods and containers to the entire machine learning lifecycle, so that you can build, train, deploy, and monitor machine learning (ML) and deep learning (DL) models. It supports sandbox development in notebooks, distributed training, and the deployment and monitoring of trained models in production. Project repository, source control, and model registry features support collaboration.

Features by ML Lifecycle Stage

With HPE Ezmeral ML Ops, data scientists can spin up containerized environments on scalable compute clusters, using their choice of machine learning tools and frameworks for model development. When a model is ready for deployment, HPE Ezmeral ML Ops provides containerized endpoints with automatic scaling and load balancing to handle variable workloads and optimize resource usage.

Features provided at each stage of the machine learning lifecycle include:

- Build:
  - Containerized sandbox environments
  - Choice of ML/DL tools, interfaces, and frameworks
  - Secure access to shared data
- Train:
  - Containerized, distributed ML/DL environments
  - Auto-scale capabilities
  - Prepackaged images for Python, Spark, and TensorFlow
- Collaborate:
  - Project repository
  - Model registry
  - GitHub integration
- Deploy:
  - Support for multiple runtime engines
  - REST endpoints with token-based authorization
  - Auto-scaling and load balancing
- Monitor:
  - Notebook resource utilization
  - Training cluster resource monitoring
  - Deployment resource monitoring
  - REST input and output logs

Licensing

HPE Ezmeral ML Ops requires a separate license. See HPE Ezmeral ML Ops.