Registering HPE Ezmeral Data Fabric on Bare Metal as Tenant Storage

This procedure describes registering HPE Ezmeral Data Fabric on Bare Metal as Tenant Storage. An HPE Ezmeral Data Fabric on Bare Metal cluster is external to the HPE Ezmeral Runtime Enterprise installation. After you have installed or upgraded to HPE Ezmeral Runtime Enterprise 5.5.0 or later, multiple HPE Ezmeral Runtime Enterprise instances can register the same HPE Ezmeral Data Fabric on Bare Metal cluster as Tenant Storage.

Prerequisites

- The user who performs this procedure must have Platform Administrator access to HPE Ezmeral Runtime Enterprise.

- Activity must be quiesced on the relevant clusters in the HPE Ezmeral Runtime Enterprise instance.

- Tenant storage must not already be configured on the HPE Ezmeral Runtime Enterprise deployment.

- An HPE Ezmeral Data Fabric on Bare Metal cluster must have been deployed. See HPE Ezmeral Data Fabric Documentation for more details about HPE Ezmeral Data Fabric on Bare Metal clusters.

- When deploying the Data Fabric on Bare Metal cluster:

  - Keep the UID for the mapr user at the default of 5000.
  - Keep the GID for the mapr group at the default of 5000.
  - The Data Fabric (DF) cluster on Bare Metal must be a SECURE cluster.
  - Data At Rest Encryption (DARE) must be enabled on the DF cluster on Bare Metal. If you are deploying a new DF cluster on Bare Metal, enable DARE during the installation. To enable DARE on an existing Data Fabric cluster on Bare Metal, see Enabling Encryption of Data at Rest.
  - For compatibility information, see Support Matrixes.

- Data Fabric volumes whose names match the per-tenant volume names must not exist on the Data Fabric on Bare Metal cluster. For more information, see Administering volumes.

- For Data Fabric clusters version 7.4.x only, you must apply patch 20240402 or later, which contains the fix for bug MFS-17055. This fix changes the expiration of the service ticket from two weeks to LIFETIME.
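Two of the prerequisites above (the mapr UID/GID and the secure cluster setting) can be spot-checked from a shell on a Data Fabric node before you start. The following is a hypothetical sketch, not part of the HPE procedure: the function names check_mapr_ids and check_cluster_secure are illustrative, and it assumes the cluster configuration file is /opt/mapr/conf/mapr-clusters.conf and that each secure cluster entry contains the token secure=true. It does not check DARE or the patch level.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight checks for a Data Fabric on Bare Metal node.
# Assumptions (not part of the HPE procedure): the cluster configuration file is
# /opt/mapr/conf/mapr-clusters.conf, and each secure cluster entry contains the
# token secure=true. DARE and patch-level checks are not covered.

# Succeed only if USER exists and both its UID and primary GID equal EXPECTED.
check_mapr_ids() {
  local user="${1:-mapr}" expected="${2:-5000}"
  local uid gid
  uid=$(id -u "$user" 2>/dev/null) || { echo "user '$user' not found" >&2; return 1; }
  gid=$(id -g "$user")
  if [[ "$uid" != "$expected" || "$gid" != "$expected" ]]; then
    echo "'$user' has UID=$uid GID=$gid; expected $expected for both" >&2
    return 1
  fi
}

# Succeed only if the file lists at least one cluster and every entry is secure=true.
check_cluster_secure() {
  local conf="${1:-/opt/mapr/conf/mapr-clusters.conf}"
  local entries
  # Drop blank and comment lines; grep exits non-zero if nothing remains
  # or if the file cannot be read.
  entries=$(grep -Ev '^[[:space:]]*(#|$)' "$conf" 2>/dev/null) || {
    echo "no cluster entries found in $conf" >&2
    return 1
  }
  # Any remaining line without a standalone secure=true token fails the check.
  if grep -vqE '(^|[[:space:]])secure=true([[:space:]]|$)' <<<"$entries"; then
    echo "$conf contains a cluster entry that is not secure=true" >&2
    return 1
  fi
}
```

For example, after sourcing the file on a node of the Data Fabric cluster, run `check_mapr_ids mapr 5000 && check_cluster_secure && echo "prerequisites OK"`. Both functions report the failing condition on stderr and return non-zero, so they can be chained in a larger pre-check script.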

About this task

- If you have an HPE Ezmeral Data Fabric on Bare Metal cluster outside HPE Ezmeral Runtime Enterprise and want to configure it as tenant storage, continue with this procedure.

- If you have already registered another Data Fabric instance as tenant/persistent storage, do not proceed with this procedure. Contact Hewlett Packard Enterprise Support if you want to use a different Data Fabric instance as tenant storage.

- It is no longer necessary to dedicate an HPE Ezmeral Data Fabric on Bare Metal cluster to one HPE Ezmeral Runtime Enterprise installation.

- Multiple HPE Ezmeral Runtime Enterprise installations may register the same HPE Ezmeral Data Fabric on Bare Metal cluster as the backing for their tenant storage.

- On each HPE Ezmeral Runtime Enterprise installation, all tenants will have their tenant storage backed by the same registered HPE Ezmeral Data Fabric on Bare Metal cluster.

This registration procedure must be run on each HPE Ezmeral Runtime Enterprise installation.

This procedure may require 10 minutes or more per EPIC or Kubernetes host (Controller, Shadow Controller, Arbiter, Master, Worker, and so on), because it configures and deploys Data Fabric client software on each host.

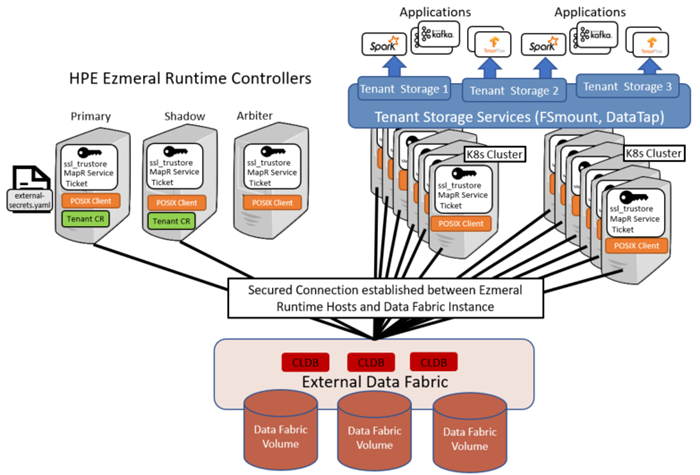

After Data Fabric registration is completed, the configuration will look as follows:

- Log in as mapr user, to a node of the HPE Ezmeral Data Fabric on Bare Metal cluster, on which the CLDB

and Apiserver services are running, and:

mkdir <working-dir-on-bm-df>/

- On the Primary Controller of HPE Ezmeral Runtime Enterprise

installation, do the following:

-

scp /opt/bluedata/common-install/scripts/mapr/gen-external-secrets.sh mapr@<cldb_node_ip_address>:<working-dir-on-bm-df>/ -

scp /opt/bluedata/common-install/scripts/mapr/prepare-bm-tenants.sh mapr@<cldb_node_ip_address>:<working-dir-on-bm-df>/ -

mkdir /opt/bluedata/tmp/ext-bm-mapr/

-

- Create a user-defined manifest for the procedure:

- If you are not specifying any keys (i.e. to generate default

values for all

keys):

touch /opt/bluedata/tmp/ext-bm-mapr/ext-dftenant-manifest.user-defined - Else, specify the following parameters:

-

cat << EOF > /opt/bluedata/tmp/ext-bm-mapr/ext-dftenant-manifest.user-defined EXT_MAPR_MOUNT_DIR="/<user_specified_directory_in_mount_path_for_volumes>" TENANT_VOLUME_NAME_TAG="<user_defined_tag_to_be_included_in_tenant_volume_names>" EOF

-

- If you are not specifying any keys (i.e. to generate default

values for all

keys):

- On the CLDB node of the HPE Ezmeral Data Fabric on Bare Metal cluster:

cd <working-path-on-bm-df>/./prepare-bm-tenants.sh

- On the Primary Controller of HPE Ezmeral Runtime Enterprise:

- Move or remove any existing

bm-info-*.tarfrom/opt/bluedata/tmp/ext-bm-mapr/ scp mapr@<cldb_node_ip_address>:< working-dir-on-bm-df>/bm-info-*.tar /opt/bluedata/tmp/ext-bm-mapr/cd /opt/bluedata/tmp/ext-bm-mapr/LOG_FILE_PATH=<log_file_path> /opt/bluedata/bundles/hpe-cp-*/startscript.sh --action ext-bm-df-registration

- Move or remove any existing

Procedure

-

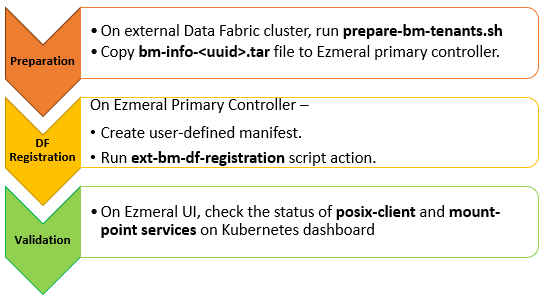

Preparation (On HPE Ezmeral Data Fabric on Bare Metal

Cluster):

-

Before Registration (On HPE Ezmeral Runtime Enterprise

Primary Controller):

Perform the following steps on the HPE Ezmeral Runtime Enterprise Primary Controller host.

-

Registration

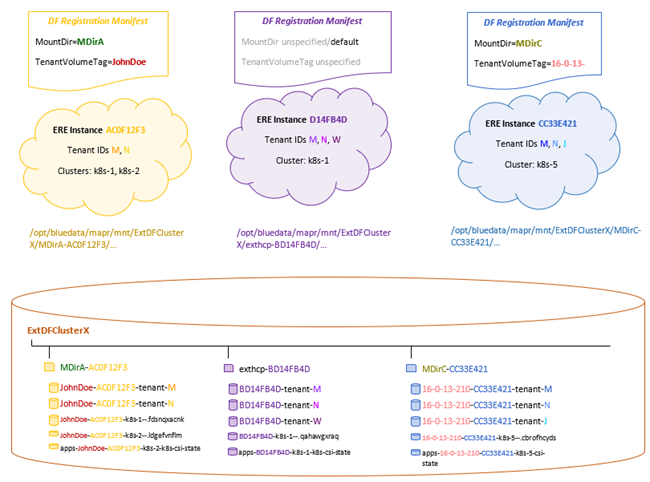

  The ext-bm-df-registration action represents the overall registration procedure for external HPE Ezmeral Data Fabric on Bare Metal. Future Kubernetes clusters created in HPE Ezmeral Runtime Enterprise will have persistent volumes located under <df_cluster_name>/<ext_mapr_mount_dir>-<bdshared_global_uniqueid>/. The registered HPE Ezmeral Data Fabric on Bare Metal cluster will be the backing for the Storage Classes of future Kubernetes Compute clusters created in HPE Ezmeral Runtime Enterprise.

  The registration procedure does not modify the Storage Classes of Compute clusters that existed before the registration.

-

Validation:

To confirm that the Registration is completed, check the following:

-

Check the output and log of the

ext-bm-df-registrationaction . - On the HPE Ezmeral Runtime Enterprise Web UI, view the Tenant Storage tab on the System Settings page. Check that the information displayed on the screen is accurate for the HPE Ezmeral Data Fabric on Bare Metal cluster.

-

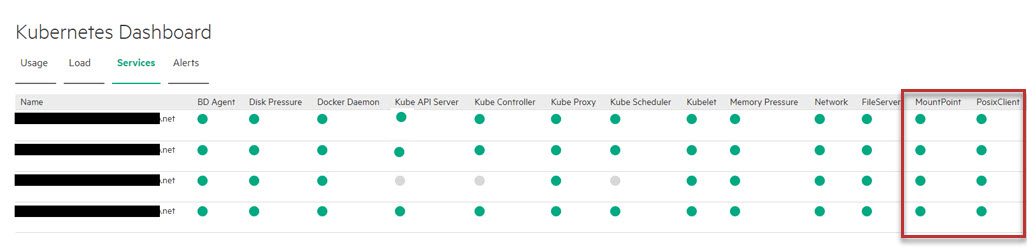

On the HPE Ezmeral Runtime Enterprise, view the

Kubernetes and EPIC

Dashboards, and ensure that the POSIX Client and Mount Path services on

all hosts are in normal state.

- On the HPE Ezmeral Runtime Enterprise web UI, as an authenticated user, check that you are able to browse Tenant Storage on an existing tenant. You can also try uploading a file to a directory under Tenant Storage, and reading the uploaded file. See Uploading and Downloading Files for more details.

-

Check the output and log of the
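The preparation and registration commands of this procedure can be collected into a single driver run from the Primary Controller. The following is a hypothetical sketch, not an HPE-provided tool: the function names run and register_bm_df, the DRY_RUN switch, and the use of ssh and mkdir -p are additions. It assumes passwordless SSH and scp as the mapr user to the CLDB node and a single hpe-cp-* bundle under /opt/bluedata/bundles. It creates an empty manifest (default values for all keys) only when none exists, so create a manifest with keys beforehand if you need one. Validation remains manual.

```shell
#!/usr/bin/env bash
# Hypothetical driver that replays the documented commands from the Primary
# Controller. Assumptions (not part of the HPE procedure):
#   - passwordless SSH/scp as the mapr user to the CLDB node;
#   - exactly one hpe-cp-* bundle under /opt/bluedata/bundles.
# Set DRY_RUN=1 to print the commands without executing them.

SCRIPTS_DIR=/opt/bluedata/common-install/scripts/mapr
LOCAL_DIR=/opt/bluedata/tmp/ext-bm-mapr
MANIFEST="$LOCAL_DIR/ext-dftenant-manifest.user-defined"

# Print a command, then execute it unless DRY_RUN=1.
run() {
  printf '+ %s\n' "$*"
  [[ "${DRY_RUN:-0}" == 1 ]] || "$@"
}

# Usage: register_bm_df <cldb_node_ip_address> <working-dir-on-bm-df> <log_file_path>
register_bm_df() {
  local cldb="$1" workdir="$2" log="$3"
  if [[ -z "$cldb" || -z "$workdir" || -z "$log" ]]; then
    echo "usage: register_bm_df <cldb_ip> <working-dir-on-bm-df> <log_file_path>" >&2
    return 2
  fi

  # Locate the registration entry point; refuse to guess between several bundles.
  local starts
  starts=( /opt/bluedata/bundles/hpe-cp-*/startscript.sh )
  if (( ${#starts[@]} != 1 )); then
    echo "expected one startscript.sh, found ${#starts[@]}" >&2
    return 1
  fi

  # Preparation: working directory and helper scripts on the DF node.
  run ssh "mapr@$cldb" mkdir -p "$workdir" || return
  run scp "$SCRIPTS_DIR/gen-external-secrets.sh" "$SCRIPTS_DIR/prepare-bm-tenants.sh" \
      "mapr@$cldb:$workdir/" || return

  # Before Registration: local directory and, only if none exists yet,
  # an empty manifest (default values for all keys).
  run mkdir -p "$LOCAL_DIR" || return
  if [[ ! -e "$MANIFEST" ]]; then
    run touch "$MANIFEST" || return
  fi

  # Registration: run the preparation script on the DF node, set aside any
  # old bm-info-*.tar files as .bak copies, then fetch the new archive.
  run ssh "mapr@$cldb" "cd '$workdir' && ./prepare-bm-tenants.sh" || return
  local old
  for old in "$LOCAL_DIR"/bm-info-*.tar; do
    if [[ -e "$old" ]]; then
      run mv "$old" "$old.bak" || return
    fi
  done
  run scp "mapr@$cldb:$workdir/bm-info-*.tar" "$LOCAL_DIR/" || return

  # Like the documented cd step, this changes the calling shell's directory.
  run cd "$LOCAL_DIR" || return
  run env "LOG_FILE_PATH=$log" "${starts[0]}" --action ext-bm-df-registration
}
```

For example, `DRY_RUN=1 register_bm_df <cldb_node_ip_address> <working-dir-on-bm-df> <log_file_path>` prints each command without running it; omit DRY_RUN=1 to execute them. Renaming old archives to .bak, rather than deleting them, is one way to satisfy the "move or remove" step while keeping the previous files for troubleshooting.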