Airflow

Provides an overview of Apache Airflow in HPE Ezmeral Unified Analytics Software.

You can use Airflow to author, schedule, and monitor workflows and data pipelines.

A workflow is a Directed Acyclic Graph (DAG) of tasks, such as a big data processing pipeline. Workflows start on a schedule or are triggered by an event. A DAG defines the order in which tasks run and how tasks are rerun after failures. Tasks define the actions to perform, such as ingesting data, monitoring, and reporting.
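As a minimal sketch of what a DAG encodes, the following plain-Python example (no Airflow import) orders a hypothetical four-task pipeline so that every task runs after its upstream tasks, rejects a dependency graph that contains a cycle, and reruns a failing task a bounded number of times. It uses the standard library's `graphlib` module (Python 3.9+); the task names and the helpers `run_order`, `is_valid_dag`, and `run_with_retries` are illustrative assumptions. In an actual Airflow DAG file, you would instead declare dependencies with the `>>` operator and set the `retries` argument on tasks.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of upstream tasks it depends on.
PIPELINE = {
    "ingest": set(),
    "transform": {"ingest"},
    "train_model": {"transform"},
    "report": {"transform"},
}


def run_order(dag):
    """Return one valid execution order in which upstream tasks always come first."""
    return list(TopologicalSorter(dag).static_order())


def is_valid_dag(dag):
    """A workflow is only a DAG if its dependencies contain no cycle."""
    try:
        run_order(dag)
        return True
    except CycleError:
        return False


def run_with_retries(task, retries):
    """Call task(); if it raises, rerun it up to `retries` more times.

    Returns (result, attempts). Re-raises the last error once retries are exhausted.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception:
            if attempts > retries:
                raise
```

Because `train_model` and `report` depend only on `transform`, either may come first in the order; a scheduler is free to run them in parallel.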

To learn more, see the Airflow documentation.

Airflow Functionality

Airflow in HPE Ezmeral Unified Analytics Software supports the following functionality:

- Extracting data from multiple data sources and running Spark jobs or other data transformations.
- Training machine learning models.
- Generating reports automatically.
- Running backups and other DevOps tasks.

Airflow Architecture

- Airflow Operator: Manages and maintains the Airflow Base and Airflow Cluster Kubernetes Custom Resources by creating and updating Kubernetes objects.
- Airflow Base: Manages the PostgreSQL database that stores Airflow metadata.
- Airflow Cluster: Deploys the UI and scheduler components of Airflow.

In HPE Ezmeral Unified Analytics Software, there is only one Airflow instance per cluster, and all authenticated users can access Airflow DAGs.

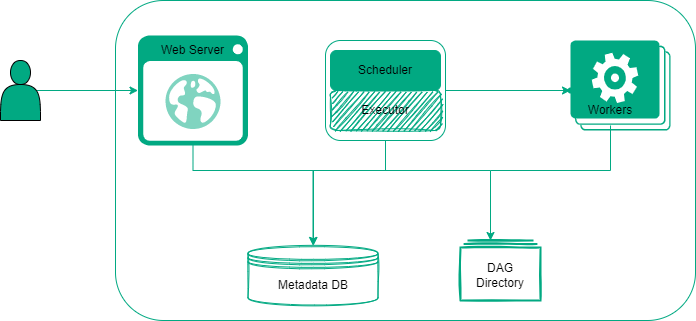

Airflow Components

- Scheduler: Triggers scheduled workflows and submits their tasks to an executor to run.
- Executor: Runs the tasks itself or delegates them to workers for execution.
- Worker: Executes the tasks.
- Web Server: Provides a user interface to analyze, schedule, monitor, and visualize tasks and DAGs. The Web Server also lets you manage users and roles and set configuration options.
- DAG Directory: Contains the DAG files that the Scheduler, Executor, and Web Server read.
- Metadata Database: Stores metadata about DAG state, DAG runs, and Airflow configuration options.
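To make the division of labor among these components concrete, here is a toy, pure-Python model of how a scheduler, an executor, workers, and a metadata store interact. It is a simplified sketch, not Airflow's implementation: the class names are hypothetical, this executor always delegates to workers in round-robin order rather than sometimes running tasks itself, and a dictionary stands in for the PostgreSQL metadata database. Real Airflow deployments choose among executors such as LocalExecutor, CeleryExecutor, or KubernetesExecutor; which one HPE Ezmeral Unified Analytics Software uses is not stated here.

```python
from collections import deque

# Toy model of the component roles only. The class names are hypothetical
# and do not correspond to Airflow's internal classes.


class Worker:
    def __init__(self, name):
        self.name = name

    def run(self, task_id, fn):
        # A worker executes the task's callable and reports which worker ran it.
        return self.name, task_id, fn()


class Executor:
    """Queues submitted tasks and delegates them to workers round-robin."""

    def __init__(self, workers):
        self.workers = workers
        self.queue = deque()
        self._next = 0

    def submit(self, task_id, fn):
        self.queue.append((task_id, fn))

    def drain(self):
        results = []
        while self.queue:
            task_id, fn = self.queue.popleft()
            worker = self.workers[self._next % len(self.workers)]
            self._next += 1
            results.append(worker.run(task_id, fn))
        return results


class Scheduler:
    """Submits a DAG run's tasks (already in dependency order) to the executor
    and records each task's state in a dict standing in for the metadata database."""

    def __init__(self, executor):
        self.executor = executor
        self.metadata = {}  # task_id -> state

    def trigger(self, ordered_tasks):
        for task_id, fn in ordered_tasks:
            self.metadata[task_id] = "queued"
            self.executor.submit(task_id, fn)
        results = self.executor.drain()
        for _, task_id, _ in results:
            self.metadata[task_id] = "success"
        return results
```

With two workers and three tasks, the first and third tasks land on the first worker and the second task on the second worker; the scheduler's `metadata` dictionary then records every task as `success`.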

Airflow Limitations

- The CPU and memory resource limits for executors are fixed (CPU: 1, memory: 2Gi) and cannot be modified.

- To use the Spark Operator, you must specify your username under the "username" key in the DAG Run Configuration.

- The logs of successfully run DAGs are available until the corresponding pods are deleted.
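Because of the Spark Operator limitation, each DAG run must carry a "username" key in its configuration. The sketch below builds such a configuration, serializes it as the JSON body that Airflow 2's stable REST API accepts at `POST /api/v1/dags/{dag_id}/dagRuns`, and shows a task-side guard that fails fast when the key is missing. The helper names, the example username `alice`, and the extra `run_label` key are hypothetical, and whether that REST endpoint is reachable in HPE Ezmeral Unified Analytics Software is an assumption; the same `{"username": ...}` object is what you would supply as the run configuration when triggering a DAG with a configuration from the UI.

```python
import json
from typing import Optional

# Hypothetical helpers: only the "username" key is required by the limitation
# above; any other keys passed via **extra are illustrative.


def build_dag_run_conf(username, **extra):
    """Return a DAG run configuration that carries the required "username" key."""
    if not username:
        raise ValueError("username must be a non-empty string")
    return {"username": username, **extra}


def trigger_request_body(conf):
    """Serialize conf as the JSON body accepted by Airflow 2's stable REST API
    (POST /api/v1/dags/{dag_id}/dagRuns). Whether this endpoint is exposed in
    HPE Ezmeral Unified Analytics Software is an assumption."""
    return json.dumps({"conf": conf})


def username_from_conf(conf: Optional[dict]) -> str:
    """Task-side guard: fail fast when a run was triggered without a username.

    In a real Airflow task, conf would typically come from context["dag_run"].conf.
    """
    username = (conf or {}).get("username")
    if not username:
        raise KeyError('DAG run configuration is missing the "username" key')
    return username
```

Validating the key at the start of the task surfaces a missing username as an immediate, clearly labeled failure instead of an error deep inside the Spark job submission.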

To learn more about Airflow, see Airflow Concepts.