Submitting Spark Applications by Using DAGs

Describes how to submit Spark applications by using DAGs in Airflow.

Prerequisites

- Prepare the DAG for your Spark application.

- Add your DAG to the Git repository. NOTE: If you do not have a repository to store Airflow DAGs, ask an administrator to configure the Git repository now. For details, see Airflow DAGs Git Repository.

- After your DAG is available in the Git repository, sign in to HPE Ezmeral Unified Analytics Software as a member.

About this task

To run a DAG that submits Spark applications in Airflow, follow these steps:

- Navigate to the Airflow screen using either of the following methods:

- Click Data Engineering > Airflow Pipelines.

- Click Tools & Frameworks, select the Data Engineering tab, and click Open in the Airflow tile.

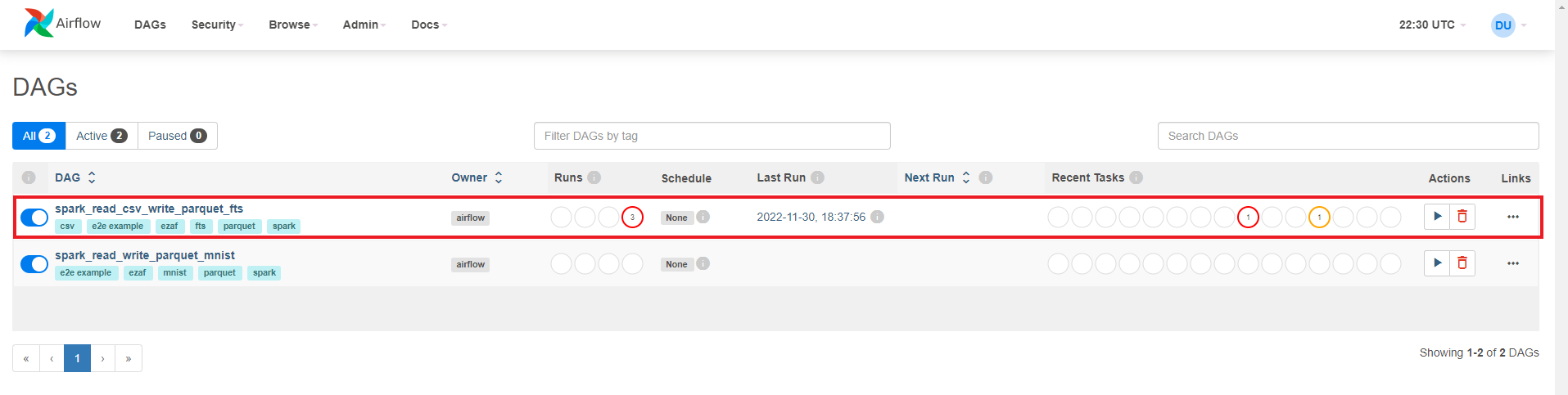

- In Airflow, verify that you are on the DAGs screen.

- Click the <your-spark-application> DAG. For example:

- Click Code to view the DAG code.

- Click Graph to view the graphical representation of the DAG.

- Click Run (play button) and select Trigger DAG w/ config to specify the custom configuration.

- To run a DAG after making configuration changes, click Trigger.

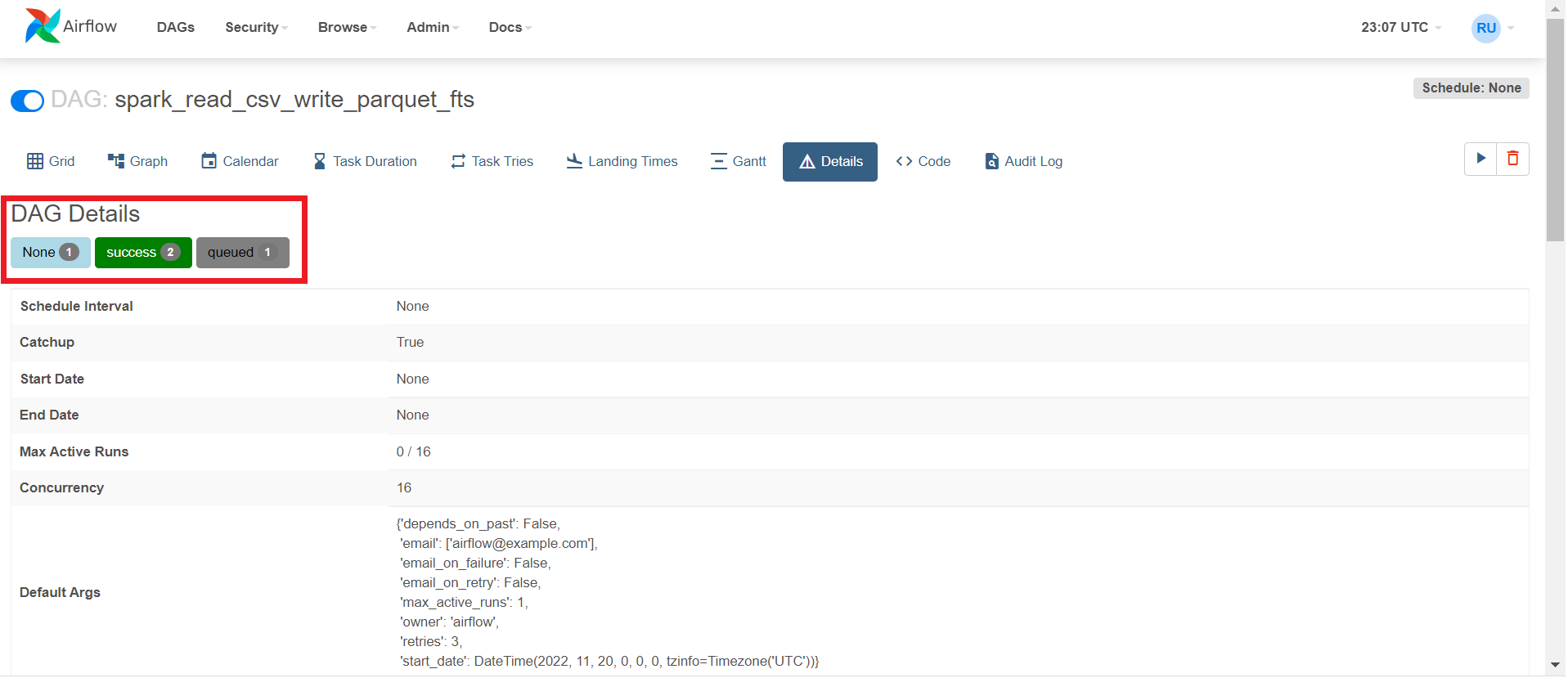

- To view details for the DAG, click Details. Under DAG Details, you can see green, red, and/or yellow buttons with the number of times the DAG ran successfully or failed.

- Click the Success button.

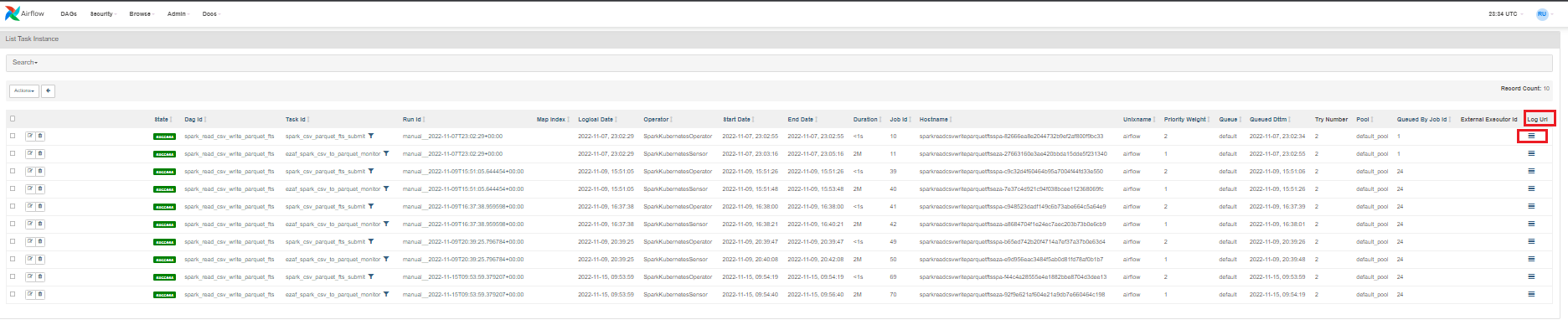

- To find your job, sort by End Date to see the latest jobs that have run, and then scroll to the right and click the log icon under Log URL for that run. Note that jobs run with the configuration:

Conf "username":"your_username"

When running Spark applications using Airflow, you can see the logs.

IMPORTANT: The cluster clears the logs that result from the DAG runs. The duration after which the cluster clears the logs depends on the Airflow task, cluster configuration, and policy.
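The Trigger DAG w/ config step above can also be expressed as a request against the Airflow 2.x stable REST API, which accepts a `conf` object when creating a DAG run. The following is a minimal sketch, not the product's documented method: it assumes that the REST API is enabled and reachable in your deployment, and the host name, DAG ID, and the helper `build_trigger_request` are hypothetical. Only the `username` key comes from the configuration shown above. The sketch builds the request without sending it, and authentication is intentionally left out because it depends on your cluster.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def build_trigger_request(
    base_url: str,
    dag_id: str,
    username: str,
    extra_conf: Optional[dict] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that triggers a DAG run.

    Assumption: the Airflow 2.x stable REST API endpoint
    POST /api/v1/dags/{dag_id}/dagRuns is available on base_url.
    """
    # Start from any extra settings, then set the "username" key
    # that the documented run configuration uses.
    conf = dict(extra_conf or {})
    conf["username"] = username

    # The API expects the custom configuration under the "conf" field.
    body = json.dumps({"conf": conf}).encode("utf-8")

    # Quote the DAG ID so that unusual characters cannot break the URL path.
    safe_dag_id = urllib.parse.quote(dag_id, safe="")
    url = f"{base_url.rstrip('/')}/api/v1/dags/{safe_dag_id}/dagRuns"

    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    # Hypothetical host and DAG ID, for illustration only.
    request = build_trigger_request(
        "https://airflow.example.com", "spark_pi", "your_username"
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

To send the request, you would add whatever credentials your cluster requires and pass the object to `urllib.request.urlopen`. The resulting run then appears on the DAGs screen in the same way as a run triggered from the UI.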

Results

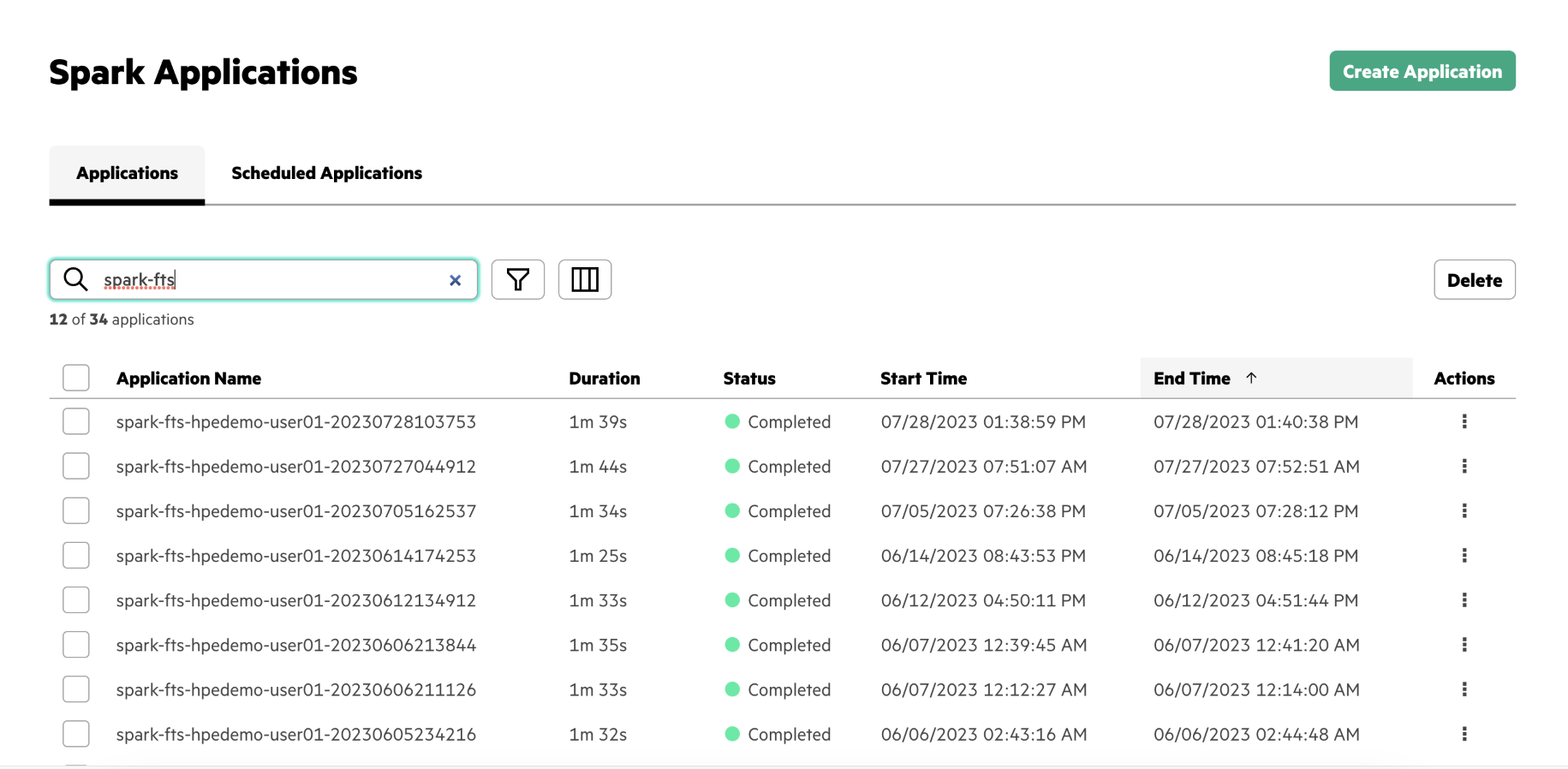

After you trigger the DAG, you can view the Spark application on the Spark Applications screen.

To view the Spark application, go to Analytics > Spark Applications.

Alternatively, go to Tools & Frameworks and click the Analytics tab. On the Analytics tab, select the Spark tile and click Open.