Expanding the Cluster

Describes how to add user-provided hosts to the management cluster to increase resource capacity, and how to expand the cluster to include the new hosts.

When applications do not have enough resources to run, the system raises an alarm to alert users. In such cases, the HPE Ezmeral Unified Analytics Software administrator and the system administrator can work together to add user-provided hosts to the pool of machines for the management cluster (control plane nodes) and the workload cluster. This increases the processing capacity of the cluster.

The following steps outline the cluster expansion process:

- An application triggers an alert to users that it does not have sufficient resources to run.

- Users contact the system administrator to request additional resources (that is, additional user-provided hosts for the management cluster).

- A system administrator adds user-provided hosts to the host pool, as described in the section Adding User-Provided Hosts to the Host Pool.

- After the system administrator adds user-provided hosts to the host pool, the HPE Ezmeral Unified Analytics Software administrator signs in to the HPE Ezmeral Unified Analytics Software UI and adds the new hosts to the cluster (expands the cluster), as described in the section Expanding the Cluster (Adding New Hosts to the Cluster).

Adding User-Provided Hosts to the Host Pool

This section describes how to add control plane hosts and workload hosts to the host pool (ezfabric-host-pool) through the ezfab-addhost.sh script.

After a system administrator completes the steps in this section to add hosts to the host pool, the HPE Ezmeral Unified Analytics Software administrator can complete the steps to add the hosts to the cluster through the HPE Ezmeral Unified Analytics Software UI, as described in the next section, Expanding the Cluster (Adding New Hosts to the Cluster).

- You can only add user-provided hosts to the cluster. User-provided hosts are machines that meet the installation prerequisites, as described in Installation Prerequisites.

- If you want to use the high-availability (HA) feature when you expand the cluster, note that HA requires three master nodes. You must add two hosts to the ezfabric-host-pool with the controlplane role.

- If you want to increase the vCPU or vGPU resources when you expand the cluster, you must add worker hosts or GPU hosts with enough resources (vCPU or vGPU) to the ezfabric-host-pool with the worker role.

To add hosts to the ezfabric-host-pool, complete the following steps:

1. From a CLI, sign in to the HPE Ezmeral Coordinator host.

2. Download the ezfab-addhost-tool-1-4-x.tgz file from https://github.com/HPEEzmeral/troubleshooting/releases/download/v1.4.0/ezfab-addhost-tool-1-4-x.tgz. Use one of the following commands to download the file:

       curl -L -O https://github.com/HPEEzmeral/troubleshooting/releases/download/v1.4.0/ezfab-addhost-tool-1-4-x.tgz

       wget https://github.com/HPEEzmeral/troubleshooting/releases/download/v1.4.0/ezfab-addhost-tool-1-4-x.tgz

3. Untar the ezfab-addhost-tool-1-4-x.tgz file:

       tar -xzvf ezfab-addhost-tool-1-4-x.tgz

4. Go to the ezfab-addhost-tool directory and view its contents:

       cd ezfab-addhost-tool
       ls -al

   The command returns results similar to the following:

       total 50504
       drwxr-xr-x. 2 501  games      149 Feb  2 09:57 .
       dr-xr-x---. 9 root root      4096 Feb  2 16:19 ..
       -rw-r--r--. 1 501  games     1211 Jan 26 18:16 controlplane_input_template.yaml
       -rwxr-xr-x. 1 501  games     2687 Feb 22 10:54 ezfab-addhost.sh
       -rwxr-xr-x. 1 501  games 51695616 Jan 26 14:05 ezfabricctl
       -rw-r--r--. 1 501  games      360 Jan 26 18:24 input_example.yaml
       -rw-r--r--. 1 501  games     1205 Jan 26 18:17 worker_input_template.yaml

   TIP: You should see ezfab-addhost.sh listed, as well as three YAML files (controlplane_input_template.yaml, worker_input_template.yaml, and input_example.yaml) that you can use as guides. Use the cat command to view the YAML files, for example:

       cat controlplane_input_template.yaml

5. Using the provided YAML files as a guide, create a YAML file that includes the information for the hosts you want to add.

   TIP: The only accepted role values are 'controlplane' and 'worker'. You do not have to include any additional labels to add a new vCPU or GPU node.

6. Run the ezfab-addhost.sh script without any options:

       ./ezfab-addhost.sh

   When you run the script without options, the system returns the supported options:

       Check OS ...
       Parse options ...
       Please provide the input yaml file that includes the hosts info
       USAGE: ./ezfab-addhost.sh <options>
       Options:
         -i/--input: the input yaml file that includes the hosts info.
         -k/--kubeconfig: the coordinator's kubeconfig file(optional).

7. Run the ezfab-addhost.sh script with the -i and -k options, as shown:

       ./ezfab-addhost.sh -i <your-input-file>.yaml -k ~/.kube/config

8. After the ezfab-addhost.sh script successfully completes, run the following command to verify that the new hosts were added to the ezfabric-host-pool:

       kubectl get ezph -A

   Example Output

   The following example shows output after a GPU node is added to the host pool:

       kubectl get ezph -A
       NAMESPACE            NAME             CLUSTER NAMESPACE   CLUSTER NAME   STATUS   VCPUS   UNUSED DISKS   GPUS
       ezfabric-host-pool   10.xxx.yyy.213   ezkf-mgmt           ezkf-mgmt      Ready    16      2              0
       ezfabric-host-pool   10.xxx.yyy.214   ezua160             ezua160        Ready    16      2              0
       ezfabric-host-pool   10.xxx.yyy.215   ezua160             ezua160        Ready    16      2              0
       ezfabric-host-pool   10.xxx.yyy.216   ezua160             ezua160        Ready    16      2              0
       ezfabric-host-pool   10.xxx.yyy.217   ezua160             ezua160        Ready    16      2              0
       ezfabric-host-pool   10.xxx.yyy.218   ezua160             ezua160        Ready    16      2              0
       ezfabric-host-pool   10.xxx.yyy.219   ezua160             ezua160        Ready    16      2              0
       ezfabric-host-pool   10.xxx.yyy.220   ezua160             ezua160        Ready    16      2              0
       ezfabric-host-pool   10.xxx.yyy.25                                       Ready    48      3              1

   TIP: New hosts listed in the output are not associated with a cluster name or namespace. This is expected, as new hosts remain in the host pool and are not associated with a cluster until the hosts are added to the cluster, as described in Expanding the Cluster (Adding New Hosts to the Cluster).

9. If the ezfab-addhost.sh script fails, check the logs in the log directory.

   - If the failure is due to a wrong username or password, or to a transient error, run the following command to delete the hosts in the error state, and then retry:

         ./ezfabricctl poolhost destroy --input $INPUT_YAML_FILE --kubeconfig $KUBECONFIG_FILE

     Note that INPUT_YAML_FILE is different from the YAML file in step 7, as it includes only the failed hosts. After the failed hosts have been deleted, modify the <your-input-file>.yaml from step 7 and then complete step 7 again to re-add the failed hosts.

   - Do not run ./ezfabricctl poolhost destroy after you expand the cluster.

10. Go to the Expanding the Cluster (Adding New Hosts to the Cluster) section below and follow the steps to trigger the cluster expansion from the HPE Ezmeral Unified Analytics Software UI.
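If you add hosts to the pool regularly, you might wrap the download, untar, run, and verification commands above in a small script. The following Bash sketch is illustrative only: the add_hosts_to_pool and run functions and the DRY_RUN variable are hypothetical names, not part of the ezfab-addhost tool. It assumes that you have already created your input YAML file and that ~/.kube/config is the coordinator's kubeconfig unless you pass another path.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the ezfab-addhost tool) that automates the
# download, untar, run, and verify commands on the HPE Ezmeral Coordinator host.
# Set DRY_RUN=1 to print each command instead of executing it.

TOOL_URL="https://github.com/HPEEzmeral/troubleshooting/releases/download/v1.4.0/ezfab-addhost-tool-1-4-x.tgz"
TOOL_TGZ="ezfab-addhost-tool-1-4-x.tgz"
TOOL_DIR="ezfab-addhost-tool"

# Echo the command in dry-run mode; otherwise execute it as given.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: add_hosts_to_pool <your-input-file>.yaml [kubeconfig]
add_hosts_to_pool() {
  if [ $# -lt 1 ]; then
    echo "usage: add_hosts_to_pool <your-input-file>.yaml [kubeconfig]" >&2
    return 2
  fi
  local input="$1"
  local kubeconfig="${2:-$HOME/.kube/config}"

  # The script runs from the tool directory, so make the input path absolute.
  case "$input" in
    /*) ;;
    *) input="$PWD/$input" ;;
  esac

  run curl -L -O "$TOOL_URL" || return 1
  run tar -xzvf "$TOOL_TGZ" || return 1

  # Use a subshell so the caller's working directory does not change.
  (
    run cd "$TOOL_DIR" || exit 1
    run ./ezfab-addhost.sh -i "$input" -k "$kubeconfig"
  ) || return 1

  # New hosts should appear in ezfabric-host-pool with no cluster name yet.
  run kubectl get ezph -A
}
```

For example, DRY_RUN=1 add_hosts_to_pool my-hosts.yaml prints the commands that would run; without DRY_RUN=1, the same call executes them. The sketch stops at the first failing command and does not clean up failed hosts; handle those with ./ezfabricctl poolhost destroy as described above.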

Expanding the Cluster (Adding New Hosts to the Cluster)

This section describes how the HPE Ezmeral Unified Analytics Software administrator adds new hosts to the HPE Ezmeral Unified Analytics Software cluster through the HPE Ezmeral Unified Analytics Software UI.

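To list the Expand custom resources (CRs) and delete a specific Expand CR, run the following commands:

    # Lists the Expand CR names and namespaces
    kubectl get ezkfopsexpand -A
    kubectl delete ezkfopsexpand -n <expand_CR_namespace> <expand_CR_name>

To expand the cluster, complete the following steps: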

- In the left navigation bar, select Administration > Settings.

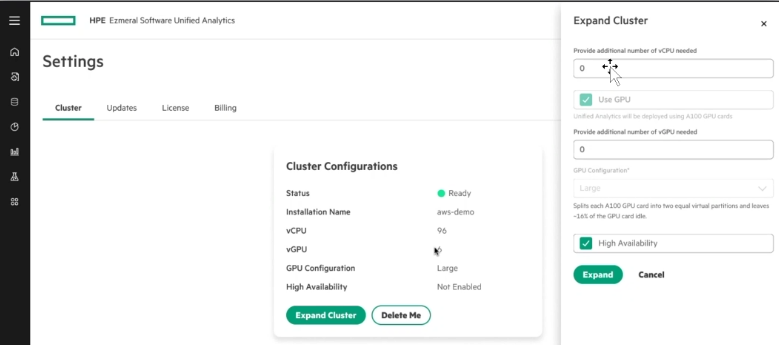

- On the Cluster tab, select Expand Cluster.

- In the Expand Cluster drawer that opens, enter the following information:

  - Number of additional vCPU to allocate. For example, if the cluster currently has 96 vCPU and you add 4 vCPU, the cluster has a total of 100 vCPU.

  - Select Use GPU if you want to use GPU and it is not already selected. If Use GPU was selected during installation of HPE Ezmeral Unified Analytics Software, this option cannot be disabled and stays selected by default.

  - Indicate the additional number of vGPU to allocate.

  - For GPU configuration, if a vGPU size was selected during HPE Ezmeral Unified Analytics Software installation, you cannot change the size. However, if no vGPU size was selected during installation, you can select a size now. For additional information, see GPU Support.

  - If HA was selected during HPE Ezmeral Unified Analytics Software installation, you cannot disable it. If it was not selected during installation, you can select it now. Currently, HA is available for the workload cluster only. You cannot set HA for the management cluster.

- Click Expand.
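Before you enter values in the Expand Cluster drawer, it can help to know how much capacity the new, not-yet-assigned hosts add. The following Bash sketch is a hypothetical helper (summarize_unassigned_hosts is not an HPE command) that totals the VCPUS and GPUS columns of kubectl get ezph -A for hosts that have no cluster namespace or cluster name. It assumes the default column layout shown in the example output earlier in this topic, where the cluster columns of unassigned hosts are left blank.

```shell
#!/usr/bin/env bash
# Hypothetical helper: total the vCPUs and GPUs of hosts that are in the host
# pool but not yet assigned to a cluster. Pipe `kubectl get ezph -A` into it.
# Assumes the column layout from the example output:
#   NAMESPACE | NAME | CLUSTER NAMESPACE | CLUSTER NAME | STATUS | VCPUS | UNUSED DISKS | GPUS
# Unassigned hosts leave both cluster columns blank, so their rows split into
# 6 whitespace-separated fields instead of 8.
summarize_unassigned_hosts() {
  awk '
    NR == 1 { next }          # skip the header row
    NF == 6 {                 # host with no cluster namespace or cluster name
      hosts++
      vcpus += $4             # VCPUS column
      gpus += $6              # GPUS column
    }
    END { printf "unassigned_hosts=%d vcpus=%d gpus=%d\n", hosts, vcpus, gpus }
  '
}
```

For the example output shown earlier, only 10.xxx.yyy.25 is unassigned, so kubectl get ezph -A | summarize_unassigned_hosts would report 1 host, 48 vCPUs, and 1 GPU. The sketch reports raw host totals and does not account for any capacity the platform might reserve, so treat the numbers as an upper bound. If your kubectl prints a placeholder such as <none> in empty cells, adjust the field-count check.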

Configuring HPE MLDE for Added GPU Nodes

If you add GPU nodes to the cluster after installing HPE MLDE, you must perform additional steps to ensure HPE MLDE works on these nodes. For details, see Configuring HPE MLDE for Added GPU Nodes.