Configuring a Spark Application to Access Data in an External S3 Data Source through the S3 Proxy Layer

Describes how to configure a Spark application to connect to an external S3 data source through the S3 proxy layer in HPE Ezmeral Unified Analytics Software.

To connect, provide the following information in the sparkConf section of the Spark application YAML file:

- Data source name

- Endpoint URL

- Secret (to securely pass configuration values)

- Bucket that you want the client to access

Once connected, the Spark application can:

- Read and download files in a bucket

- Upload files to a bucket

- Create buckets
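As a rough illustration of the read and upload operations listed above, the following sketch builds `s3a://` paths and passes them to a Spark session. The helper names (`s3a_uri`, `read_csv`, `write_csv`), the CSV format, and any bucket or key names are assumptions made for illustration and do not come from the product documentation. The `spark` argument is assumed to be an existing SparkSession whose sparkConf has already been set up for the S3 proxy endpoint.

```python
def s3a_uri(bucket: str, key: str = "") -> str:
    """Build an s3a:// URI for a bucket and an optional object key."""
    if not bucket or "/" in bucket:
        raise ValueError(f"invalid bucket name: {bucket!r}")
    return f"s3a://{bucket}/{key.lstrip('/')}"


def read_csv(spark, bucket: str, key: str):
    """Read a CSV object from the bucket into a DataFrame (assumes a header row)."""
    return spark.read.csv(s3a_uri(bucket, key), header=True)


def write_csv(df, bucket: str, key: str) -> None:
    """Upload a DataFrame to the bucket as CSV, replacing existing output."""
    df.write.mode("overwrite").csv(s3a_uri(bucket, key), header=True)
```

In a Spark job, a call such as `read_csv(spark, "<bucket>", "<path/to/file.csv>")` would then resolve against whichever endpoint the application is configured to use.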

Getting the Data Source Name and S3 Proxy Endpoint URL

To get the data source name and S3 proxy endpoint URL:

- Sign in to HPE Ezmeral Unified Analytics Software.

- In the left navigation bar, select Data Engineering > Data Sources.

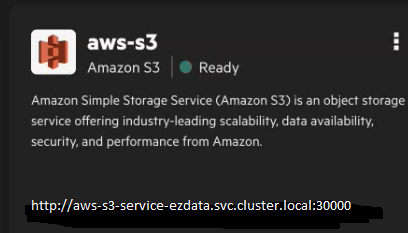

- On the Data Sources page, find the tile for the S3 data source that you want the Spark application to connect to.

The following image shows an example of a tile for an AWS S3 data source with the name (aws-s3) and the endpoint URL (http://aws-s3-service-ezdata.svc.cluster.local:30000):

NOTE: By default, a local-s3 Ezmeral Data Fabric tile also displays on the screen. This Ezmeral Data Fabric version of S3 is a local S3 version used internally by HPE Ezmeral Unified Analytics Software. Do not connect to this data source.

- Note the data source name and endpoint URL and then use them in the Spark configuration.
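Before copying the endpoint URL into the Spark configuration, it can help to check that the value you noted is a well-formed URL. The sketch below is one possible check using only the Python standard library; the function name `check_endpoint` is hypothetical, and the rule that the URL must use http or https and include a host is an assumption, not a documented product requirement.

```python
from typing import Optional, Tuple
from urllib.parse import urlparse


def check_endpoint(url: str) -> Tuple[str, Optional[int]]:
    """Return (host, port) for an endpoint URL, or raise ValueError."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https"):
        raise ValueError(f"expected an http(s) URL, got: {url!r}")
    if not parsed.hostname:
        raise ValueError(f"endpoint has no host: {url!r}")
    # parsed.port is None when the URL does not specify a port
    return parsed.hostname, parsed.port


# Example endpoint URL taken from the tile described above
host, port = check_endpoint("http://aws-s3-service-ezdata.svc.cluster.local:30000")
print(host, port)
```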

Configuring Spark

- If you used the HPE-Curated Spark image to create the Spark application, see HPE-Curated Spark.

- If you used the Spark OSS image to create the Spark application, see Spark OSS.

HPE-Curated Spark

Use these instructions if you are configuring a Spark application using the HPE-Curated Spark image.

Using the EzSparkAWSCredentialProvider option in the configuration automatically generates the secret for you.

    sparkConf:
      spark.hadoop.fs.s3a.endpoint: <S3-endpoint>
      spark.hadoop.fs.s3a.connection.ssl.enabled: "true"
      spark.hadoop.fs.s3a.impl: org.apache.hadoop.fs.s3a.S3AFileSystem
      spark.hadoop.fs.s3a.aws.credentials.provider: "org.apache.spark.s3a.EzSparkAWSCredentialProvider"
      spark.hadoop.fs.s3a.path.style.access: "true"

(AWS S3 Only) If you are connecting the Spark application to an AWS S3 data source, you must also include the following options in the sparkConf section:

    spark.driver.extraJavaOptions: -Djavax.net.ssl.trustStore=/etc/pki/java/cacerts
    spark.executor.extraJavaOptions: -Djavax.net.ssl.trustStore=/etc/pki/java/cacerts

Spark OSS

Use these instructions if you are configuring a Spark application using the Spark OSS image.

- Generate a secret.

  Use either of the following methods to generate a secret:

  - Apply a YAML

    Use a notebook to create a Kubernetes secret with Base64-encoded values for the AWS_ACCESS_KEY_ID (username) and AWS_SECRET_ACCESS_KEY (password). See Creating and Managing Notebook Servers. For example, run kubectl apply -f for the following YAML:

        apiVersion: v1
        kind: Secret
        data:
          AWS_ACCESS_KEY_ID: <Base64-encoded value; example: dXNlcg== >
          AWS_SECRET_ACCESS_KEY: <Base64-encoded value; example: cGFzc3dvcmQ= >
        metadata:
          name: <K8s-secret-name-for-S3>
        type: Opaque

  - Run a Script in a Notebook

    Run the following script in a notebook to generate the secret. You can also access a sample script from your notebook server in the shared/ezua-tutorials/Data-Analytics/ directory.

        import os
        import subprocess

        def deploy_s3_secret(namespace, spark_secret):
            try:
                # Delete any existing secret first; check=False ignores a "not found" failure
                subprocess.run(['kubectl', 'delete', 'secret', spark_secret, '-n', namespace], check=False)
                # Create the secret from the spark-defaults.conf file written below
                subprocess.run(['kubectl', 'create', 'secret', 'generic', spark_secret, '-n', namespace, '--from-file=spark-defaults.conf'], check=True)
                print("Secret creation successful!")
            except subprocess.CalledProcessError as e:
                print(f"Secret creation failed. Error: {e}")

        s3_access_data = "spark.hadoop.fs.s3a.access.key EXAMPLE_ACCESS_KEY"
        s3_secret_data = "spark.hadoop.fs.s3a.secret.key EXAMPLE_SECRET_KEY"
        # The access key is the AUTH_TOKEN from the notebook environment; the secret key is the literal "s3"
        s3_data = s3_access_data.replace('EXAMPLE_ACCESS_KEY', os.environ['AUTH_TOKEN'])
        s3_data += "\n" + s3_secret_data.replace("EXAMPLE_SECRET_KEY", "s3")
        namespace = os.environ['USER']
        spark_secret = "spark-s3-secret"

        # Save data to a file spark-defaults.conf
        with open('spark-defaults.conf', 'w') as file:
            file.write(s3_data)

        # Call the function to deploy the Kubernetes secret
        deploy_s3_secret(namespace, spark_secret)
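For the Apply a YAML method above, the values under data must be Base64-encoded. A minimal sketch of producing those values with the Python standard library follows; the helper name `b64` is hypothetical, and the inputs `user` and `password` are placeholders that correspond to the example values shown in the YAML.

```python
import base64


def b64(value: str) -> str:
    """Base64-encode a UTF-8 string for use in a Kubernetes Secret data field."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


print(b64("user"))      # dXNlcg==
print(b64("password"))  # cGFzc3dvcmQ=
```

Base64 is an encoding, not encryption, so the resulting YAML file should be handled as carefully as the plain credentials.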

- Configure the Spark application.

  The following example demonstrates how to add the required fields to the sparkConf section of the Spark application YAML file:

      sparkConf:
        spark.hadoop.fs.s3a.connection.ssl.enabled: "true"
        spark.hadoop.fs.s3a.endpoint: <S3-endpoint>
        spark.hadoop.fs.s3a.impl: org.apache.hadoop.fs.s3a.S3AFileSystem
        spark.hadoop.fs.s3a.path.style.access: "true"

  (AWS S3 Only) If you are connecting the Spark application to an AWS S3 data source, you must also include the following options in the sparkConf section:

      spark.driver.extraJavaOptions: -Djavax.net.ssl.trustStore=/etc/pki/java/cacerts
      spark.executor.extraJavaOptions: -Djavax.net.ssl.trustStore=/etc/pki/java/cacerts
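If you generate Spark application YAML files from a script, the sparkConf options shown above for the Spark OSS image could be assembled along the following lines. This is only a sketch: the function names `build_spark_conf` and `to_yaml`, the `aws_s3` flag, and the choice to quote every value are assumptions for illustration, and the output is a fragment to paste under the application spec, not a complete manifest.

```python
import json


def build_spark_conf(endpoint: str, aws_s3: bool = False) -> dict:
    """Collect the S3A options from this page for a given proxy endpoint."""
    conf = {
        "spark.hadoop.fs.s3a.connection.ssl.enabled": "true",
        "spark.hadoop.fs.s3a.endpoint": endpoint,
        "spark.hadoop.fs.s3a.impl": "org.apache.hadoop.fs.s3a.S3AFileSystem",
        "spark.hadoop.fs.s3a.path.style.access": "true",
    }
    if aws_s3:
        # Extra options that this page lists for AWS S3 data sources only
        trust_store = "-Djavax.net.ssl.trustStore=/etc/pki/java/cacerts"
        conf["spark.driver.extraJavaOptions"] = trust_store
        conf["spark.executor.extraJavaOptions"] = trust_store
    return conf


def to_yaml(conf: dict) -> str:
    """Render the options as a sparkConf YAML fragment with quoted values."""
    lines = ["sparkConf:"]
    for key, value in conf.items():
        # json.dumps yields a double-quoted string that is also valid YAML
        lines.append(f"  {key}: {json.dumps(value)}")
    return "\n".join(lines)


print(to_yaml(build_spark_conf("<S3-endpoint>", aws_s3=True)))
```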