NVIDIA GPU Monitoring

HPE Ezmeral Runtime Enterprise includes an hpecp-nvidiagpubeat add-on that is deployed by default on non-imported Kubernetes clusters. The add-on deploys the nvidiagpubeat DaemonSet, which runs one nvidiagpubeat collector pod on each worker node that has one or more NVIDIA GPUs. Each collector pod collects GPU metrics per GPU device and per worker node, such as GPU utilization, GPU memory usage, and GPU temperature.
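To see which worker nodes are running a collector pod, you can list the nvidiagpubeat pods together with the nodes they are scheduled on. The following is a minimal sketch. It assumes that the DaemonSet runs in the kube-system namespace (the namespace used by the log commands on this page) and that its pods follow the standard DaemonSet naming pattern `nvidiagpubeat-<suffix>`. The function name `list_gpubeat_pods` is hypothetical.

```shell
# Hypothetical helper: print each nvidiagpubeat collector pod and the node it runs on.
# Assumption: the pods live in kube-system and are named nvidiagpubeat-<suffix>.
list_gpubeat_pods() {
  # custom-columns avoids depending on the column layout of "-o wide" output.
  kubectl -n kube-system get pods --no-headers \
    -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName |
    awk '$1 ~ /^nvidiagpubeat-/ { print $1, $2 }'
}
```

Running `list_gpubeat_pods` should print one line per GPU worker node. Non-GPU nodes, such as the master node, should not appear in the output.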

For more information about nvidiagpubeat, see nvidiagpubeat.

GPU Charts and Statistics

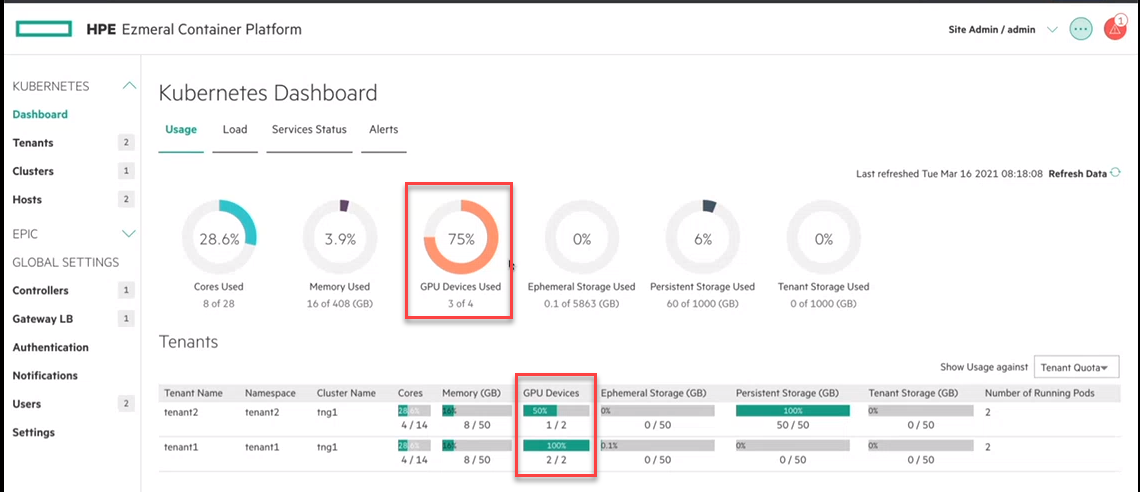

HPE Ezmeral Runtime Enterprise displays GPU metrics on the Usage tab of the Kubernetes Dashboard. The Usage tab shows the number of allocated GPUs compared with the total number of available GPUs or, for each tenant, with the tenant's GPU quota.

For cluster administrators and Platform Administrators, the tab shows the GPU devices used system-wide. The tenant table shows the GPU devices in use per tenant:

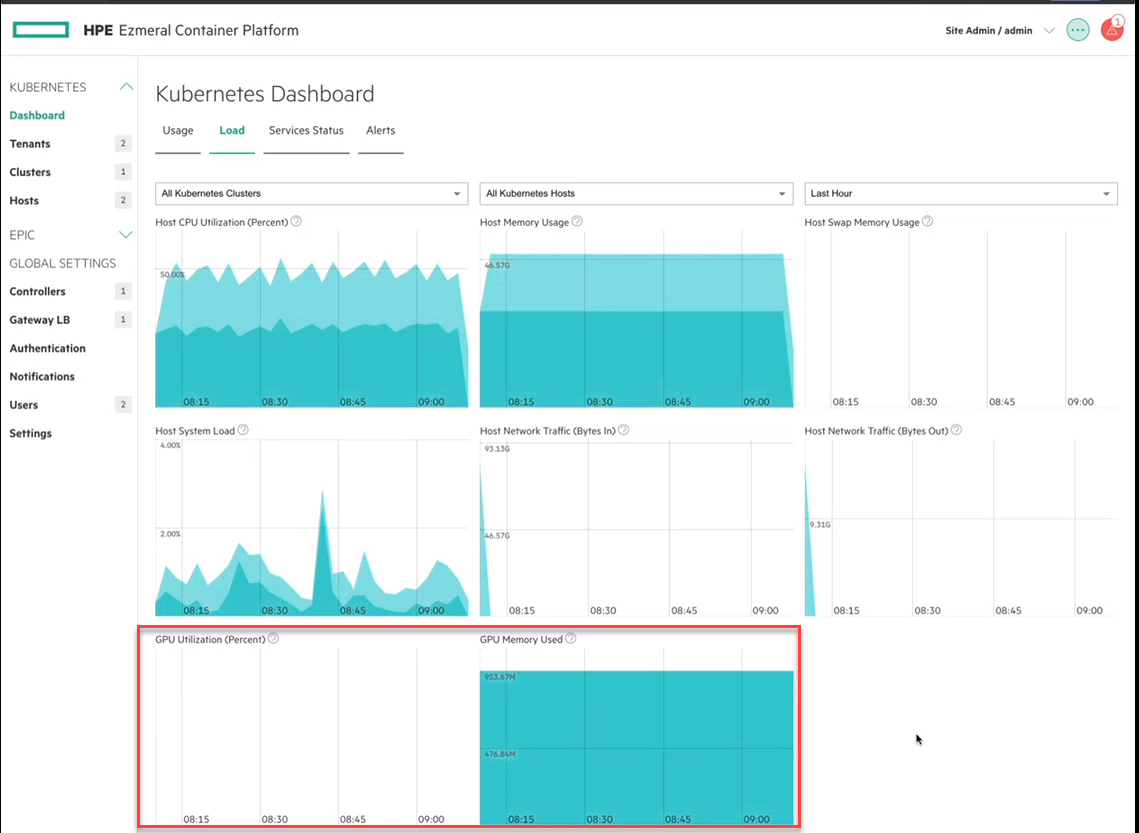

The tab also shows graphs for GPU utilization and GPU memory usage.

nvidiagpubeat Add-On Installation

The hpecp-nvidiagpubeat add-on is a required system add-on and is deployed by default on Kubernetes clusters.

On each host that contains GPUs, you must install an OS-compatible GPU driver that supports your GPU model. You must install the driver before adding the GPU host to HPE Ezmeral Runtime Enterprise. For installation instructions, see GPU Driver Installation.
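Before you add a GPU host, you might want to confirm that the driver is working. NVIDIA driver packages normally install the `nvidia-smi` utility. Its `-L` option lists the GPUs that the driver detects, one line per device. The following is a minimal sketch, assuming `nvidia-smi` is on the host's PATH. The function name `check_gpu_driver` is hypothetical, and this check is not part of HPE Ezmeral Runtime Enterprise.

```shell
# Hypothetical pre-check: succeed only if nvidia-smi exists and reports at least one GPU.
check_gpu_driver() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found: the NVIDIA driver may not be installed" >&2
    return 1
  fi
  # nvidia-smi -L prints one "GPU <index>: <model> (UUID: ...)" line per device.
  gpu_count=$(nvidia-smi -L 2>/dev/null | grep -c '^GPU ')
  if [ "$gpu_count" -eq 0 ]; then
    echo "nvidia-smi found, but no GPUs were listed" >&2
    return 1
  fi
  echo "Detected $gpu_count GPU(s)"
}
```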

The number of GPU hosts that you add determines the number of collector pods that are created and deployed on the cluster. For example, if your Kubernetes cluster contains one master node (non-GPU machine) and one worker node (GPU machine), one nvidiagpubeat pod is deployed.
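To check this one-pod-per-GPU-host relationship on a running cluster, you can compare the number of nodes that advertise GPUs with the number of collector pods. The following is a minimal sketch. It assumes that GPU nodes expose the `nvidia.com/gpu` allocatable resource, which is the name used by the NVIDIA device plugin. It also assumes that the collector pods are in kube-system and have names that start with `nvidiagpubeat-`. The function name `check_gpubeat_coverage` is hypothetical.

```shell
# Hypothetical check: compare the number of GPU nodes with the number of nvidiagpubeat pods.
# Returns 0 if the counts match, non-zero otherwise.
check_gpubeat_coverage() {
  # Nodes without the nvidia.com/gpu resource show "<none>" in custom-columns output.
  gpu_nodes=$(kubectl get nodes --no-headers \
      -o 'custom-columns=NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu' |
    awk '$2 != "<none>" && $2 + 0 > 0 { n++ } END { print n + 0 }')
  collector_pods=$(kubectl -n kube-system get pods --no-headers \
      -o custom-columns=NAME:.metadata.name |
    grep -c '^nvidiagpubeat-')
  echo "GPU nodes: $gpu_nodes, nvidiagpubeat pods: $collector_pods"
  [ "$gpu_nodes" -eq "$collector_pods" ]
}
```

For the example cluster above, with one non-GPU master node and one GPU worker node, both counts are expected to be 1.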

nvidiagpubeat and Imported Clusters

The hpecp-nvidiagpubeat add-on is not supported for imported clusters.

Logs for the nvidiagpubeat Pods

To view the logs for an nvidiagpubeat pod, run:

kubectl -n kube-system logs <nvidiagpubeat-pod-name>

To save the logs to a file, run:

kubectl -n kube-system logs <nvidiagpubeat-pod-name> > <nvidiagpubeat-pod-name>.log
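If the cluster has several GPU worker nodes, you can save the logs of every collector pod in one pass by looping over the pods and running the same `kubectl logs` command for each one. The following is a minimal sketch, assuming the pods are in kube-system and are named `nvidiagpubeat-<suffix>`. The function name `save_gpubeat_logs` is hypothetical.

```shell
# Hypothetical helper: write the logs of every nvidiagpubeat pod to <pod-name>.log
# in the current directory.
save_gpubeat_logs() {
  kubectl -n kube-system get pods --no-headers -o custom-columns=NAME:.metadata.name |
    grep '^nvidiagpubeat-' |
    while read -r pod; do
      kubectl -n kube-system logs "$pod" > "$pod.log"
      echo "Saved $pod.log"
    done
}
```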