GPU Support for Ray

Describes how to enable GPU, configure the GPU resources, and disable GPU for Ray.

To submit GPU-accelerated jobs with Ray, sign in to HPE Ezmeral Unified Analytics Software as an administrator and enable GPU support in one of the following ways:

- Enabling GPU support during HPE Ezmeral Unified Analytics Software installation.

- Enabling GPU support after HPE Ezmeral Unified Analytics Software installation.

Enabling GPU Support During HPE Ezmeral Unified Analytics Software Installation

To enable the GPU for Ray during the HPE Ezmeral Unified Analytics Software installation, see GPU Support.

If you enabled GPU during the platform installation, you do not need to separately enable GPU for Ray. The platform installation automatically enables GPU for all applications and frameworks including Ray.

Enabling GPU Support and Configuring Resources After HPE Ezmeral Unified Analytics Software Installation

Listing the pods in the kuberay namespace shows the Ray operator pod and head pod:

    > kubectl -n kuberay get pod
    NAME                                READY   STATUS    RESTARTS   AGE
    kuberay-head-5c2jj                  2/2     Running   0          10m
    kuberay-operator-7b976fdb86-x5k4c   1/1     Running   0          10m

The operator pod creates the head pod and monitors the cluster. The head pod is the cluster master and generates additional small worker pods as required.

To enable GPU support for Ray after HPE Ezmeral Unified Analytics Software installation, follow these steps:

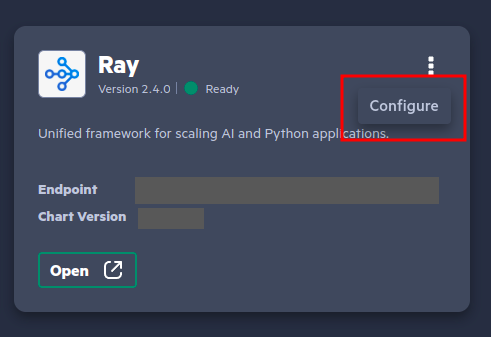

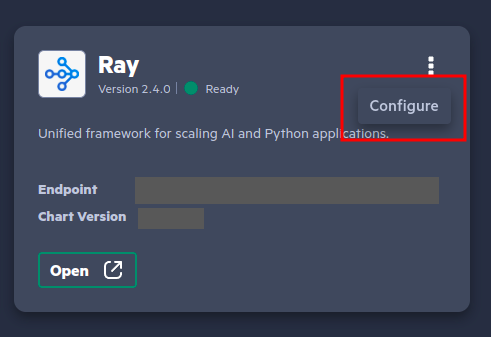

- Click the Tools & Frameworks icon on the left navigation bar. Navigate to the Ray tile under the Data Science tab.

- Click the three dots menu on the Ray tile and click Configure.

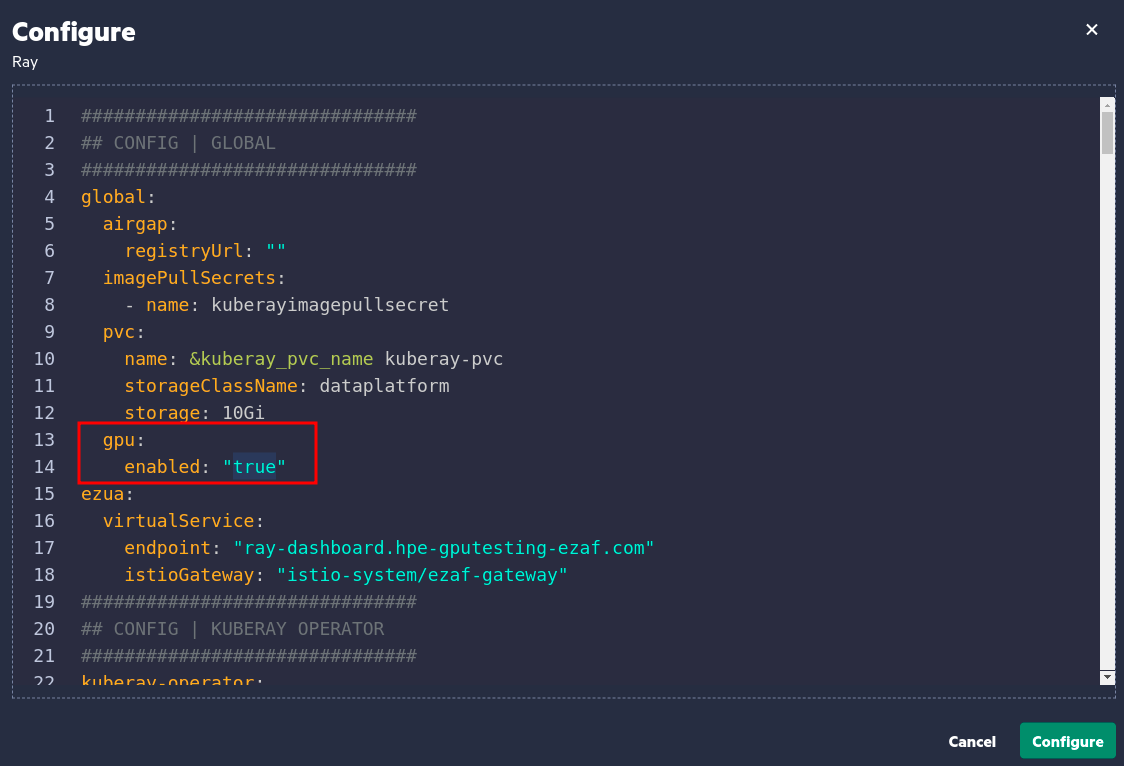

- Set the value of gpu.enabled to true.

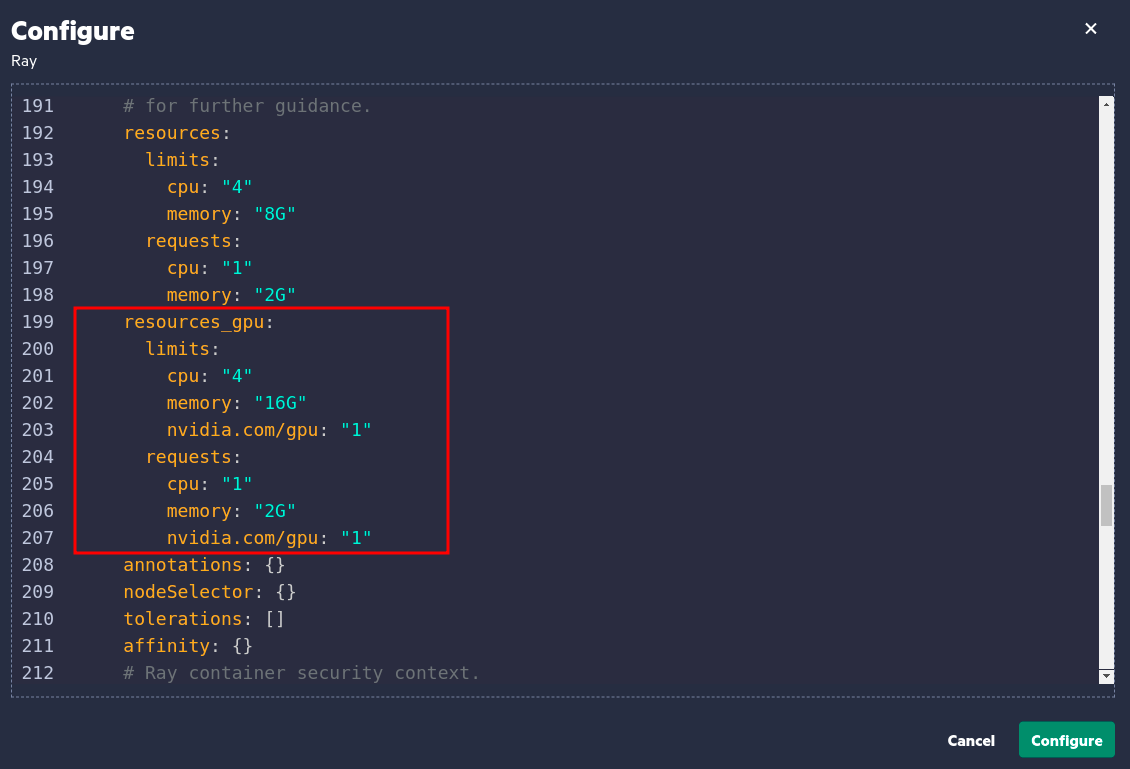

- (Optional) Modify the available resources as required by updating the values within the resources_gpu section.

  NOTE: With MIG configuration, only one GPU can be assigned per application. To learn more about what happens when you assign more than one GPU to the Ray cluster, see GPU. For details regarding GPU, see GPU Support.

- Click Configure.

Results:

    > kubectl -n kuberay get pod
    NAME                                READY   STATUS    RESTARTS   AGE
    kuberay-head-5c2jj                  2/2     Running   0          10m
    kuberay-operator-7b976fdb86-x5k4c   1/1     Running   0          10m
    kuberay-worker-smallgroup-xhhbq     1/1     Running   0          10m
    kuberay-worker-workergroup-rdptj    1/1     Running   0          13s   # New pod with GPU resources!

Unlike the worker-smallgroup pod, the worker-workergroup pod cannot be scaled using an autoscaler. When GPU-accelerated jobs are submitted, the worker-workergroup pod handles the workload. Simultaneously, Ray manages regular jobs by using the worker-smallgroup pod.
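The check described above (a worker-workergroup pod appears and is Running once GPU support is enabled) can be sketched in a few lines of Python. This is only an illustration: the helper names `parse_pods` and `gpu_worker_ready` are hypothetical, the sample text is copied from the listing above, and the parser assumes the plain whitespace-separated table that `kubectl get pod` prints by default.

```python
from typing import Dict, List

# Sample text copied from the listing above (trailing comment removed).
SAMPLE_OUTPUT = """\
NAME                                READY   STATUS    RESTARTS   AGE
kuberay-head-5c2jj                  2/2     Running   0          10m
kuberay-operator-7b976fdb86-x5k4c   1/1     Running   0          10m
kuberay-worker-smallgroup-xhhbq     1/1     Running   0          10m
kuberay-worker-workergroup-rdptj    1/1     Running   0          13s
"""


def parse_pods(text: str) -> List[Dict[str, str]]:
    """Turn `kubectl get pod` table text into one dict per pod row."""
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    # zip() stops at the shorter sequence, so extra trailing tokens are ignored.
    return [dict(zip(header, line.split())) for line in lines[1:]]


def gpu_worker_ready(pods: List[Dict[str, str]]) -> bool:
    """Return True if a worker-workergroup pod is Running with all containers ready."""
    for pod in pods:
        if "worker-workergroup" in pod["NAME"] and pod["STATUS"] == "Running":
            ready, total = pod["READY"].split("/")
            if ready == total:
                return True
    return False


print(gpu_worker_ready(parse_pods(SAMPLE_OUTPUT)))  # True for the listing above
```

In practice the text would come from running the kubectl command shown above rather than from a hard-coded string.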

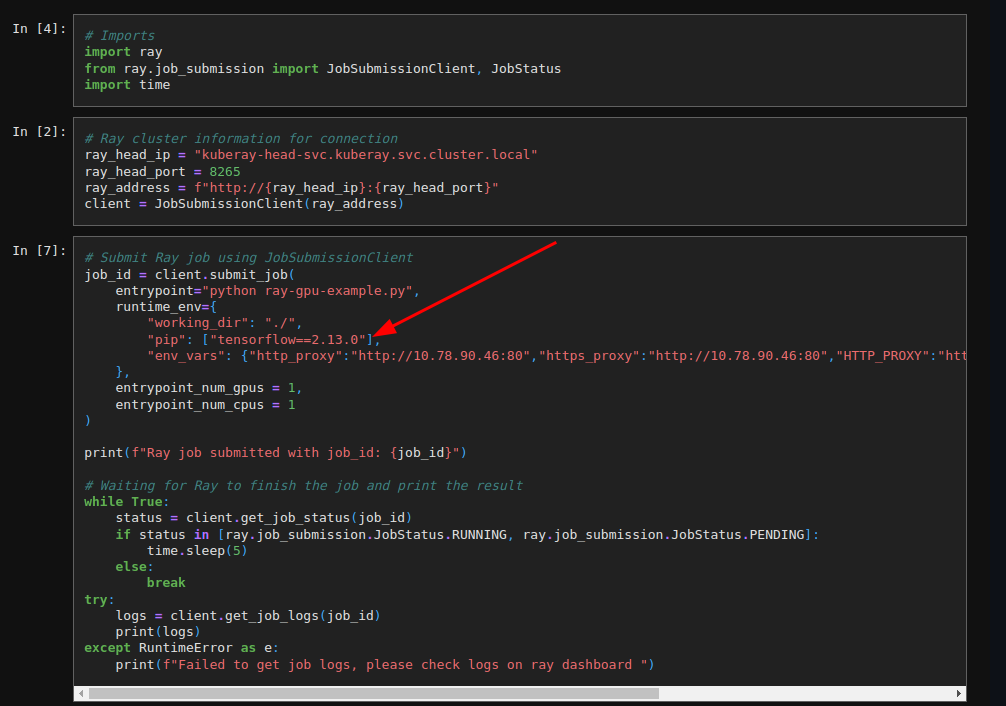

Submitting GPU-Accelerated Jobs to the Ray Cluster

@ray.remote(num_gpus=1)

def use_gpu():

print("ray.get_gpu_ids(): {}".format(ray.get_gpu_ids()))

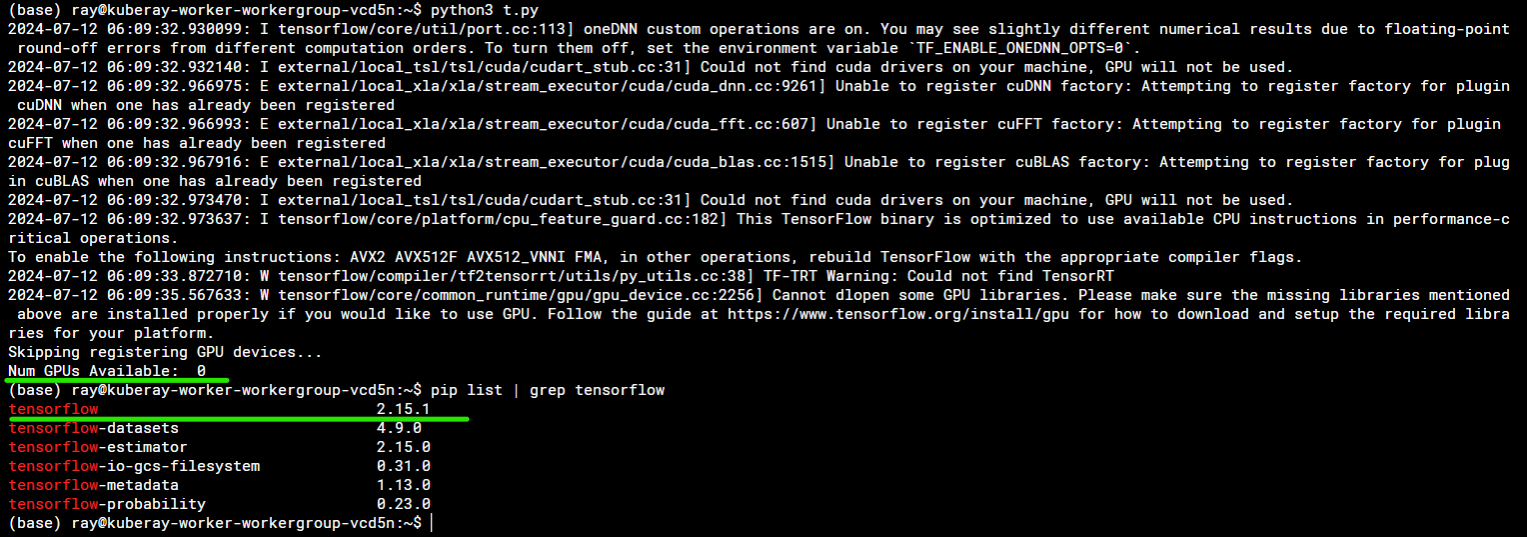

print("CUDA_VISIBLE_DEVICES: {}".format(os.environ["CUDA_VISIBLE_DEVICES"]))The function use_gpu does not use any GPUs directly. Instead, Ray

schedules it on a node with at least one GPU and allocates one GPU specifically for its run.

However, it is up to the function to utilize the GPU, which is typically done through an

external library such as TensorFlow.
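The snippet above defines the remote function but does not show it being invoked. The following is a minimal sketch of a driver, not a definitive implementation. It assumes that Ray is installed where the driver runs and that the environment already points at the Ray cluster (no cluster address is given here). The names `visible_gpu_ids` and `submit_use_gpu` are hypothetical helpers introduced for illustration.

```python
import os
from typing import List, Mapping, Optional


def visible_gpu_ids(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the device IDs listed in CUDA_VISIBLE_DEVICES (empty list if none)."""
    env = os.environ if env is None else env
    raw = env.get("CUDA_VISIBLE_DEVICES", "")
    # IDs are kept as strings because they are not guaranteed to be plain integers.
    return [part.strip() for part in raw.split(",") if part.strip()]


def submit_use_gpu() -> List[str]:
    """Hypothetical driver: run one GPU task and return the device IDs it saw."""
    import ray  # imported lazily so visible_gpu_ids() works without Ray installed

    # Assumption: the environment is already configured to reach the Ray cluster.
    ray.init(ignore_reinit_error=True)

    @ray.remote(num_gpus=1)
    def use_gpu() -> str:
        # Runs on a worker that Ray selected because it has a free GPU.
        return os.environ.get("CUDA_VISIBLE_DEVICES", "")

    # .remote() submits the task; ray.get() blocks until the result is available.
    raw = ray.get(use_gpu.remote())
    return visible_gpu_ids({"CUDA_VISIBLE_DEVICES": raw})
```

Calling `submit_use_gpu()` from a notebook or script would then return the device IDs that Ray exposed to the task. Parsing happens on the driver side so that the remote function depends only on the standard library.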

Ray example using GPUs:

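    import ray

    @ray.remote(num_gpus=1)
    def use_gpu():
        import tensorflow as tf

        # Create a TensorFlow session. TensorFlow will restrict itself to use the
        # GPUs specified by the CUDA_VISIBLE_DEVICES environment variable.
        # tf.compat.v1.Session() is the TensorFlow 2.x spelling; on TensorFlow 1.x,
        # tf.Session() is the equivalent call.
        tf.compat.v1.Session()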

To learn more, see Using Ray with GPUs.
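Because the worker-workergroup pod is not autoscaled, a task declared with num_gpus=1 stays pending if the cluster does not advertise a free GPU. A small sketch of a pre-submission check follows. The helper name `can_schedule` is hypothetical, and it expects a resource dictionary of the kind returned by `ray.cluster_resources()` or `ray.available_resources()`, where GPUs appear under the "GPU" key.

```python
from typing import Mapping


def can_schedule(resources: Mapping[str, float], num_gpus: float = 1) -> bool:
    """Return True if the resource dictionary reports at least `num_gpus` GPUs."""
    # A cluster without GPUs has no "GPU" key at all, so default to 0.
    return resources.get("GPU", 0) >= num_gpus


# Example with hand-written dictionaries shaped like Ray's resource reports.
print(can_schedule({"CPU": 8.0, "GPU": 1.0}))     # True
print(can_schedule({"CPU": 8.0}))                 # False
print(can_schedule({"CPU": 8.0, "GPU": 1.0}, 2))  # False
```

With a live cluster, the dictionary would come from `ray.available_resources()` after `ray.init()`, which reflects what is free at that moment rather than the cluster total.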

Disabling GPU Support for Ray

To disable GPU support for Ray after HPE Ezmeral Unified Analytics Software installation, follow these steps:

- Click the Tools & Frameworks icon on the left navigation bar. Navigate to the Ray tile under the Data Science tab.

- Click the three dots menu on the Ray tile and click Configure.

- Set the value of gpu.enabled to false.

- Click Configure.