Requirements for HPE Ezmeral Data Fabric on Kubernetes — Recommended Configuration

Describes the minimum system requirements for using HPE Ezmeral Data Fabric on Kubernetes in HPE Ezmeral Runtime Enterprise.

You can configure HPE Ezmeral Data Fabric on Kubernetes with any of the following configurations:

- Recommended Configuration: Explained in this topic.

- Footprint-Optimized Configuration. See Requirements for HPE Ezmeral Data Fabric on Kubernetes — Footprint-Optimized Configurations

Recommended Configuration

| Configuration | Recommended Minimum CPU Cores | Recommended Minimum RAM |
|---|---|---|
| 3 Masters + 5 Workers. NOTE: Five Worker nodes are the minimum requirement. HPE recommends using six or more Worker nodes because this increases the High Availability (HA) of the cluster. | | |
| Masters (Requirements are the same as the general requirements for Master Hosts) | 4 per node (12 cores total) | 32GB per node (96GB total) |
| Workers | 32 per node (160 cores total) | 64GB per node (320GB total) |
| 1 or more Compute nodes (required if running compute jobs on the cluster) | 32 per node (32 cores total) | 64GB per node (64GB total) |
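The totals in the table follow directly from the per-node figures and the minimum node counts. The following Python sketch reproduces that arithmetic so it can be rerun for a different node count. The `NodeSpec` class and `totals` function are hypothetical names used only for illustration and are not part of any HPE tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    role: str
    count: int
    cores_per_node: int
    ram_gb_per_node: int


# Per-node figures and minimum counts copied from the table above.
RECOMMENDED = [
    NodeSpec("master", 3, 4, 32),
    NodeSpec("worker", 5, 32, 64),
    NodeSpec("compute", 1, 32, 64),  # only needed when running compute jobs
]


def totals(specs):
    """Return (total_cores, total_ram_gb) summed over all node groups."""
    cores = sum(s.count * s.cores_per_node for s in specs)
    ram_gb = sum(s.count * s.ram_gb_per_node for s in specs)
    return cores, ram_gb


if __name__ == "__main__":
    for spec in RECOMMENDED:
        cores, ram_gb = totals([spec])
        print(f"{spec.role}: {cores} cores, {ram_gb}GB RAM")
    cores, ram_gb = totals(RECOMMENDED)
    print(f"cluster: {cores} cores, {ram_gb}GB RAM")
```

Changing the Worker count from 5 to 6, as HPE recommends for better HA, raises the Worker totals to 192 cores and 384GB.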

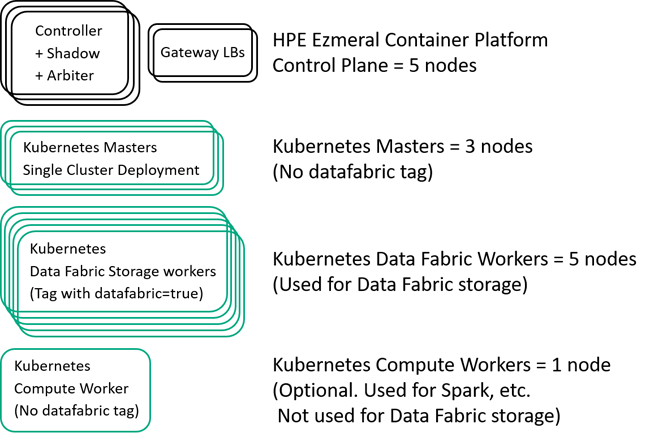

The minimum deployment that includes HPE Ezmeral Data Fabric on Kubernetes is a single Kubernetes cluster.

The following diagram shows the hosts in a minimum production deployment of HPE Ezmeral Runtime Enterprise with HPE Ezmeral Data Fabric on Kubernetes.

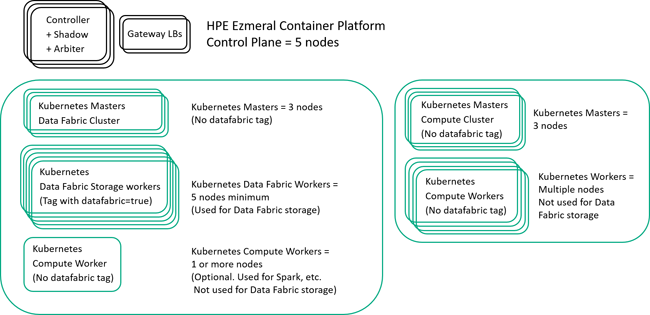

In contrast, if the HPE Ezmeral Runtime Enterprise deployment has separate clusters for HPE Ezmeral Data Fabric on Kubernetes and for compute, Kubernetes Masters and Workers are required for each Kubernetes cluster. The following diagram shows an example of a multiple cluster deployment.

In production deployments of HPE Ezmeral Runtime Enterprise, HPE Ezmeral Data Fabric on Kubernetes has the following minimum requirements:

- Master Nodes:

  The number of Master nodes depends on the number of Kubernetes clusters in this deployment of HPE Ezmeral Runtime Enterprise. Each Kubernetes cluster requires a minimum of three (3) Kubernetes Master nodes for HA.

  For example, if this is a single-cluster Kubernetes deployment of HPE Ezmeral Runtime Enterprise, a minimum total of three (3) Kubernetes Master nodes are required.

  You must select an odd number of Master nodes in order to have a quorum (for example, 3, 5, or 7). Hewlett Packard Enterprise recommends selecting three or more Master nodes to provide High Availability protection for the Data Fabric cluster.

  Master nodes orchestrate the Kubernetes cluster and are not used for data storage. Master nodes cannot use the `Datafabric` tag.

- Worker Nodes: The minimum is five Worker nodes, each with the `Datafabric` tag set to `true` or `yes`. These Worker nodes are used for Data Fabric storage.

  Worker nodes that will be used for data storage must have the `Datafabric` tag set to `yes` (`Datafabric=yes`). The `Datafabric` tag may also be set to `YES`, `true`, or `TRUE`.

  NOTE: You must have at least five (5) Data Fabric Worker nodes for storage (tagged with `Datafabric=true` or `yes`) in a Data Fabric cluster to ensure High Availability protection. You may reduce resource requirements by turning off monitoring services, if they are not needed. See User-Configurable Configuration Parameters.

  If you want to install application add-ons, such as Spark, in the same cluster, you need at least one (1) additional Worker node that does not have the `Datafabric` tag. This Worker node is called a Compute node within a Data Fabric cluster.

  For example, if you want to use an application such as Spark, Airflow, or Kubeflow in the same cluster as the `Datafabric` nodes, you will need at least one Compute node, for a total of six (6) Worker nodes at minimum. Tenants using the node only require enough compute resources to run Spark service containers.
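The node-count rules above can be collected into a small planning check. The following Python sketch is illustrative only: `validate_cluster_plan` and its parameters are hypothetical names, and the function inspects a plain list of tag values rather than querying a real cluster.

```python
# Tag values that the text above lists as accepted for storage nodes.
ACCEPTED_TAG_VALUES = {"yes", "YES", "true", "TRUE"}


def validate_cluster_plan(masters, worker_tags, needs_compute):
    """Return a list of problems; an empty list means the minimums are met.

    masters: number of Kubernetes Master nodes planned for the cluster.
    worker_tags: one entry per Worker node holding its Datafabric tag value,
        or None if the node does not carry the tag.
    needs_compute: True if add-ons such as Spark will run in this cluster.
    """
    problems = []
    if masters < 3:
        problems.append("need at least 3 Master nodes")
    if masters % 2 == 0:
        problems.append("Master node count must be odd for quorum")

    # Assumption: values outside the listed set are ignored here, because
    # the text does not say how such values are treated.
    storage = sum(1 for tag in worker_tags if tag in ACCEPTED_TAG_VALUES)
    compute = sum(1 for tag in worker_tags if tag is None)

    if storage < 5:
        problems.append("need at least 5 Worker nodes tagged for Data Fabric")
    if needs_compute and compute < 1:
        problems.append("need at least 1 Worker node without the Datafabric tag")
    return problems


if __name__ == "__main__":
    # Three Masters, five storage Workers, one Compute node: six Workers total.
    print(validate_cluster_plan(3, ["yes"] * 5 + [None], needs_compute=True))
```

Returning a list of problems instead of a single boolean lets a planner see every unmet minimum at once.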

Ephemeral Storage Requirements

Each host must have a minimum of 500GB of ephemeral storage available to the OS.
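As a rough way to check this on a host, the following Python sketch compares free space against the 500GB minimum by using the standard library's `shutil.disk_usage`. It makes two assumptions the text does not confirm: that GB means decimal gigabytes (10^9 bytes), and that the filesystem at the given path is the one that holds ephemeral storage. The function names are hypothetical.

```python
import shutil

# Assumption: decimal gigabytes; the requirement does not say GB versus GiB.
MIN_EPHEMERAL_BYTES = 500 * 10**9


def meets_ephemeral_minimum(free_bytes, minimum=MIN_EPHEMERAL_BYTES):
    """Return True if the free byte count satisfies the minimum."""
    return free_bytes >= minimum


def check_path(path="/"):
    """Check free space on the filesystem that contains `path`.

    The default path is a placeholder; use the mount point that actually
    backs ephemeral storage on the host.
    """
    usage = shutil.disk_usage(path)  # named tuple: total, used, free
    return meets_ephemeral_minimum(usage.free)


if __name__ == "__main__":
    print("ephemeral storage minimum met:", check_path("/"))
```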

Persistent Storage Requirements

For persistent storage, the minimum requirement is one disk (hard disk, SSD, or NVMe drive) per Data Fabric node. However, for production environments, HPE recommends having three or more disks on each Data Fabric node, to allow at least one full storage pool per node.

If a Data Fabric node has a single disk and that disk fails, data recovery may be slower and may affect performance, because the replica copies are stored on other nodes. For this reason, HPE recommends multiple disks in a Data Fabric node, which allows a full storage pool in a node.

NVMe support on EC2 instances depends on the release of HPE Ezmeral Runtime Enterprise (see On-Premises, Hybrid, and Multi-Cloud Deployments).

Other Requirements

- Container Runtime: Docker.

- Kubernetes: See the Kubernetes Version Requirements.

- SELinux Support: Nodes that are part of HPE Ezmeral Data Fabric on Kubernetes require SELinux to be run in "permissive" (or "disabled") mode. If you need to run SELinux in "enforcing" mode, contact your Hewlett Packard Enterprise support representative.

- CSI Driver: For usage considerations, see Using the CSI. To deploy the CSI driver on a Kubernetes cluster, the Kubernetes cluster must allow privileged pods. Shared Docker mounts must be allowed for mount propagation. See the Kubernetes CSI documentation and OS Configurations for Shared Mounts (links open external websites in a new browser tab/window).
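The SELinux requirement in the list above can be verified on a node before installation. The following Python sketch reads the mode reported by the standard `getenforce` command and checks it against the two modes the text allows. The function names are hypothetical, and the sketch assumes `getenforce` is on the `PATH`; it returns `None` when the command is unavailable.

```python
import subprocess

# Modes that the SELinux requirement above permits.
ALLOWED_MODES = {"permissive", "disabled"}


def selinux_mode_allowed(mode):
    """Return True if a getenforce-style mode string is permitted."""
    return mode.strip().lower() in ALLOWED_MODES


def current_selinux_mode():
    """Return the output of getenforce, or None if it cannot be run."""
    try:
        result = subprocess.run(
            ["getenforce"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return result.stdout.strip()


if __name__ == "__main__":
    mode = current_selinux_mode()
    if mode is None:
        print("getenforce not available; SELinux mode unknown")
    else:
        print(f"SELinux mode {mode}: allowed={selinux_mode_allowed(mode)}")
```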