NFS Support

HPE Ezmeral Runtime Enterprise supports the NFSv3 service in MFS pods.

Enabling or Disabling NFSv3

To enable NFSv3, add the nfs: true entry to the dataplatform YAML file. The full.yaml and simple.yaml example files include this entry by default. For example:

    gateways:
      nfs: true
      mast: true
      objectstore:
        image: objectstore-2.0.0:202101050329C
        zones:
        - name: zone1
          count: 1
          sshport: 5010
          size: 5Gi
          fspath: ""
          hostports:
          - hostport: 31900
          requestcpu: "1000m"
          limitcpu: "1000m"
          requestmemory: 2Gi
          limitmemory: 2Gi
          requestdisk: 20Gi
          limitdisk: 30Gi
          loglevel: INFO

NFSv3 support is disabled if either:

- You specify nfs: false.
- The nfs: <true | false> entry is not present.
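The enable/disable rule above can be stated as a small check. This is a minimal Python sketch that assumes the dataplatform YAML has already been loaded into a dictionary (for example with a YAML library, not shown). It also assumes the nfs flag sits under gateways, as in the example above. The function name nfs_enabled is hypothetical.

```python
from typing import Any, Mapping


def nfs_enabled(spec: Mapping[str, Any]) -> bool:
    """Return True only when the gateways section contains nfs: true.

    Assumes `spec` is the dataplatform YAML already parsed into a dict and
    that the nfs flag lives under `gateways` (an assumption for this sketch).
    """
    # A bare "gateways:" key parses to None, so fall back to an empty dict.
    gateways = spec.get("gateways") or {}
    # Both an explicit "nfs: false" and a missing nfs entry disable NFSv3.
    return gateways.get("nfs") is True


if __name__ == "__main__":
    print(nfs_enabled({"gateways": {"nfs": True, "mast": True}}))  # True
    print(nfs_enabled({"gateways": {"nfs": False}}))               # False
    print(nfs_enabled({"gateways": {"mast": True}}))               # False
```

The strict identity check treats anything other than a boolean true as "disabled", which mirrors the two disabling conditions listed above.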

Considerations

When working with NFSv3 in HPE Ezmeral Runtime Enterprise:

- The default share is /mapr. To change the default share, see Customizing NFS, below.
- If the MFS pod hosting the NFSv3 service stops and then restarts on a different node, existing NFSv3 mounts stop working.
- The default NFS server port is 2049. Do not change the default port.
- You must mount the NFS share manually; auto-mount is not supported.
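Mounts break when the MFS pod moves to another node, and the server listens on the fixed port 2049. A quick reachability check from a client can therefore help confirm which node is currently serving NFS. This Python sketch uses only the standard socket module; the function name nfs_port_reachable is hypothetical. A successful TCP connection shows only that something accepts connections on the port. It does not exercise the NFS protocol or guarantee that an NFSv3 mount will succeed.

```python
import socket


def nfs_port_reachable(host: str, port: int = 2049, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`.

    Port 2049 is the default NFS server port described above.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout is an OSError
        # subclass), and DNS resolution failures (socket.gaierror).
        return False
```

For example, nfs_port_reachable("m2-sm2028-15-n4.mip.storage.hpecorp.net") returns True only if that node accepts TCP connections on port 2049.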

Known Issues

The following issues are known to exist with maprcli commands for NFS:

- maprcli setloglevel nfs -loglevel, maprcli trace info, and tracelevel: DEBUG do not work or return an exception. The default trace levels are NFSD: INFO, NFSDProfile: INFO, NFSExport: ERROR, and NFSHandle: ERROR.
- maprcli nfsmgmt gets no response from the NFS server.
- maprcli node services -nfs start|stop|restart|enable|disable has no effect.
- maprcli alarm list shows an alarm that indicates the wrong NFS version.

Customizing NFS

You can customize NFS behavior by modifying the exports and nfsserver.conf sections of the config map (template-mfs-cm.yaml), which is located at bootstrap/customize/templates-dataplatform/template-mfs-cm.yaml. Any configuration changes must be made before bootstrapping. For example, to change the share name, change the /mapr (rw) entry in the exports: section of the config map:

    exports: |
      # Sample Exports file
      # for /mapr exports
      # <Path> <exports_control>
      #access_control -> order is specific to default
      # list the hosts before specifying a default for all
      # a.b.c.d,1.2.3.4(ro) d.e.f.g(ro) (rw)
      # enforces ro for a.b.c.d & 1.2.3.4 and everybody else is rw
      # special path to export clusters in mapr-clusters.conf. To disable exporting,
      # comment it out. to restrict access use the exports_control
      #
      /mapr (rw)
      #to export only certain clusters, comment out the /mapr & uncomment.
      #/mapr/clustername (rw)
      .
      .
      .
    nfsserver.conf: |
      # Configuration for nfsserver
      #
      # The system defaults are in the comments
      #
      # Default compression is true
      #Compression = true
      # chunksize is 64M
      #ChunkSize = 67108864
      # Number of threads for compression/decompression: default=2
      #CompThreads = 2
      #Mount point for the ramfs file for mmap
      #RamfsMntDir = /ramfs/mapr
      .
      .
      .

For information about managing the NFS service, see Managing the HPE Ezmeral Data Fabric NFS Service.
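The comments in the sample exports file describe how access controls on an export line are read. Hosts listed with an option in parentheses get that option, and a bare option such as (rw) sets the default for everyone else. The following Python sketch is a hypothetical illustration of that reading only, not the Data Fabric NFS server's own parser. It matches hosts by exact string, ignores subnets, wildcards, and any other options, and does not enforce that hosts are listed before the default. The names parse_exports and ExportEntry are invented for this example.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# One access-control token: optional comma-separated hosts, then "(option)".
_TOKEN = re.compile(r"^([^()]*)\(([^()]*)\)$")


@dataclass
class ExportEntry:
    path: str
    per_host: Dict[str, str] = field(default_factory=dict)
    default: Optional[str] = None

    def access_for(self, host: str) -> Optional[str]:
        """Return the option for `host`, falling back to the default."""
        return self.per_host.get(host, self.default)


def parse_exports(text: str) -> List[ExportEntry]:
    """Parse '<Path> <exports_control>' lines, skipping blanks and comments."""
    entries: List[ExportEntry] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        path, *controls = line.split()
        entry = ExportEntry(path)
        for token in controls:
            match = _TOKEN.match(token)
            if match is None:
                raise ValueError(f"unrecognized access control: {token!r}")
            hosts, option = match.group(1), match.group(2)
            if hosts:
                for host in hosts.split(","):
                    # If a host is listed twice, its first listing wins.
                    entry.per_host.setdefault(host, option)
            else:
                # A bare "(option)" applies to every host not listed.
                entry.default = option
        entries.append(entry)
    return entries
```

For example, with the line /mapr 192.0.2.5,192.0.2.6(ro) (rw) (documentation-range addresses), access_for("192.0.2.5") returns "ro" and access_for("192.0.2.9") returns "rw".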

Configuring Client Access

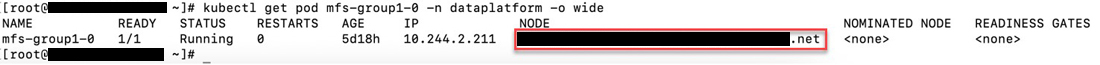

To configure clients to connect to NFS on the Kubernetes cluster network, locate the

node on which the MFS pod is running, such as the mfs-group1-0

pod shown below. The network location where NFS is running is the NODE value for the MFS pod:
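If you capture the text output of kubectl get pods -n <namespace> -o wide, you can extract the NODE value programmatically. The data-fabric namespace is not given here, so <namespace> is a placeholder. Alternatively, kubectl get pod mfs-group1-0 -n <namespace> -o jsonpath='{.spec.nodeName}' prints the node name directly. This Python sketch assumes kubectl's default column-aligned table output; the function name node_for_pod is hypothetical. It reads the value at the offset of the NODE header rather than splitting on whitespace. Splitting would miscount columns when a field contains spaces, such as a RESTARTS value like "1 (2d ago)".

```python
import re
from typing import Optional


def node_for_pod(wide_output: str, pod_name: str) -> Optional[str]:
    """Return the NODE column for `pod_name` from 'kubectl get pods -o wide' text."""
    lines = [line for line in wide_output.splitlines() if line.strip()]
    if not lines:
        return None
    header = lines[0]
    # kubectl aligns columns, so each NODE value starts at the same offset as
    # the NODE header. The first whole-word match precedes "NOMINATED NODE".
    match = re.search(r"\bNODE\b", header)
    if match is None:
        return None
    start = match.start()
    for row in lines[1:]:
        fields = row.split()
        if fields and fields[0] == pod_name:
            rest = row[start:].split()
            return rest[0] if rest else None
    return None
```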

In this example, the command to mount your share on the data-fabric cluster in Kubernetes is:

user-vbox2:~$ m2-sm2028-15-n4.mip.storage.hpecorp.net:/mapr
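The example above gives the export as NODE:/mapr. The following Python sketch shows one way to assemble a complete manual NFSv3 mount invocation for a Linux client, under stated assumptions. vers=3 and port=2049 are standard Linux nfs mount options, chosen here to match the NFSv3 service and the default server port described under Considerations. The mount point /mnt/mapr and the function name nfsv3_mount_command are hypothetical.

```python
import shlex
from typing import List


def nfsv3_mount_command(node: str, share: str = "/mapr",
                        mount_point: str = "/mnt/mapr") -> List[str]:
    """Build an argv list for manually mounting the NFSv3 export.

    Assumptions: vers=3 pins the protocol to NFSv3, port=2049 is the default
    NFS server port, and /mnt/mapr is a hypothetical, pre-created mount point.
    """
    return [
        "mount", "-t", "nfs",
        "-o", "vers=3,port=2049",
        f"{node}:{share}",  # NODE value of the MFS pod, then the share
        mount_point,
    ]


if __name__ == "__main__":
    cmd = nfsv3_mount_command("m2-sm2028-15-n4.mip.storage.hpecorp.net")
    # Print a shell-safe command line; run it with root privileges on the client.
    print(shlex.join(cmd))
```

Returning an argument list rather than a single string lets the caller pass it straight to subprocess.run without shell quoting issues. Remember to rerun it against the new node if the MFS pod moves.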