Manually Creating a New HPE Ezmeral Data Fabric Tenant

Tenants connect to either an internal Data Fabric cluster or an external storage cluster.

- Creating a tenant that connects to an internal Data Fabric cluster begins with submitting a tenant CR in the same Kubernetes environment as the Data Fabric cluster.

- Creating a tenant that connects to an external storage cluster begins with deploying the external storage cluster information and the user, server, and client secrets, and then submitting a tenant CR. During the bootstrapping phase, the installer deploys the Tenant operator, which uses the tenant CRs you submit to build tenant namespaces in the Kubernetes environment. In this scenario, the external storage cluster must be reachable from the pods running in the Kubernetes cluster where you plan to create the tenant. To verify connectivity, open a shell in a running pod on the Kubernetes cluster and ping nodes on the storage cluster.
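Ping uses ICMP, which some networks filter even when the storage services themselves are reachable. As a complementary check, the following minimal Python sketch tests whether a TCP connection to a storage-cluster host and port succeeds. It assumes Python 3 is available in the pod you open a shell in, which is not guaranteed for every container image; the function name is hypothetical.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the name and tries each returned address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts, and DNS failures are all OSError subclasses.
        return False
```

For example, `tcp_reachable("cldb1.example.com", 7222)` checks a hypothetical CLDB host; 7222 is commonly the CLDB port, but confirm the host names and ports your storage services actually use.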

Tenant CR Parameters

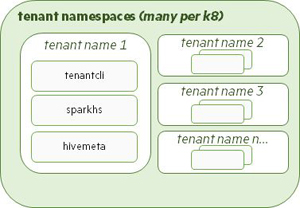

The Tenant operator contains the tenant Custom Resource Definition (CRD), which validates the Tenant Custom Resource (CR) file that the Controller uses to create the tenant pods. The Tenant operator can deploy one or more instances of a tenant namespace in the Kubernetes environment to run compute applications, such as Spark, as shown in the following illustration:

A custom Tenant CR that specifies cluster connection settings and tenant resources should be created for each HPE Ezmeral Data Fabric tenant. See Defining the Tenant Using the CR.

Sample tenant CR files are provided in the examples/picasso141/tenant directory. These sample files are named hctenant-*.yaml. Sample files for connecting to an internal Data Fabric cluster have internal in the filename, while sample files for connecting to an external cluster have external in the filename.

Before deploying a tenant CR for an external storage cluster, you must first deploy the external cluster information and secrets that the tenant will use to connect. You can either:

- Run the gen-external-secrets.sh utility in the tools directory to gather this host information and generate the required secrets.

- Manually create the required information. The following sample templates in the examples/picasso141/secrets directory can help you collect this information:

  - Secure external storage cluster: mapr-user-secret-secure-customer.yaml

  - Unsecure external storage cluster: mapr-user-secret-unsecure-customer.yaml


You need not generate this information for an internal Data Fabric cluster because the system automatically obtains this information from the cluster namespace.
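The sample-file naming convention described earlier in this section (hctenant-*.yaml, with internal or external in the name) is enough to pick a starting point programmatically. The following is a minimal Python sketch that relies only on that convention; the function name is hypothetical, and the exact sample file names shipped in the directory are not listed here.

```python
from pathlib import Path
from typing import List


def find_sample_tenant_crs(sample_dir: str, clustertype: str) -> List[str]:
    """Return the hctenant-*.yaml sample names whose file name contains `clustertype`."""
    if clustertype not in ("internal", "external"):
        raise ValueError("clustertype must be 'internal' or 'external'")
    # Samples follow the hctenant-*.yaml naming convention; the cluster type
    # ("internal" or "external") appears somewhere in the file name.
    return sorted(
        path.name
        for path in Path(sample_dir).glob("hctenant-*.yaml")
        if clustertype in path.name
    )
```

For example, `find_sample_tenant_crs("examples/picasso141/tenant", "external")` lists the samples for an external storage cluster.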

Defining the Tenant Using the CR

The Tenant CR should contain values for the following properties:

- clustername - string - Name of either the internal Data Fabric cluster or the external storage cluster to associate with this tenant.

- clustertype - string - Either internal (if the Data Fabric cluster is in the same environment as the tenant) or external (if the storage cluster is outside the tenant Kubernetes environment).

- baseimagetag - string - Tag to use when pulling all the images.

- imageregistry - string - Image registry location.

- imagepullsecret - string - Name of the secret that contains the login information for the image repository.

- loglocation - string - Optional node location for storing tenant pod logs. This can be any writable location, subject to node OS restrictions. Default is /var/log/mapr/<tenant>/.

- corelocation - string - Optional node location for storing tenant pod core files. This can be any writable location on the node. Default is /var/log/mapr/<tenant>/cores/.

- podinfolocation - string - Optional top-level directory for storing persistent pod information, separated by cluster. This can be any writable location on the node, subject to node OS restrictions. Default is /var/log/mapr/<tenant>/podinfo/.

- security - object - See Security Object Settings.

- debugging - object - See Debug Settings.

- tenantservice - object - See Tenant Services Object Settings.

- tenantcustomizationfiles - object - See Tenant Customization File Object Settings.

- userlist - array - List of user IDs to add to the tenant Role-Based Access Control (RBAC).

- grouplist - array - List of group IDs to add to the tenant RBAC.
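As a minimal Python sketch of how the top-level properties above fit together, the following function assembles them into a dictionary and fills in the documented default node paths. The function name and the `tenant` argument (used only to expand `<tenant>` in the default paths) are hypothetical. The CR's apiVersion, kind, and metadata are not described in this section, so they are omitted, as are the security, debugging, tenantservice, and tenantcustomizationfiles objects.

```python
from typing import Any, Dict, List, Optional


def build_tenant_spec(
    tenant: str,
    clustername: str,
    clustertype: str,
    baseimagetag: str,
    imageregistry: str,
    imagepullsecret: str,
    userlist: Optional[List[str]] = None,
    grouplist: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Assemble the top-level tenant CR properties (hypothetical helper)."""
    if clustertype not in ("internal", "external"):
        raise ValueError("clustertype must be 'internal' or 'external'")
    base = f"/var/log/mapr/{tenant}"
    return {
        "clustername": clustername,
        "clustertype": clustertype,
        "baseimagetag": baseimagetag,
        "imageregistry": imageregistry,
        "imagepullsecret": imagepullsecret,
        # Optional node paths, set here to their documented defaults.
        "loglocation": f"{base}/",
        "corelocation": f"{base}/cores/",
        "podinfolocation": f"{base}/podinfo/",
        # User and group IDs added to the tenant RBAC.
        "userlist": list(userlist or []),
        "grouplist": list(grouplist or []),
    }
```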

Security Object Settings

These settings specify tenant security parameters.

- Tenants configured to use an internal Data Fabric cluster inherit security settings from the cluster.

- For tenants connecting to an external storage cluster, the storage cluster host information and user, server, and client secrets must be set up and deployed before deploying the tenant CR. See External Storage Cluster Secret Settings.

The externalClusterInfo object in the tenant CR must contain values for the following properties if the HPE Ezmeral Data Fabric storage cluster is not in the same environment as the tenant:

- dnsdomain - string - Kubernetes cluster DNS domain suffix to use. Default is cluster.local.

- environmenttype - string - Kubernetes environment on which to deploy the tenant. Value must be vanilla.

- externalusersecret - string - Name of the secret containing the system user information for starting the pods. This secret is pulled from the hpe-externalclusterinfo namespace and can be generated by gen-external-secrets.sh in the tools directory. Default is mapr-user-secret.

- externalconfigmap - string - Name of the configmap containing the locations of the external storage cluster hosts for communicating with the storage cluster. This information is pulled from the hpe-externalclusterinfo namespace. Default is mapr-external-cm.

- externalhivesiteconfigmap - string - Name of the configmap containing the properties from the external hive-site.xml file. If the storage cluster is not in the same environment as the tenant, this configmap can be generated by gen-external-secrets.sh in the tools directory. It is available in the hpe-externalclusterinfo namespace. Default is mapr-hivesite.cm.

- externalserversecret - string - Name of the secret containing the external server secret information for communicating with the external storage cluster. This secret can be generated by gen-external-secrets.sh in the tools directory and is pulled from the hpe-externalclusterinfo namespace. Default is mapr-server-secrets.

- externalclientsecret - string - Name of the secret containing the client secret information for communicating with the external storage cluster. This secret can be generated by gen-external-secrets.sh in the tools directory and is pulled from the hpe-externalclusterinfo namespace. Default is mapr-client-secrets.

- sshSecret - string - Name of the secret containing the container SSH keys. Default is mapr-ssh-secret.
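Most externalClusterInfo properties have documented defaults, so a tenant CR typically overrides only the names that differ for a given storage cluster. The following minimal Python sketch merges overrides into those defaults and rejects misspelled keys; the dictionary and function names are hypothetical. Because environmenttype has no documented default but only one allowed value, the sketch pre-fills it with vanilla.

```python
from typing import Dict

# Documented defaults for the externalClusterInfo properties.
# environmenttype has no stated default; vanilla is its only allowed value.
EXTERNAL_CLUSTER_INFO_DEFAULTS: Dict[str, str] = {
    "dnsdomain": "cluster.local",
    "environmenttype": "vanilla",
    "externalusersecret": "mapr-user-secret",
    "externalconfigmap": "mapr-external-cm",
    "externalhivesiteconfigmap": "mapr-hivesite.cm",
    "externalserversecret": "mapr-server-secrets",
    "externalclientsecret": "mapr-client-secrets",
    "sshSecret": "mapr-ssh-secret",
}


def external_cluster_info(**overrides: str) -> Dict[str, str]:
    """Merge overrides into the defaults, rejecting unknown keys and non-vanilla environments."""
    unknown = sorted(set(overrides) - set(EXTERNAL_CLUSTER_INFO_DEFAULTS))
    if unknown:
        raise KeyError(f"unknown externalClusterInfo properties: {unknown}")
    info = {**EXTERNAL_CLUSTER_INFO_DEFAULTS, **overrides}
    if info["environmenttype"] != "vanilla":
        raise ValueError("environmenttype must be 'vanilla'")
    return info
```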

Debug Settings

The debugging object of the Tenant CR must contain values for the following properties:

- loglevel - string - See Bootstrap Log Levels.

- preservefailedpods - boolean - Whether (true) or not (false; default) to prevent pods from restarting after a failure. Setting the value to true makes pods easier to debug, but the cluster loses the native Kubernetes resilience that comes from pods restarting themselves when problems occur.

- wipelogs - boolean - Whether (true) or not (false; default) to remove log information at the start of a container run.
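A minimal Python sketch of the debugging object, using the documented defaults for both flags. The function name is hypothetical. The valid loglevel values are defined in Bootstrap Log Levels and are not reproduced here, so the sketch passes loglevel through unchecked; it does reject non-boolean flags such as the string "false", which is truthy in Python.

```python
import warnings
from typing import Dict, Union


def build_debugging(loglevel: str, preservefailedpods: bool = False,
                    wipelogs: bool = False) -> Dict[str, Union[str, bool]]:
    """Return a debugging object using the documented defaults (false for both flags)."""
    for name, value in (("preservefailedpods", preservefailedpods), ("wipelogs", wipelogs)):
        # Reject strings such as "false", which are easy to pass by mistake.
        if not isinstance(value, bool):
            raise TypeError(f"{name} must be a bool, got {type(value).__name__}")
    if preservefailedpods:
        # Mirrors the trade-off described above: easier debugging, no automatic restarts.
        warnings.warn("preservefailedpods=true disables automatic pod restarts after failures")
    return {"loglevel": loglevel, "preservefailedpods": preservefailedpods, "wipelogs": wipelogs}
```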

Tenant Services Object Settings

The tenantservices object of the Tenant CR specifies the following settings:

- tenantcli - Administration client launched in the tenant namespace.

- hivemetastore - Hive Metastore launched in the tenant namespace. It can be used in place of a Hive Metastore launched as a cluster-wide service; access to it is limited to users and compute engines in this tenant.

- spark-hs - Spark HistoryServer launched in the tenant namespace.

Each of these objects must contain values for the following properties:

- image - string - Image name and tag: tenantcli-6.1.0:<TIMESTAMP>, hivemeta-2.3:<TIMESTAMP>, or spark-hs-2.4.4:<TIMESTAMP>, respectively.

- count - integer - Number of pod instances. Default is 1.

- [sizing fields] - strings - See Pod Sizing Fields.

- loglevel - string - See Bootstrap Log Levels.
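A minimal Python sketch of one tenantservices entry, checking that image has a name:tag form and that count is a positive integer (default 1). The function name is hypothetical, and because the sizing field names are defined in Pod Sizing Fields rather than here, the sketch accepts them as arbitrary keyword arguments.

```python
import re
from typing import Any, Dict, Optional

# "name:tag", e.g. "spark-hs-2.4.4:<TIMESTAMP>"; the tag is any non-blank text.
_IMAGE_PATTERN = re.compile(r"^[A-Za-z0-9._-]+:\S+$")


def build_service(image: str, count: int = 1, loglevel: Optional[str] = None,
                  **sizing: str) -> Dict[str, Any]:
    """Return one tenantservices entry (hypothetical helper)."""
    if not _IMAGE_PATTERN.match(image):
        raise ValueError(f"image must look like name:tag, got {image!r}")
    # bool is a subclass of int, so exclude it explicitly.
    if isinstance(count, bool) or not isinstance(count, int) or count < 1:
        raise ValueError("count must be a positive integer")
    entry: Dict[str, Any] = {"image": image, "count": count}
    entry.update(sizing)  # sizing field names come from Pod Sizing Fields
    if loglevel is not None:
        entry["loglevel"] = loglevel
    return entry


# Example: one entry per service, keyed by the names listed above.
tenantservices = {
    "tenantcli": build_service("tenantcli-6.1.0:<TIMESTAMP>"),
    "hivemetastore": build_service("hivemeta-2.3:<TIMESTAMP>"),
    "spark-hs": build_service("spark-hs-2.4.4:<TIMESTAMP>"),
}
```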

Tenant Customization File Object Settings

The following custom configuration files, specified using ConfigMaps in the CR, are deployed in the hpe-templates-compute namespace and used by pods when launching a service:

- hivemetastoreconfig - string - Name of a configmap template containing Hive Metastore config files in hpe-config-compute. Default is hivemetastore-cm.

- sparkhsconfig - string - Name of a configmap template containing Spark HistoryServer config files in hpe-config-compute. Default is sparkhistory-cm.

- sparkmasterconfig - string - Name of a configmap template containing Spark Master config files in hpe-config-compute. Default is sparkhistory-cm.

- sparkuiproxyconfig - string - Name of a configmap template containing Spark UI Proxy config files in hpe-config-compute. Default is sparkhistory-cm.

- sparkworkerconfig - string - Name of a configmap template containing Spark Worker config files in hpe-config-compute. Default is sparkhistory-cm.

Creating and Deploying External Tenant Information

When creating a tenant that connects to an external storage cluster, you must first configure that cluster's host and security information, including:

- External storage cluster CLDB and ZooKeeper host locations to which the tenant must connect.

- HPE Ezmeral Data Fabric user, client, and server secrets that must be created before the Tenant is created.

There are two ways to get and set this information:

- Using a script: See Automatic Method.

- Manually: See Manual Method.

Automatic Method

You can use the gen-external-secrets.sh utility in the tools directory to automatically generate the required secrets for both secure and unsecure storage clusters:

- Determine whether Hive Metastore is installed on the storage cluster. To find the node where Hive Metastore is installed, execute the following command:

  maprcli node list -filter [csvc==hivemeta] -columns name

- Use scp or another method to copy tools/gen-external-secrets.sh to the Hive Metastore node on the storage cluster. If Hive Metastore is not installed, copy the script to any node on the storage cluster.

- Start the tool by executing one of the following commands on the storage cluster as the admin user (typically mapr):

  - Unsecure external storage cluster:

    su - mapr ./gen-external-secrets.sh

  - Secure external storage cluster:

    ./gen-external-secrets.sh

- When prompted, enter a name for the generated secret file. Default is mapr-external-info.yaml. If you are creating tenants that connect to different external storage clusters, these secrets must have different names because they are all deployed in the same hpe-externalclusterinfo namespace. Each tenant CR must point to the correct secret name.

- When prompted, enter the username and password that the HPE Ezmeral Data Fabric services will use for Data Fabric cluster administration. The default user is mapr. To obtain the default password, see Data Fabric Cluster Administrator Username and Password.

- Specify whether the node is a Kubernetes storage node by entering either y (storage cluster is running in a Kubernetes environment) or n (storage cluster is running in a non-Kubernetes environment).

- When prompted, enter the following user secret information:

  - Server ConfigMaps: Cluster host location. Default is mapr-external.cm.

  - User secret: Secret generated for MapR system user credentials. Default is mapr-user-secrets.

  - Server secret (secure clusters only): Secret generated for the MapR maprserverticket in /opt/mapr/conf. Default is mapr-server-secrets.

  - Client secret (secure clusters only): Secret generated for the ssl_truststore in /opt/mapr/conf. Default is mapr-client-secrets.

  - Hivesite configmap: Information from the hive-site.xml file. Default is mapr-hivesite.cm. You may need to change the settings in the generated file.


- Copy the generated file to a machine that has kubectl installed and can communicate with the Kubernetes cluster hosting the external tenant.

- Deploy the secret in the hpe-externalclusterinfo namespace by executing the following command:

  kubectl apply -f <mapr-external-secrets.yaml>
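Because the secrets and configmaps for every external storage cluster are deployed in the same hpe-externalclusterinfo namespace, a name reused across clusters would collide. A minimal Python sketch of a pre-deployment check, assuming you have listed by hand the names you chose for each cluster; the function name and the cluster labels in the example are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List


def shared_names(names_by_cluster: Dict[str, Iterable[str]]) -> List[str]:
    """Return secret/configmap names that more than one storage cluster would use."""
    counts: Counter = Counter()
    for names in names_by_cluster.values():
        counts.update(set(names))  # count each name at most once per cluster
    return sorted(name for name, n in counts.items() if n > 1)
```

For example, two clusters that both keep the default mapr-user-secrets name would be reported, signalling that one of them needs a different name (and that its tenant CR must point to it).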

Manual Method

You can either:

- Modify the sample mapr-external-info-secure.yaml file (for a secure storage cluster) or mapr-external-info-unsecure.yaml file (for an unsecure storage cluster) in examples/secrettemplates to set values for the properties described in the following sections.

- Create a custom file.

If you are creating or modifying your own cluster secret file, then the properties described in the following sections must be set in the secret files for the external storage cluster host, user, server, and client secret information:

- External Storage Cluster User Secret Settings

- External Server Secret Settings

- External Client Secret Settings

After creating the files, deploy the secrets in the Kubernetes environment. See Deploying the External Storage Cluster Secrets.

External Storage Cluster User Secret Settings

The cluster secret file must contain valid values for the following external storage cluster user secret properties:

- name - Name of the external storage cluster information.

- namespace - Namespace where the information is deployed.

- MAPR_USER - User that runs the Spark job. This must be Base64 encoded. Default is mapr.

- MAPR_PASSWORD - Password of the user that runs the Spark job. This must be Base64 encoded. To obtain the default password, see Data Fabric Cluster Administrator Username and Password.

- MAPR_GROUP - Group of the user that runs the Spark job. This must be Base64 encoded. Default is mapr.

- MAPR_UID - User ID of the user that runs the Spark job. This must be Base64 encoded. Default is 5000.

- MAPR_GID - Group ID of the user that runs the Spark job. This must be Base64 encoded. Default is 5000.
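A minimal Python sketch that Base64-encodes the user secret properties and wraps them in a Kubernetes Secret. The layout of the sample template files is not shown in this section, so the sketch assumes a standard Opaque Secret (apiVersion v1, kind Secret, values under data); the helper names are hypothetical.

```python
import base64
from typing import Any, Dict


def b64(value: str) -> str:
    """Base64-encode a UTF-8 string, as the secret properties require."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def user_secret_manifest(name: str, namespace: str, password: str,
                         user: str = "mapr", group: str = "mapr",
                         uid: int = 5000, gid: int = 5000) -> Dict[str, Any]:
    """Build an Opaque Kubernetes Secret holding the user secret properties."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            "MAPR_USER": b64(user),
            "MAPR_PASSWORD": b64(password),
            "MAPR_GROUP": b64(group),
            "MAPR_UID": b64(str(uid)),
            "MAPR_GID": b64(str(gid)),
        },
    }
```

kubectl apply accepts JSON as well as YAML, so writing the result with `json.dump(manifest, f, indent=2)` and passing that file to `kubectl apply -f` is one way to deploy it.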

External Server Secret Settings

The cluster secret file must contain valid values for the following external server secret properties:

- maprserverticket - Value of the maprserverticket automatically generated and stored in /opt/mapr/conf on the secure storage cluster. This must be Base64 encoded.

- ssl_keystore.p12 - Value of the ssl_keystore.p12 automatically generated and stored in /opt/mapr/conf on the secure storage cluster. This must be Base64 encoded.

- ssl_keystore.pem - Value of the ssl_keystore.pem automatically generated and stored in /opt/mapr/conf on the secure storage cluster. This must be Base64 encoded.
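The server secret values are the Base64-encoded contents of files in /opt/mapr/conf. A minimal Python sketch, assuming the secret keys match the file names listed above and that it runs on a secure storage-cluster node with read access to those files; the constant and function names are hypothetical.

```python
import base64
from pathlib import Path
from typing import Dict, Iterable

# File names listed for the external server secret.
SERVER_SECRET_FILES = ("maprserverticket", "ssl_keystore.p12", "ssl_keystore.pem")


def encode_conf_files(conf_dir: str, filenames: Iterable[str]) -> Dict[str, str]:
    """Read each file as raw bytes and return {file name: Base64 text}."""
    conf = Path(conf_dir)
    # Read bytes, not text: ssl_keystore.p12 is a binary PKCS#12 file.
    return {
        name: base64.b64encode((conf / name).read_bytes()).decode("ascii")
        for name in filenames
    }
```

For example, `encode_conf_files("/opt/mapr/conf", SERVER_SECRET_FILES)` returns the values to place in the server secret.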

External Client Secret Settings

The cluster secret file must contain valid values for the following external client secret properties:

- ssl_truststore - Value of the ssl_truststore automatically generated for a secure cluster and stored in /opt/mapr/conf on the secure storage cluster. This must be Base64 encoded.

- ssl_truststore.p12 - Value of the ssl_truststore.p12 automatically generated and stored in /opt/mapr/conf on the secure storage cluster. This must be Base64 encoded.

- ssl_truststore.pem - Value of the ssl_truststore.pem automatically generated and stored in /opt/mapr/conf on the secure storage cluster. This must be Base64 encoded.
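Because every value must be Base64 encoded, a round-trip check can catch values pasted without encoding or copied from the wrong file. A minimal Python sketch, assuming the secret's data keys match the file names in /opt/mapr/conf as listed above and that you have already loaded the data mapping from your secret file; the names here are hypothetical.

```python
import base64
from pathlib import Path
from typing import Dict, List

# File names listed for the external client secret.
CLIENT_SECRET_FILES = ("ssl_truststore", "ssl_truststore.p12", "ssl_truststore.pem")


def mismatched_entries(data: Dict[str, str], conf_dir: str) -> List[str]:
    """Return keys whose Base64 value is invalid or differs from the file of the same name."""
    bad = []
    for key, encoded in data.items():
        try:
            decoded = base64.b64decode(encoded, validate=True)
        except ValueError:  # binascii.Error is a ValueError subclass
            bad.append(key)  # not valid Base64
            continue
        path = Path(conf_dir) / key
        if not path.is_file() or path.read_bytes() != decoded:
            bad.append(key)  # missing file or content mismatch
    return sorted(bad)
```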

External Storage Cluster Host Information Settings

You can modify the mapr-external-configmap.yaml file in examples/secrettemplates to set values for the location of the service hosts on the external storage cluster, or create your own custom file. The file must contain values for the following properties:

- clustername - Name of the external storage cluster. This must be Base64 encoded.

- disableSecurity - Whether (true) or not (false; default) security is disabled on the storage cluster.

- cldbLocations - Base64-encoded, comma-separated list of CLDB hosts on the external storage cluster in the following format: hostname|IP[:port_no][,hostname|IP[:port_no]...]

- zkLocations - Base64-encoded, comma-separated list of ZooKeeper hosts on the external storage cluster in the following format: hostname|IP[:port_no][,hostname|IP[:port_no]...]

- esLocations - Base64-encoded, comma-separated list of Elasticsearch hosts on the external storage cluster in the following format: hostname|IP[:port_no][,hostname|IP[:port_no]...]

- tsdbLocations - Base64-encoded, comma-separated list of OpenTSDB hosts on the external storage cluster in the following format: hostname|IP[:port_no][,hostname|IP[:port_no]...]

- hivemetaLocations - Base64-encoded, comma-separated list of Hive Metastore hosts on the external storage cluster in the following format: hostname|IP[:port_no][,hostname|IP[:port_no]...]
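The location properties share one format: comma-separated hostname|IP entries with an optional port, then Base64 encoded. A minimal Python sketch that builds and encodes such a list from (host, port) pairs; the function names are hypothetical, and the host check is a simplification that accepts host names and IPv4 addresses but not IPv6 literals.

```python
import base64
import re
from typing import Iterable, List, Optional, Tuple

# Host names or IPv4 addresses only; IPv6 literals are out of scope for this sketch.
_HOST = re.compile(r"^[A-Za-z0-9.-]+$")


def format_locations(hosts: Iterable[Tuple[str, Optional[int]]]) -> str:
    """Build 'hostname|IP[:port_no][,hostname|IP[:port_no]...]' from (host, port) pairs."""
    entries: List[str] = []
    for host, port in hosts:
        if not _HOST.match(host):
            raise ValueError(f"invalid host: {host!r}")
        if port is None:
            entries.append(host)  # the port is optional in the documented format
        elif 1 <= port <= 65535:
            entries.append(f"{host}:{port}")
        else:
            raise ValueError(f"invalid port for {host}: {port}")
    if not entries:
        raise ValueError("at least one host is required")
    return ",".join(entries)


def encode_locations(hosts: Iterable[Tuple[str, Optional[int]]]) -> str:
    """Base64-encode the comma-separated list, as the location properties require."""
    return base64.b64encode(format_locations(hosts).encode("ascii")).decode("ascii")
```

For example, `encode_locations([("cldb1.example.com", 7222), ("10.0.0.5", None)])` produces a value for cldbLocations from two hypothetical hosts.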

Deploying the External Storage Cluster Secrets

After creating the files, deploy the secrets and configmaps by executing the following command:

kubectl apply -f <mapr-external-cluster-info-file.yaml>

Deploying the Data Fabric Tenant

You must have either of the following before deploying a tenant:

- Running internal Data Fabric cluster. Wait until the cluster is fully started so that cluster settings can be configured on the tenant.

- Running external storage cluster. You must have already created information about that cluster in the hpe-externalclusterinfo namespace.

To create the Tenant namespace in the Kubernetes environment:

- Either create a new tenant CR or modify an existing sample, as described in Defining the Tenant Using the CR.

- Create the tenant using the tenant CR by executing the following command:

  kubectl apply -f <path-to-tenant-resource-yaml-file>

- Verify that the tenant has been created by executing the following command:

  kubectl get pods -n <tenant-namespace>
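To script the verification step, you can request JSON output from kubectl and inspect each pod's status.phase. A minimal Python sketch, assuming kubectl is on the PATH and configured for the cluster; the function names are hypothetical. Note that a Running phase means the pod's containers have started, not necessarily that they are ready.

```python
import json
import subprocess
from typing import Dict


def pod_phases(pods_json: str) -> Dict[str, str]:
    """Map pod name to status.phase from `kubectl get pods -o json` output."""
    doc = json.loads(pods_json)
    return {
        item["metadata"]["name"]: item.get("status", {}).get("phase", "Unknown")
        for item in doc.get("items", [])
    }


def pods_not_running(namespace: str) -> Dict[str, str]:
    """Return pods in `namespace` whose phase is neither Running nor Succeeded."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return {
        name: phase
        for name, phase in pod_phases(result.stdout).items()
        if phase not in ("Running", "Succeeded")
    }
```

An empty result from `pods_not_running("<tenant-namespace>")` suggests every tenant pod has started.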

You can now use the Spark operator to deploy Spark applications in the tenant namespace.